
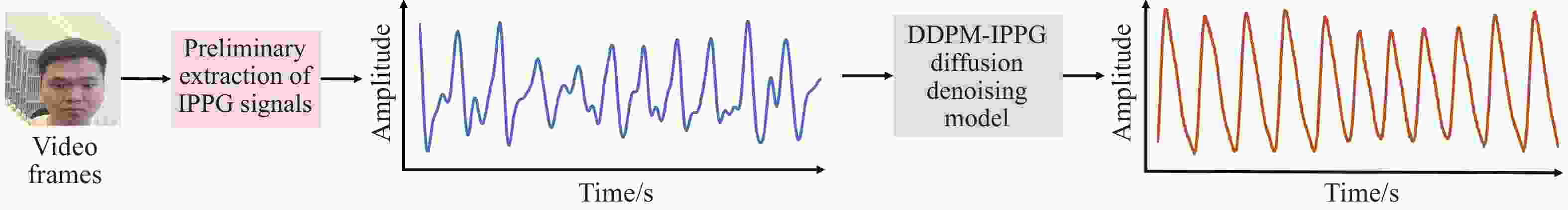

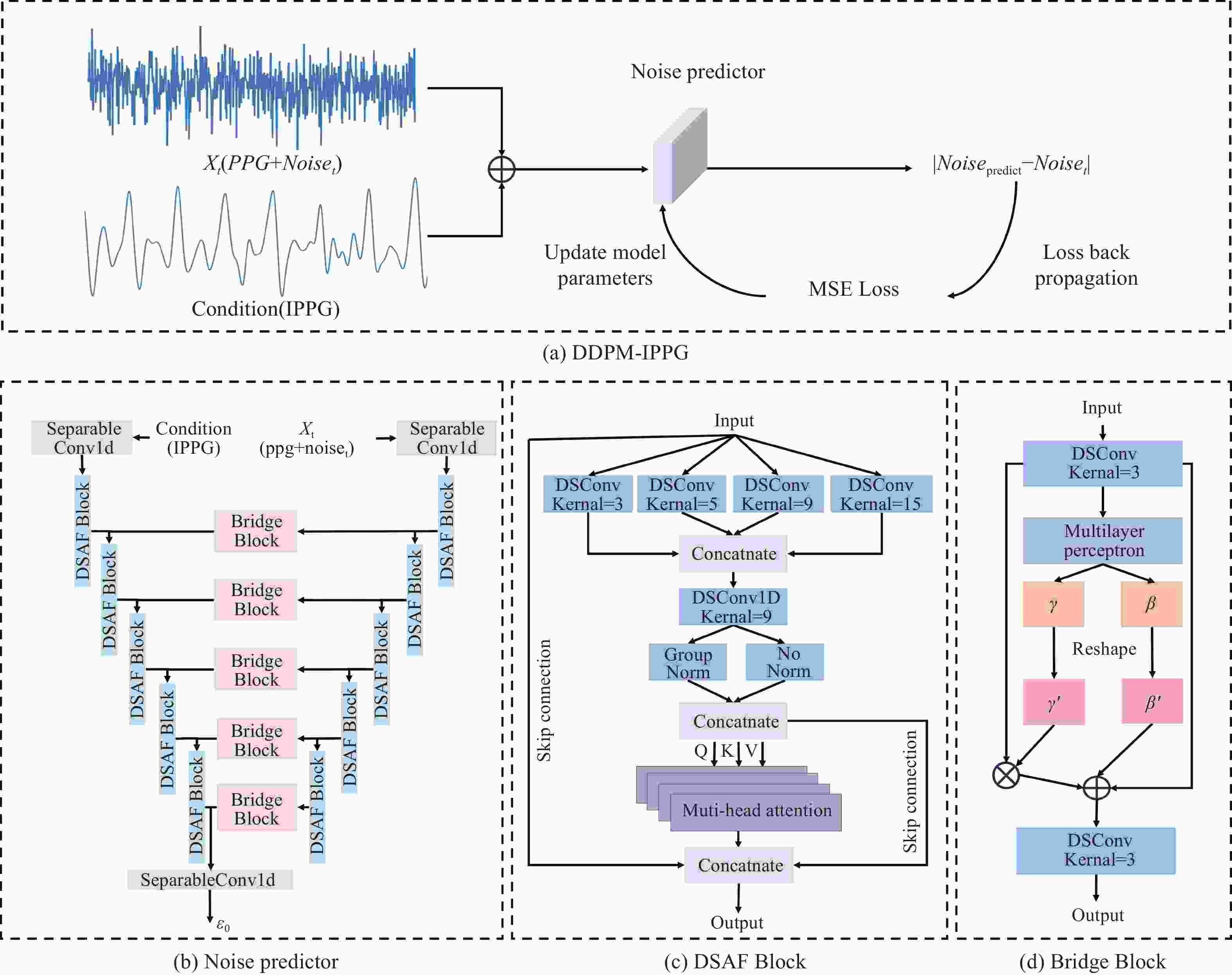

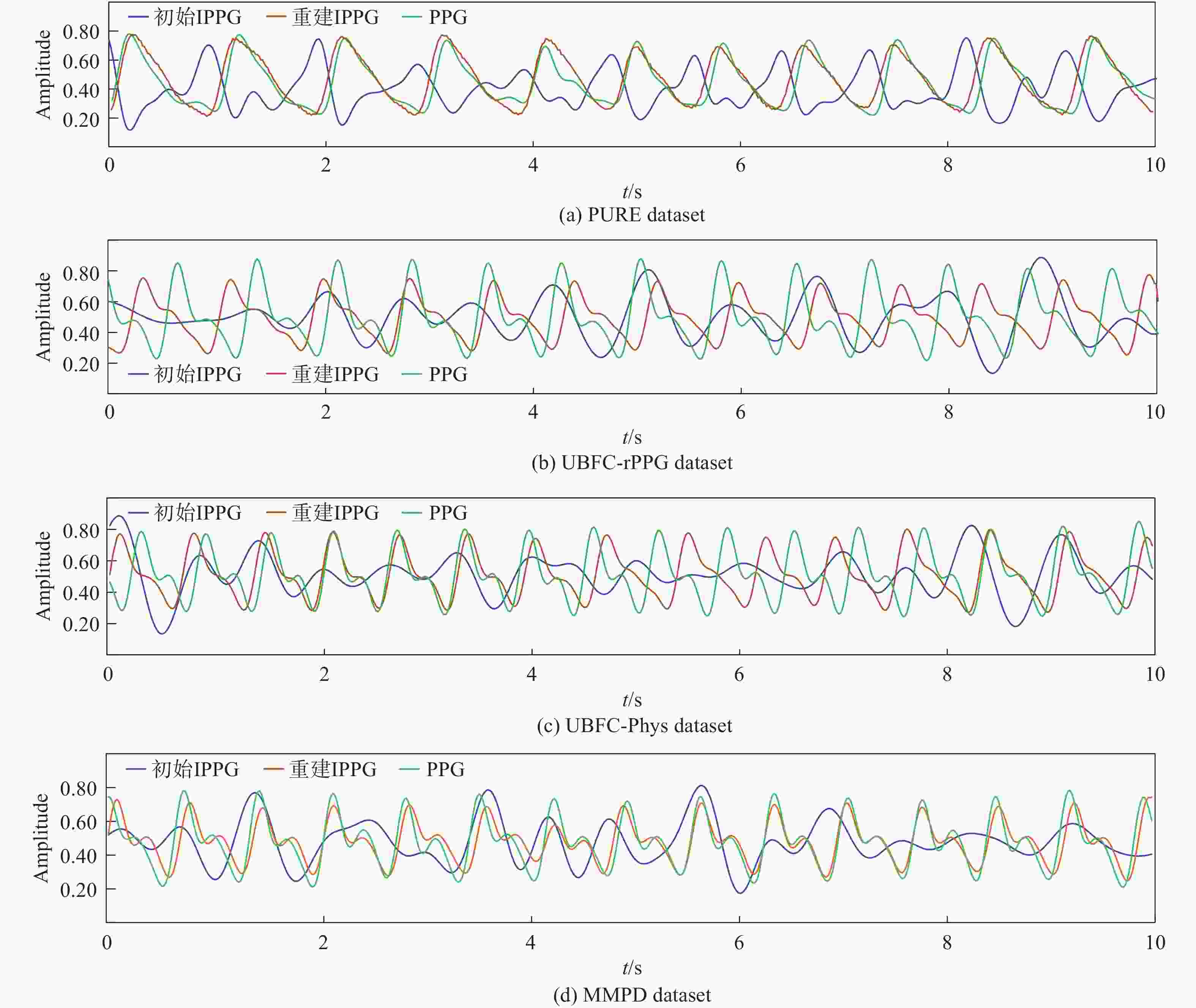

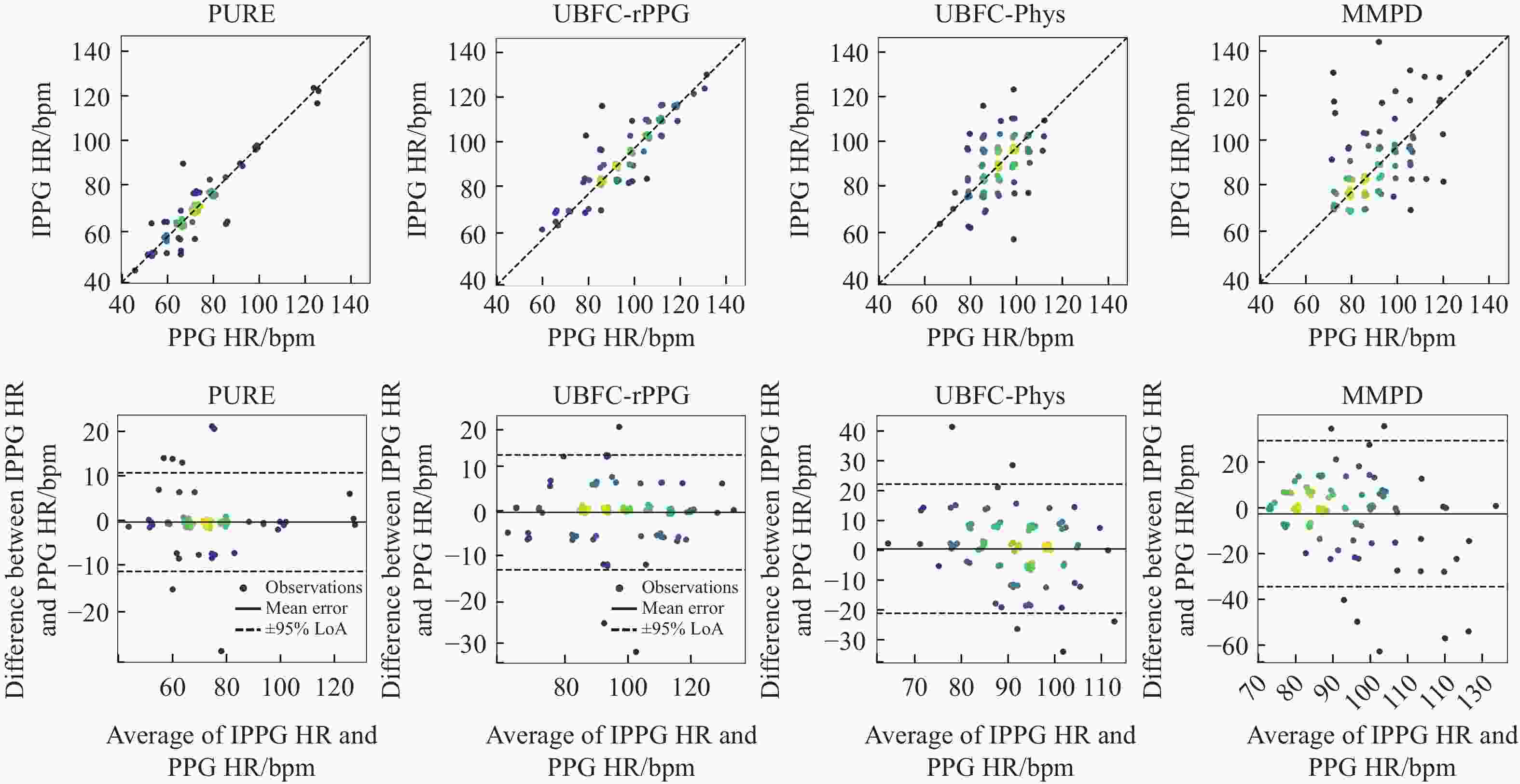

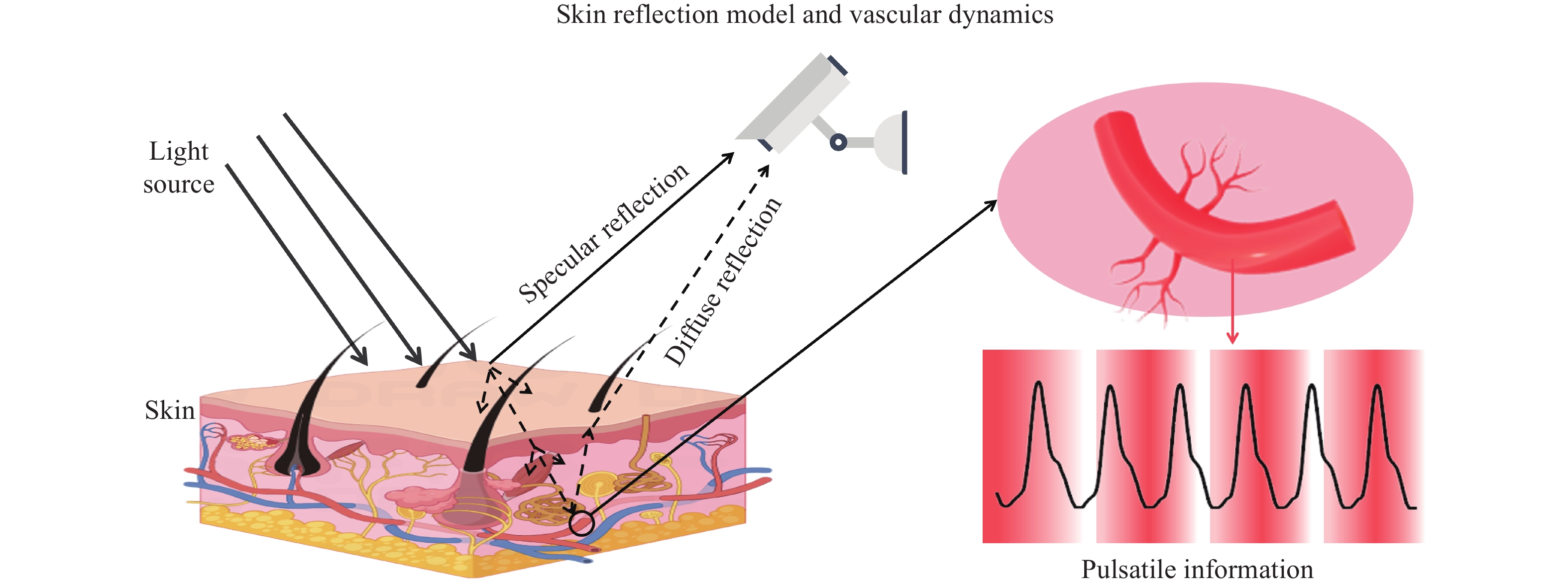

Abstract: Image photoplethysmography (IPPG) signals are easily corrupted by noise during acquisition. To address this problem, this study proposes a denoising diffusion probability model tailored to the noise distribution of IPPG (DDPM-IPPG). The model removes baseline drift and noise through a diffusion stage and a reverse diffusion stage, improving the signal-to-noise ratio (SNR) and the accuracy of downstream heart rate metrics. First, in the diffusion stage, Gaussian noise is gradually added to the photoplethysmography (PPG) signal to build a noise sequence, and a noise predictor based on a nonlinear fusion module and a bridging module is trained. Second, in the reverse diffusion stage, the trained noise predictor denoises the preliminarily extracted IPPG signal step by step, recovering an IPPG signal whose morphology resembles that of the PPG signal. Finally, the proposed model is validated and compared with current mainstream methods on the PURE, UBFC-rPPG, UBFC-Phys, and MMPD datasets. Compared with the most accurate existing extraction methods, DDPM-IPPG improves the SNR by 1.06 dB on the PURE dataset and reduces the mean absolute error and the root mean square error of heart rate by 0.24 bpm and 0.41 bpm, respectively; on the UBFC-rPPG dataset, it improves the SNR by 1.50 dB. The proposed DDPM-IPPG model reaches an advanced level in removing baseline drift and noise from IPPG signals, approximates the true signal more precisely, and provides a more reliable data foundation for physiological health assessment and telemedicine monitoring.
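The two stages named in the abstract follow the general pattern of a denoising diffusion probabilistic model. The sketch below illustrates that pattern on a toy 1-D signal in plain Python; it is not the authors' implementation. The linear noise schedule, the step count `T`, and the names `make_schedule`, `q_sample`, `predict_x0`, and `p_step` are assumptions made for illustration, and the trained noise predictor is replaced by an oracle that returns the true noise so that the arithmetic can be checked.

```python
import math
import random

def make_schedule(T=50, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule (an assumption) and cumulative products of alpha = 1 - beta."""
    betas = [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]
    alpha_bars, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)
    return betas, alpha_bars

def q_sample(x0, t, alpha_bars, rng):
    """Diffusion stage in closed form: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = math.sqrt(alpha_bars[t])
    s = math.sqrt(1.0 - alpha_bars[t])
    return [a * x + s * e for x, e in zip(x0, eps)], eps

def predict_x0(xt, eps_hat, t, alpha_bars):
    """Invert the closed-form expression given a noise estimate eps_hat."""
    a = math.sqrt(alpha_bars[t])
    s = math.sqrt(1.0 - alpha_bars[t])
    return [(x - s * e) / a for x, e in zip(xt, eps_hat)]

def p_step(xt, eps_hat, t, betas, alpha_bars, rng):
    """One reverse step x_t -> x_{t-1} using the standard DDPM posterior mean."""
    beta, alpha, abar = betas[t], 1.0 - betas[t], alpha_bars[t]
    coef = beta / math.sqrt(1.0 - abar)
    mean = [(x - coef * e) / math.sqrt(alpha) for x, e in zip(xt, eps_hat)]
    if t == 0:
        return mean  # no noise is added at the final step
    sigma = math.sqrt(beta)  # sigma_t^2 = beta_t, one common choice
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]

# Toy clean signal: a 1.2 Hz (72 bpm) sinusoid sampled at 30 Hz for 5 s.
rng = random.Random(0)
fs = 30.0
x0 = [math.sin(2 * math.pi * 1.2 * n / fs) for n in range(150)]

betas, alpha_bars = make_schedule()
xt, eps = q_sample(x0, 30, alpha_bars, rng)    # noisy version at step 30
x0_hat = predict_x0(xt, eps, 30, alpha_bars)   # oracle noise recovers x0
print(max(abs(a - b) for a, b in zip(x0, x0_hat)))  # close to zero
```

In a real system the oracle `eps` would be replaced by the output of the trained noise predictor, and `p_step` would be applied repeatedly from the chosen starting step down to step 0.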

Key words:

- imaging photoplethysmography
- signal denoising
- diffusion model
- attention mechanism

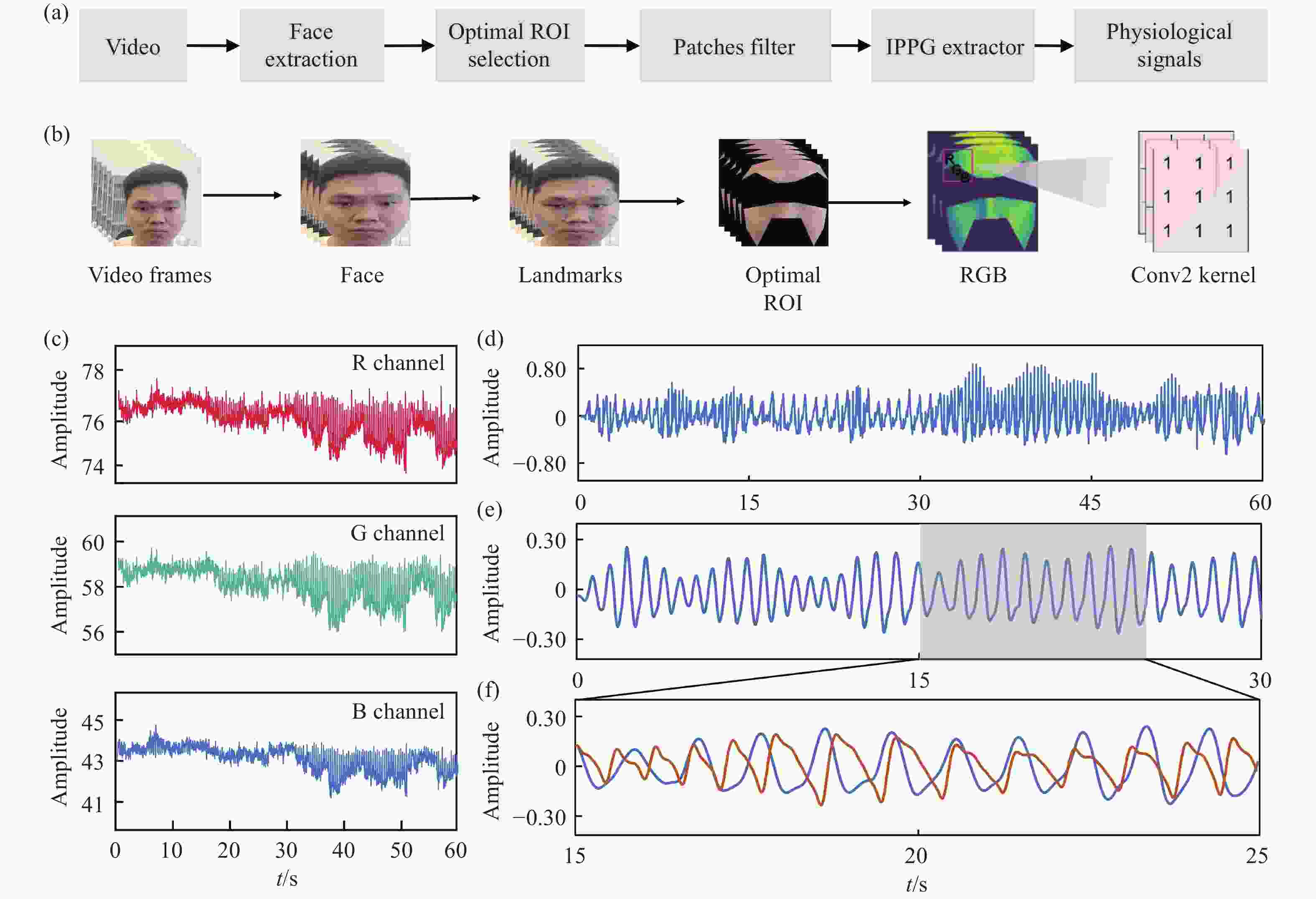

Figure 3. Preliminary extraction of IPPG signals. (a) IPPG signal extraction flowchart; (b) IPPG image processing module; (c) RGB image; (d) raw IPPG waveform; (e) filtered IPPG waveform; (f) comparison of the IPPG signal with the PPG signal (yellow portion) over a selected range of frames
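The caption distinguishes a raw IPPG waveform from a filtered one but does not say which filter is used. As a rough illustration of what removing slow baseline drift from a raw trace can look like, the sketch below subtracts a centered moving average. This is a simple stand-in chosen for clarity, not the filter used in the paper; the function name `moving_average_detrend`, the window length, and the toy signal are all assumptions.

```python
import math

def moving_average_detrend(x, window):
    """Subtract a centered moving average (truncated at the edges) from x."""
    half = window // 2
    out = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        baseline = sum(x[lo:hi]) / (hi - lo)  # local estimate of the slow drift
        out.append(x[i] - baseline)
    return out

# Toy raw trace: a 1.2 Hz pulse at 30 Hz plus a linear drift (illustrative values only).
fs = 30.0
pulse = [math.sin(2 * math.pi * 1.2 * n / fs) for n in range(150)]
raw = [0.05 * n + p for n, p in enumerate(pulse)]

# A 25-sample window spans exactly one period of the 1.2 Hz pulse at 30 Hz,
# so the moving average tracks the drift without flattening the pulse.
clean = moving_average_detrend(raw, window=25)
```

Away from the edges, `clean` matches `pulse` closely in this toy case; near the edges the truncated windows leave some residual drift, which is one reason band-pass filters are usually preferred in practice.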

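Table 1. IPPG datasets

| Dataset | Subjects | Videos | Video duration | Frame rate | Resolution | PPG sampling rate | Acquisition devices | Scene / conditions |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| PURE | 10 | 60 | 1 min | 30 Hz | 640×480 | 60 Hz | Eco274CVGE camera; CMS50E pulse oximeter | Six conditions: steady, talking, slow translation, fast translation, small rotation, medium rotation |
| UBFC-rPPG | 42 | 46 | 1 min | 30 Hz | 640×480 | 60 Hz | Logitech C920 camera; CMS50E oximeter | Indoor natural light; heart rate variation induced by a number game |
| UBFC-Phys | 56 | 168 | 3 min | 35 Hz | 1024×1024 | 64 Hz | E0-23121C RGB camera; Empatica E4 wristband | Indoor natural light; stress-inducing scenarios |
| MMPD | 33 | 660 | 1 min | 30 Hz | 320×240 | 30 Hz | Samsung Galaxy S22 phone; HKG-07C oximeter | Four lighting conditions (natural light, incandescent, low LED, high LED) and four activities (stationary, head rotation, talking, walking) |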

Table 2. Comparison of IPPG extraction methods on the PURE dataset

| Method | SNR (dB)↑ | HR MAE (bpm)↓ | HR RMSE (bpm)↓ | HR r↑ | AVNN MAE (ms)↓ | AVNN RMSE (ms)↓ | SDNN MAE (ms)↓ | SDNN RMSE (ms)↓ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CHROM[18] | 5.35 | 4.85 | 16.27 | 0.72 | 40.19 | − | 42.18 | − |
| POS[19] | − | 2.45 | 3.88 | 0.85 | 10.83 | 16.42 | 21.56 | 30.86 |
| ESA-rPPGNet[26] | − | − | − | − | 8.92 | − | 11.75 | − |
| PhysFormer[23] | 6.31 | 4.36 | 13.00 | 0.76 | 24.83 | − | 29.11 | − |
| DiffPhys[30] | 10.07 | 1.46 | 5.88 | 0.90 | 13.75 | − | 21.10 | − |
| PulseGAN[29] | 6.69 | 2.01 | 6.87 | 0.87 | 24.04 | − | 45.63 | − |
| DeepPhys[20] | 6.32 | 3.96 | 12.95 | 0.76 | 25.55 | − | 41.20 | − |
| rPPG-MAE[27] | − | 0.40 | 0.92 | 0.99 | − | − | − | − |
| SiNC[28] | − | 0.61 | 1.84 | 1.00 | − | − | − | − |
| STFPNet[31] | − | 0.47 | 0.69 | 0.99 | − | − | − | − |
| RhythmFormer[25] | − | 0.27 | 0.47 | 0.99 | − | − | − | − |
| Ours | 11.13 | 0.03 | 0.06 | 0.99 | 8.90 | 9.66 | 42.67 | 49.66 |

Table 3. Comparison of IPPG extraction methods on the UBFC-rPPG dataset

| Method | SNR (dB)↑ | HR MAE (bpm)↓ | HR RMSE (bpm)↓ | HR r↑ | AVNN MAE (ms)↓ | AVNN RMSE (ms)↓ | SDNN MAE (ms)↓ | SDNN RMSE (ms)↓ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| POS[19] | − | 2.47 | 3.88 | 0.84 | 12.76 | 18.28 | 21.76 | 31.40 |
| CHROM[18] | 4.92 | 3.19 | 9.98 | 0.90 | 29.11 | − | 24.21 | − |
| ESA-rPPGNet[26] | − | − | − | − | 5.14 | − | 13.76 | − |
| PhysFormer[23] | 6.01 | 2.83 | 6.43 | 0.99 | 7.11 | − | 13.49 | − |
| DiffPhys[30] | 7.98 | 1.05 | 1.63 | 0.99 | 7.11 | − | 13.49 | − |
| PulseGAN[29] | 7.90 | 1.19 | 2.10 | 0.97 | 7.52 | − | 18.36 | − |
| rPPG-MAE[27] | − | 0.17 | 0.21 | 0.99 | − | − | − | − |
| STFPNet[31] | − | 0.41 | 0.95 | 0.99 | − | − | − | − |
| RhythmFormer[25] | − | 0.50 | 0.78 | 0.99 | − | − | − | − |
| Ours | 9.48 | 0.50 | 0.74 | 0.99 | 7.46 | 10.64 | 21.71 | 30.90 |

Table 4. Comparison of IPPG extraction methods on the UBFC-Phys and MMPD datasets

| Method | UBFC-Phys HR MAE (bpm)↓ | UBFC-Phys HR RMSE (bpm)↓ | UBFC-Phys HR r↑ | MMPD HR MAE (bpm)↓ | MMPD HR RMSE (bpm)↓ | MMPD HR r↑ |
| --- | --- | --- | --- | --- | --- | --- |
| GREEN[40] | 13.55 | 18.80 | 0.29 | 21.68 | 27.69 | −0.01 |
| ICA[17] | 10.04 | 15.73 | 0.36 | 18.60 | 24.30 | 0.01 |
| CHROM[18] | 4.49 | 7.56 | 0.80 | 13.66 | 18.76 | 0.08 |
| LGI[41] | 6.27 | 10.41 | 0.70 | 17.08 | 23.32 | 0.04 |
| PBV[42] | 12.34 | 17.43 | 0.33 | 17.95 | 23.58 | 0.09 |
| POS[19] | 4.51 | 8.16 | 0.77 | 12.36 | 17.71 | 0.18 |
| DeepPhys[20] | − | − | − | 22.27 | 28.92 | −0.03 |
| PhysNet[24] | − | − | − | 4.80 | 11.80 | 0.60 |
| TS-CAN[21] | − | − | − | 9.71 | 17.22 | 0.44 |
| PhysFormer[23] | − | − | − | 11.99 | 18.41 | 0.18 |
| EfficientPhys[22] | − | − | − | 13.47 | 21.32 | 0.21 |
| RhythmFormer[25] | − | − | − | 3.07 | 6.81 | 0.86 |
| Ours | 1.40 | 3.51 | 0.89 | 5.99 | 10.39 | 0.63 |
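The comparison tables report MAE, RMSE, and the Pearson correlation r for heart rate, and MAE and RMSE for the heart rate variability measures AVNN and SDNN. The sketch below shows the usual textbook definitions of these quantities in plain Python. It is a sketch under stated assumptions rather than the evaluation code behind the tables: the function names are hypothetical, the sample (n − 1) standard deviation is assumed for SDNN, and the toy numbers are invented for illustration and are not data from the paper.

```python
import math
import statistics

def mae(pred, ref):
    """Mean absolute error between paired estimates and references."""
    return sum(abs(p - r) for p, r in zip(pred, ref)) / len(ref)

def rmse(pred, ref):
    """Root mean square error between paired estimates and references."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))

def pearson_r(pred, ref):
    """Pearson correlation coefficient between estimates and references."""
    n = len(ref)
    mp, mr = sum(pred) / n, sum(ref) / n
    cov = sum((p - mp) * (r - mr) for p, r in zip(pred, ref))
    sp = math.sqrt(sum((p - mp) ** 2 for p in pred))
    sr = math.sqrt(sum((r - mr) ** 2 for r in ref))
    return cov / (sp * sr)

def nn_intervals_ms(peak_times_s):
    """Intervals between successive pulse peaks, converted from seconds to ms."""
    return [(b - a) * 1000.0 for a, b in zip(peak_times_s, peak_times_s[1:])]

def avnn(nn):
    """AVNN: average of the NN (beat-to-beat) intervals."""
    return statistics.mean(nn)

def sdnn(nn):
    """SDNN: standard deviation of the NN intervals (sample form, assumed)."""
    return statistics.stdev(nn)

# Toy heart rates in bpm (illustrative values only).
hr_ref = [60.0, 72.0, 85.0, 90.0]
hr_pred = [61.0, 71.0, 86.0, 88.0]
print(mae(hr_pred, hr_ref), rmse(hr_pred, hr_ref), pearson_r(hr_pred, hr_ref))

# Toy pulse peak times in seconds (illustrative values only).
peaks = [0.0, 0.8, 1.7, 2.5, 3.4]
nn = nn_intervals_ms(peaks)
print(avnn(nn), sdnn(nn))
```

The AVNN and SDNN columns in the tables would then be obtained by applying `mae` and `rmse` to per-recording AVNN or SDNN values from the IPPG signal and from the reference PPG. The gains quoted in the abstract correspond to differences against the best prior entry in each column of Tables 2 and 3: 11.13 − 10.07 = 1.06 dB and 9.48 − 7.98 = 1.50 dB for SNR (both against DiffPhys), and 0.27 − 0.03 = 0.24 bpm and 0.47 − 0.06 = 0.41 bpm for heart rate MAE and RMSE on PURE (both against RhythmFormer).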

[1] ZHANG X B, XIA ZH Q, DAI J, et al. MSDN: a multistage deep network for heart-rate estimation from facial videos[J]. IEEE Transactions on Instrumentation and Measurement, 2023, 72: 5032415.

[2] TAO X, SU L W, RAO ZH, et al. Facial video-based non-contact emotion recognition: a multi-view features expression and fusion method[J]. Biomedical Signal Processing and Control, 2024, 96: 106608. doi: 10.1016/j.bspc.2024.106608

[3] HUANG Kai, WANG Feng, WANG Ye, et al. Multi-attention micro-expression recognition based on color and optical flow[J]. Chinese Journal of Liquid Crystals and Displays, 2024, 39(7): 939-949. (in Chinese)

[4] GUARDUCCI S, JAYOUSI S, CAPUTO S, et al. Key fundamentals and examples of sensors for human health: wearable, non-continuous, and non-contact monitoring devices[J]. Sensors, 2025, 25(2): 556. doi: 10.3390/s25020556

[5] LEE R J, SIVAKUMAR S, LIM K H. Review on remote heart rate measurements using photoplethysmography[J]. Multimedia Tools and Applications, 2024, 83(15): 44699-44728.

[6] PEREPELKINA O, ARTEMYEV M, CHURIKOVA M, et al. HeartTrack: convolutional neural network for remote video-based heart rate monitoring[C]. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), IEEE, 2020: 1163-1171.

[7] YU Z T, PENG W, LI X B, et al. Remote heart rate measurement from highly compressed facial videos: an end-to-end deep learning solution with video enhancement[C]. 2019 IEEE/CVF International Conference on Computer Vision (ICCV), IEEE, 2019: 151-160.

[8] CASADO C Á, LÓPEZ M B. Face2PPG: an unsupervised pipeline for blood volume pulse extraction from faces[J]. IEEE Journal of Biomedical and Health Informatics, 2023, 27(11): 5530-5541. doi: 10.1109/JBHI.2023.3307942

[9] ALNAGGAR M, SIAM A I, HANDOSA M, et al. Video-based real-time monitoring for heart rate and respiration rate[J]. Expert Systems with Applications, 2023, 225: 120135. doi: 10.1016/j.eswa.2023.120135

[10] ZOU B CH, GUO Z ZH, HU X CH, et al. RhythmMamba: fast, lightweight, and accurate remote physiological measurement[C]. Proceedings of the 39th AAAI Conference on Artificial Intelligence, AAAI Press, 2025: 11077-11085.

[11] WU B W, JIANG T, YU ZH X, et al. Proximity sensing electronic skin: principles, characteristics, and applications[J]. Advanced Science, 2024, 11(13): 2308560. doi: 10.1002/advs.202308560

[12] RAO ZH, LI B L, SUI Y R, et al. Image photoplethysmography for mental stress detection[J]. Chinese Optics, 2022, 15(6): 1350-1359. (in Chinese)

[13] JI X Q, LIU ZH Y, LI B L, et al. Non-contact perception of physiological parameters from videos of faces[J]. Chinese Optics, 2022, 15(2): 276-285. (in Chinese) doi: 10.37188/CO.2021-0157

[14] CASADO C Á, CAÑELLAS M L, LÓPEZ M B. Depression recognition using remote photoplethysmography from facial videos[J]. IEEE Transactions on Affective Computing, 2023, 14(4): 3305-3316. doi: 10.1109/TAFFC.2023.3238641

[15] ANIL A A, KARTHIK S, SIVAPRAKASAM M, et al. Dynamic ROI adaptation for accurate non-contact heart rate estimation using VGG-13 based encoder-decoder model and facial landmarks[C]. ICASSP 2025 - 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, 2025: 1-5.

[16] POH M Z, MCDUFF D J, PICARD R W. Advancements in noncontact, multiparameter physiological measurements using a webcam[J]. IEEE Transactions on Biomedical Engineering, 2011, 58(1): 7-11. doi: 10.1109/TBME.2010.2086456

[17] DE HAAN G, JEANNE V. Robust pulse rate from chrominance-based rPPG[J]. IEEE Transactions on Biomedical Engineering, 2013, 60(10): 2878-2886. doi: 10.1109/TBME.2013.2266196

[18] WANG W J, DEN BRINKER A C, STUIJK S, et al. Algorithmic principles of remote PPG[J]. IEEE Transactions on Biomedical Engineering, 2017, 64(7): 1479-1491. doi: 10.1109/TBME.2016.2609282

[19] CHEN Sen-lu, LIU Yu-liang, XU Tuan-wei. Video heart rate measurements based on adaptive region of interest[J]. Optics and Precision Engineering, 2021, 29(7): 1740-1749. (in Chinese)

[20] CHEN W X, MCDUFF D. DeepPhys: video-based physiological measurement using convolutional attention networks[C]. Proceedings of the 15th European Conference on Computer Vision - ECCV 2018, Springer, 2018: 356-373.

[21] LIU X, FROMM J, PATEL S, et al. Multi-task temporal shift attention networks for on-device contactless vitals measurement[C]. Proceedings of the 34th International Conference on Neural Information Processing Systems, Curran Associates Inc., 2020: 1627.

[22] LIU X, HILL B, JIANG Z H, et al. EfficientPhys: enabling simple, fast and accurate camera-based cardiac measurement[C]. 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), IEEE, 2023: 4997-5006.

[23] YU Z T, SHEN Y M, SHI J G, et al. PhysFormer: facial video-based physiological measurement with temporal difference transformer[C]. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, 2022: 4176-4186.

[24] YU Z T, LI X B, ZHAO G Y. Remote photoplethysmograph signal measurement from facial videos using spatio-temporal networks[C]. Proceedings of the 30th British Machine Vision Conference 2019, BMVA Press, 2019: 277.

[25] ZOU B CH, GUO Z ZH, CHEN J SH, et al. RhythmFormer: extracting patterned rPPG signals based on periodic sparse attention[J]. Pattern Recognition, 2025, 164: 111511. doi: 10.1016/j.patcog.2025.111511

[26] KUANG H L, LV F B, MA X L, et al. Efficient spatiotemporal attention network for remote heart rate variability analysis[J]. Sensors, 2022, 22(3): 1010. doi: 10.3390/s22031010

[27] LIU X, ZHANG Y T, YU Z T, et al. rPPG-MAE: self-supervised pretraining with masked autoencoders for remote physiological measurements[J]. IEEE Transactions on Multimedia, 2024, 26: 7278-7293. doi: 10.1109/TMM.2024.3363660

[28] SPETH J, VANCE N, FLYNN P, et al. Non-contrastive unsupervised learning of physiological signals from video[C]. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, 2023: 14464-14474.

[29] SONG R CH, CHEN H, CHENG J, et al. PulseGAN: learning to generate realistic pulse waveforms in remote photoplethysmography[J]. IEEE Journal of Biomedical and Health Informatics, 2021, 25(5): 1373-1384. doi: 10.1109/JBHI.2021.3051176

[30] CHEN SH T, WONG K L, CHIN J W, et al. DiffPhys: enhancing signal-to-noise ratio in remote photoplethysmography signal using a diffusion model approach[J]. Bioengineering, 2024, 11(8): 743. doi: 10.3390/bioengineering11080743

[31] LI ZH P, XIAO H G, XIA Z Y, et al. STFPNet: a simple temporal feature pyramid network for remote heart rate measurement[J]. Measurement, 2025, 252: 117287. doi: 10.1016/j.measurement.2025.117287

[32] HUANG B, HU SH, LIU Z M, et al. Challenges and prospects of visual contactless physiological monitoring in clinical study[J]. npj Digital Medicine, 2023, 6(1): 231. doi: 10.1038/s41746-023-00973-x

[33] LUGARESI C, TANG J Q, NASH H, et al. MediaPipe: a framework for building perception pipelines[J]. arXiv: 1906.08172, 2019.

[34] HO J, JAIN A, ABBEEL P. Denoising diffusion probabilistic models[C]. Proceedings of the 34th International Conference on Neural Information Processing Systems, Curran Associates Inc., 2020: 574.

[35] SOHL-DICKSTEIN J, WEISS E A, MAHESWARANATHAN N, et al. Deep unsupervised learning using nonequilibrium thermodynamics[C]. Proceedings of the 32nd International Conference on Machine Learning, JMLR.org, 2015: 2256-2265.

[36] STRICKER R, MÜLLER S, GROSS H M. Non-contact video-based pulse rate measurement on a mobile service robot[C]. Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, IEEE, 2014: 1056-1062.

[37] BOBBIA S, MACWAN R, BENEZETH Y, et al. Unsupervised skin tissue segmentation for remote photoplethysmography[J]. Pattern Recognition Letters, 2019, 124: 82-90. doi: 10.1016/j.patrec.2017.10.017

[38] TANG J K, CHEN K Q, WANG Y T, et al. MMPD: multi-domain mobile video physiology dataset[C]. 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), IEEE, 2023: 1-5.

[39] SABOUR R M, BENEZETH Y, DE OLIVEIRA P, et al. UBFC-Phys: a multimodal database for psychophysiological studies of social stress[J]. IEEE Transactions on Affective Computing, 2023, 14(1): 622-636. doi: 10.1109/TAFFC.2021.3056960

[40] VERKRUYSSE W, SVAASAND L O, NELSON J S. Remote plethysmographic imaging using ambient light[J]. Optics Express, 2008, 16(26): 21434-21445. doi: 10.1364/OE.16.021434

[41] PILZ C S, ZAUNSEDER S, KRAJEWSKI J, et al. Local group invariance for heart rate estimation from face videos in the wild[C]. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), IEEE, 2018: 1254-1262.

[42] DE HAAN G, VAN LEEST A. Improved motion robustness of remote-PPG by using the blood volume pulse signature[J]. Physiological Measurement, 2014, 35(9): 1913-1926. doi: 10.1088/0967-3334/35/9/1913
