-

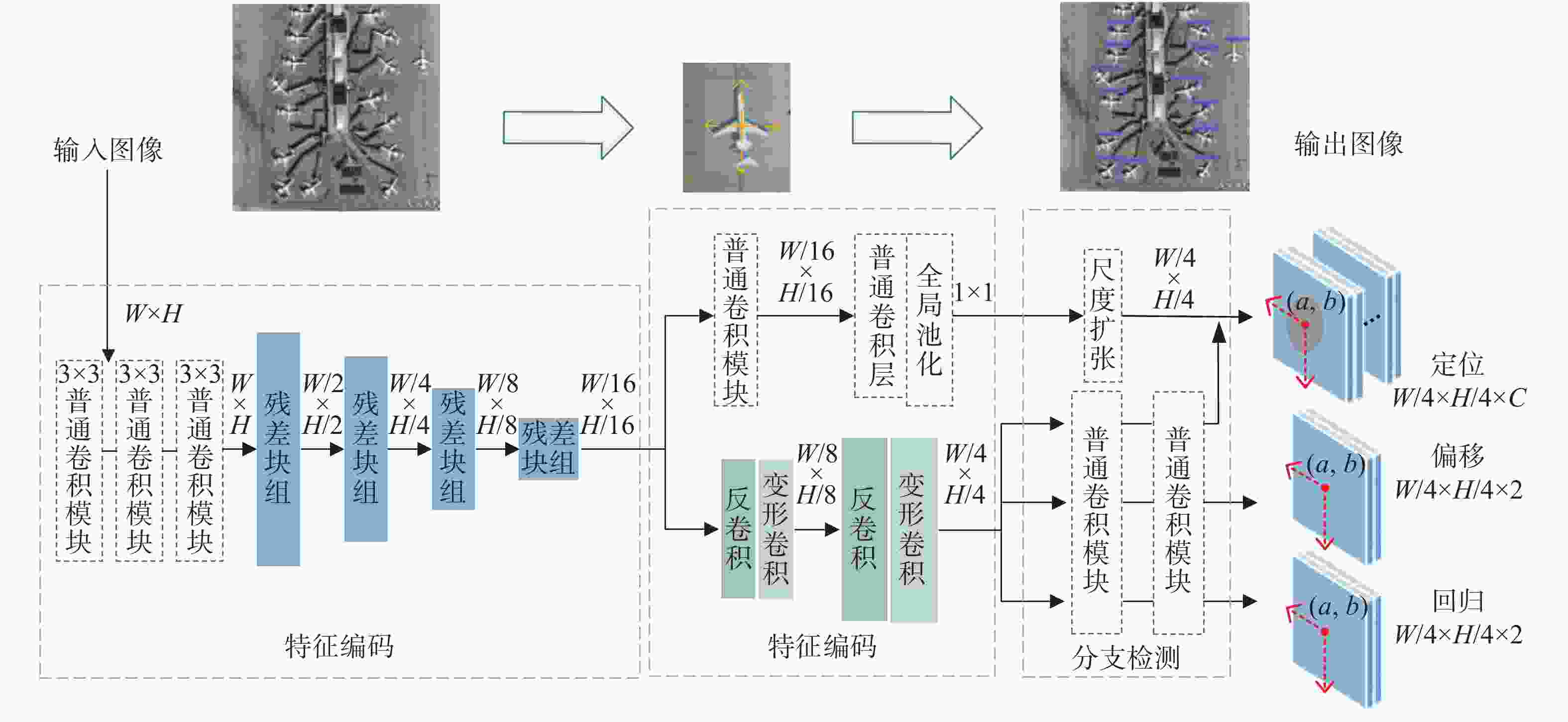

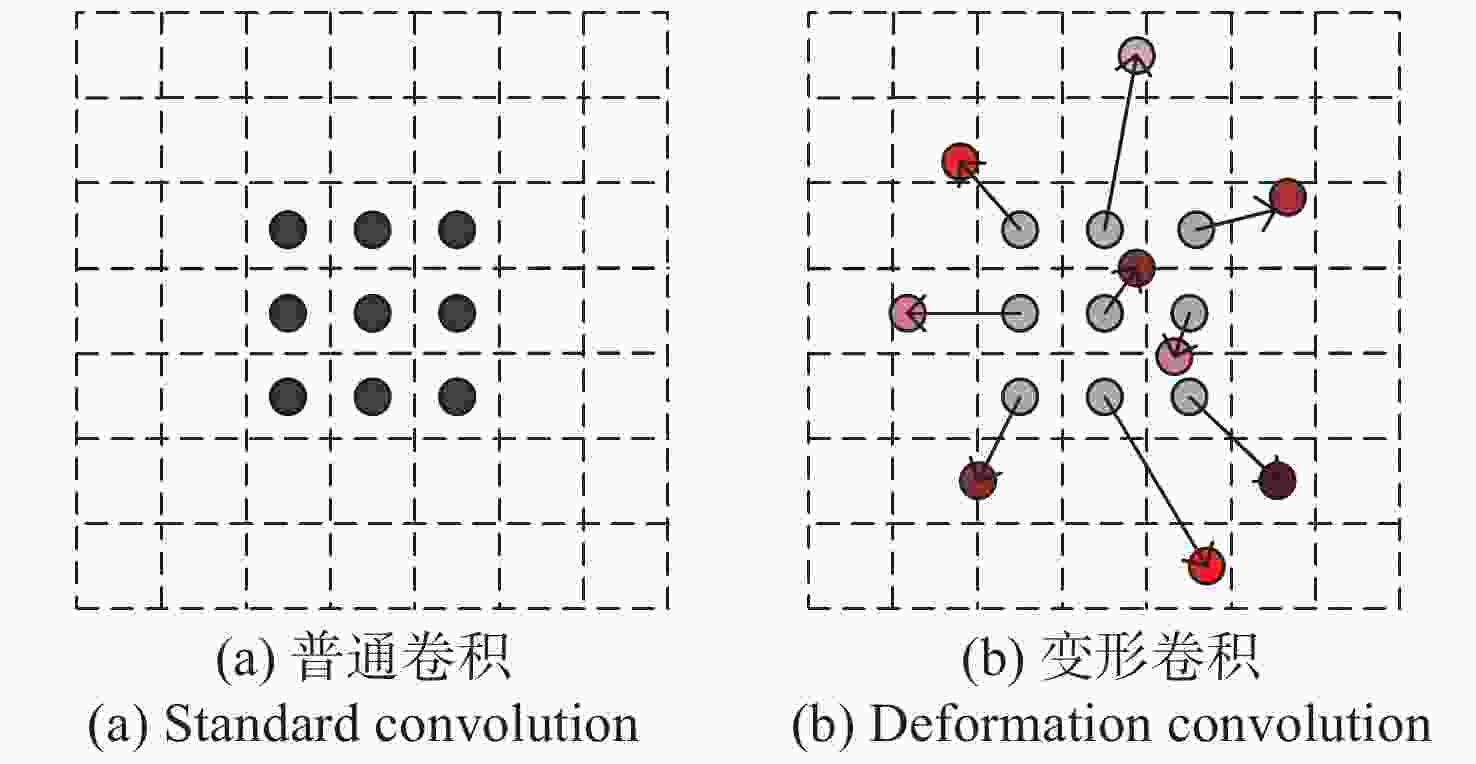

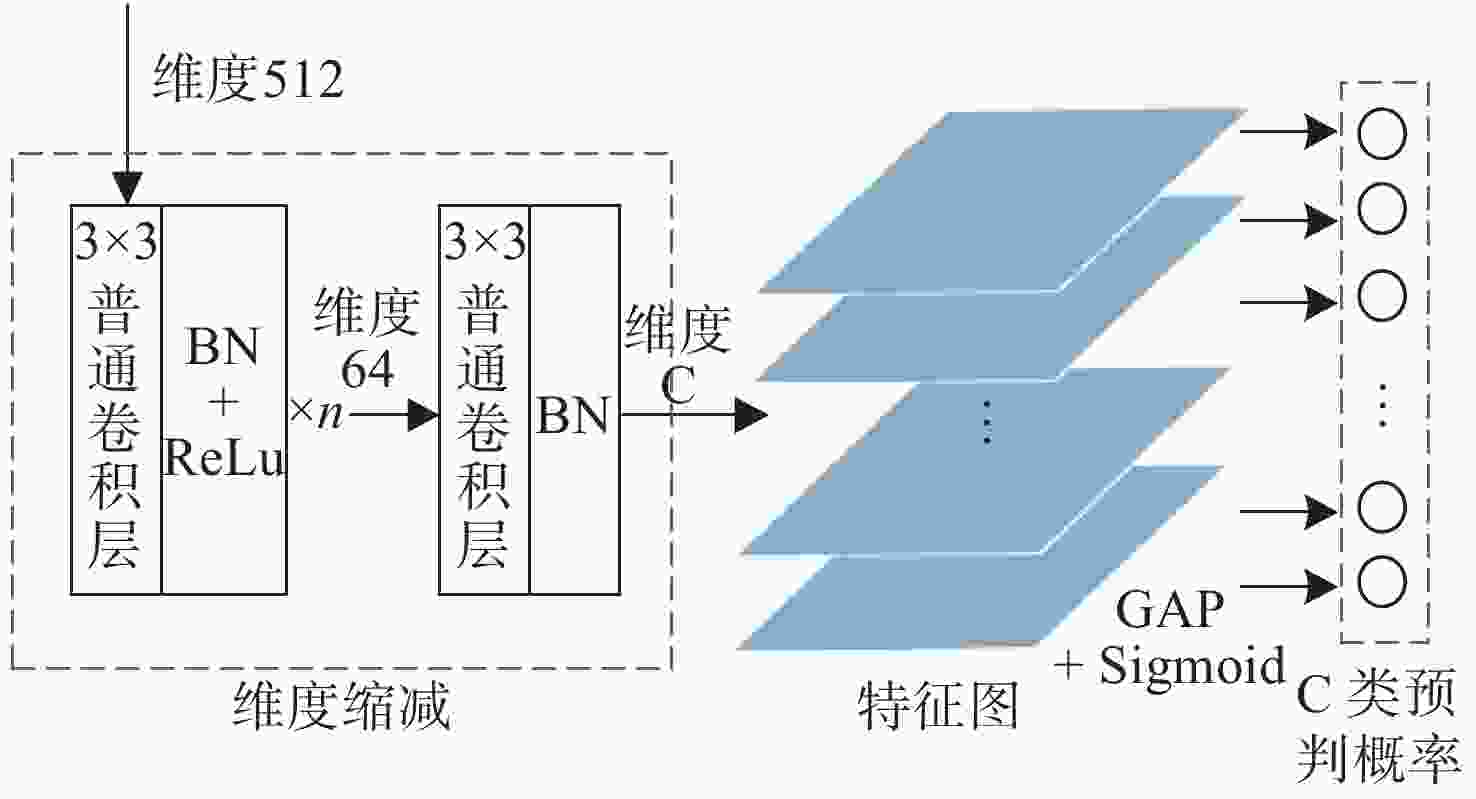

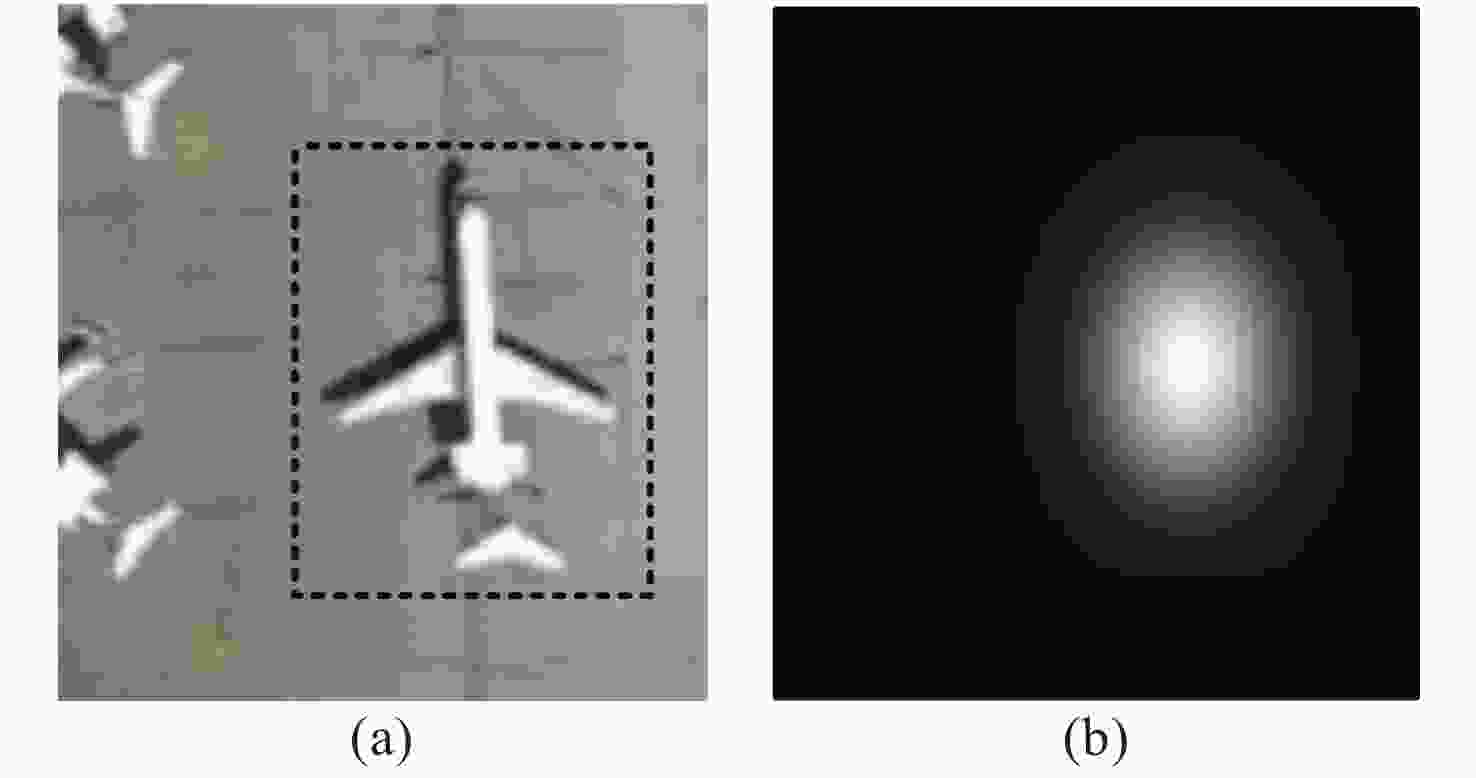

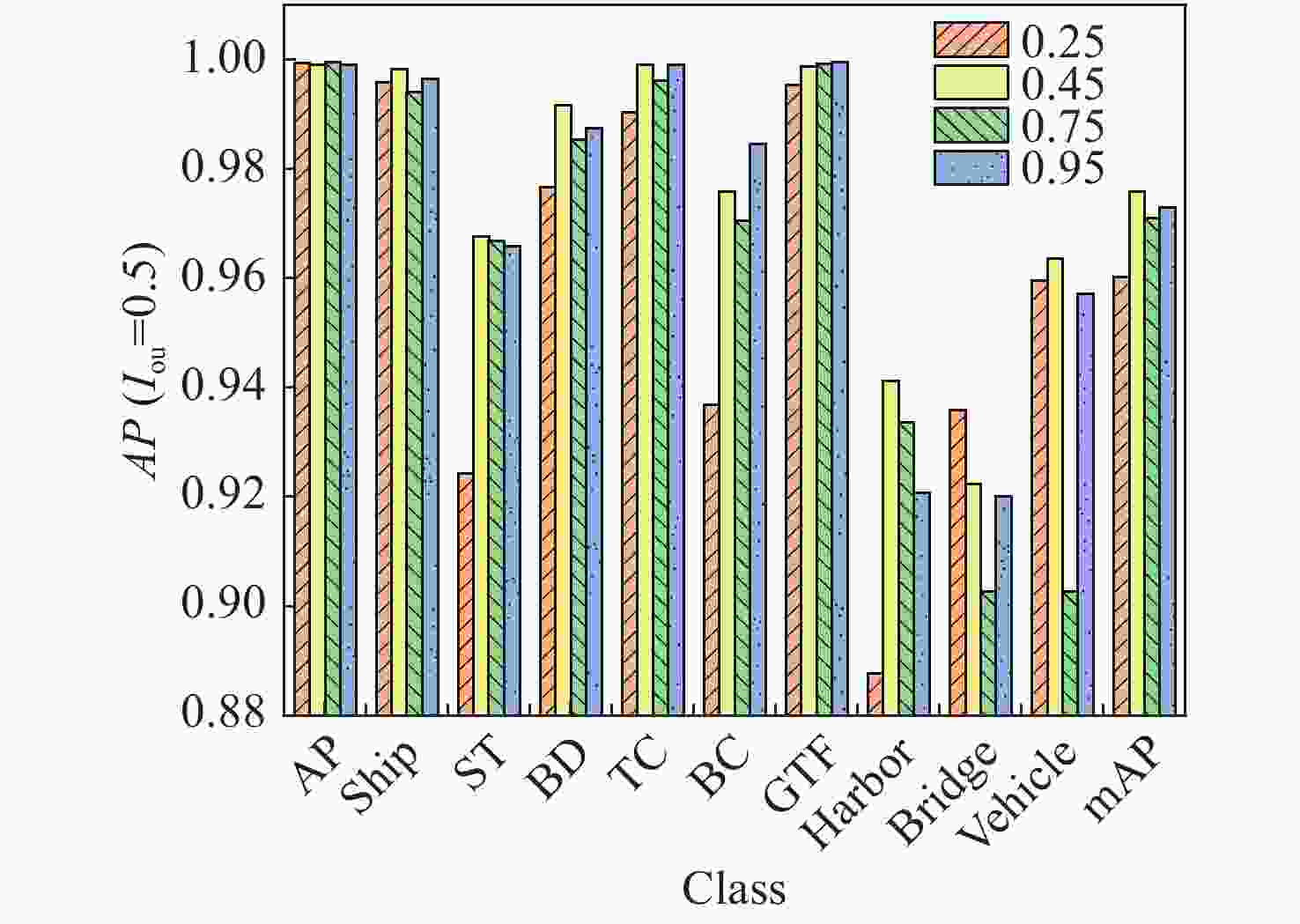

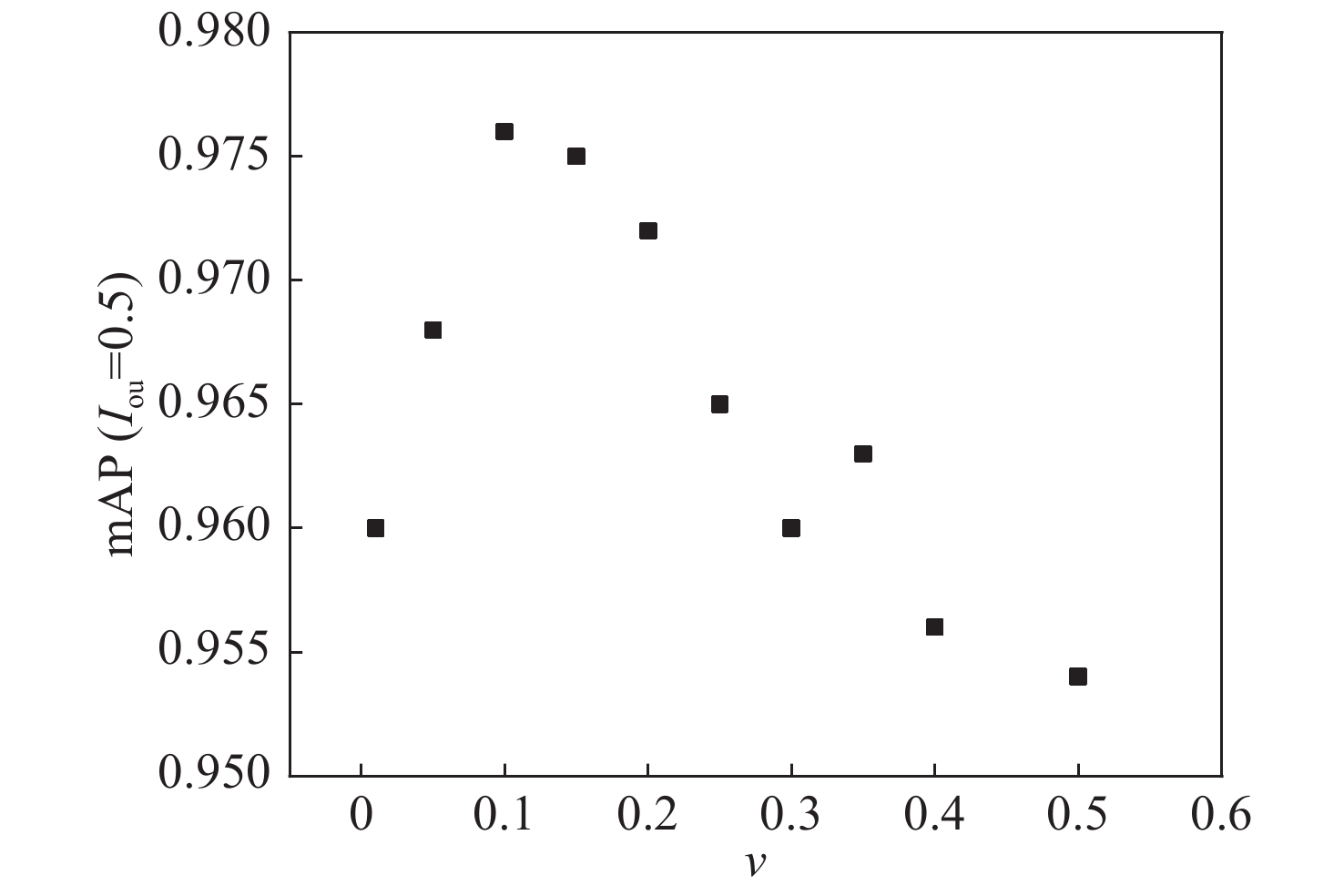

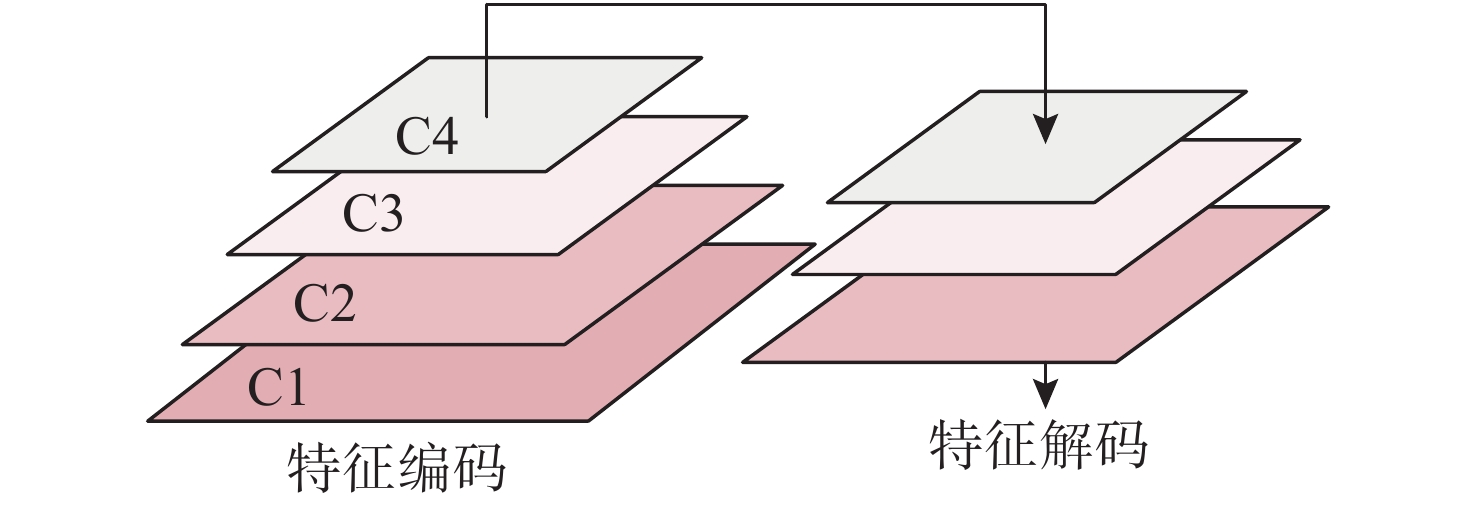

摘要: 在复杂背景下的光学遥感图像目标检测中,为了提高检测精度,同时降低检测网络复杂度,提出了面向光学遥感目标的全局上下文检测模型。首先,采用结构简单的特征编码-特征解码网络进行特征提取。其次,为提高对多尺度目标的定位能力,采取全局上下文特征与目标中心点局部特征相结合的方式生成高分辨率热点图,并利用全局特征实现目标的预分类。最后,提出不同尺度的定位损失函数,用于增强模型的回归能力。实验结果表明: 当使用主干网络Root-ResNet18时,本文模型在公开遥感数据集NWPU VHR-10上的检测精度可达97.6%AP50和83.4%AP75,检测速度达16 PFS,基本满足设计需求,实现了网络速度和精度的有效平衡,便于后续算法在移动设备端的移植和应用。Abstract: To improve the detection accuracy and reduce the complexity of optical remote sensing of target images with a complex background, a global context detection model based on optical remote sensing of targets is proposed. First, a feature encoder-feature decoder network is used for feature extraction. Then, to improve the positioning ability of multi-scale targets, a method that combines global-contextual features and target center local features is used to generate high-resolution heat maps. The global features are used to achieve the pre-classification of targets. Finally, a positioning loss function at different scales is proposed to enhance the regression ability of the model. Experimental results show that the mean average precision of the proposed model reaches 97.6% AP50 and 83.4% AP75 on the NWPU VHR-10 public remote sensing data set, and the speed reaches 16 PFS. This design can achieve an effective balance between accuracy and speed. It facilitates subsequent porting and application of the algorithm on the mobile device side, which meets design requirements.

-

表 1 ResNet18与Root-ResNet18结构

Table 1. Structures of ResNet18 and Root-ResNet18

阶段 输出尺寸 ResNet18 Root-ResNet18 C1 128×128 7×7, 64 3×3, 64 3×3, 64 3×3, 64 3×3, MaxPool $\left[ {\begin{array}{*{20}{c}} {3 \times 3,64} \\ {3 \times 3,64} \end{array}} \right]\times 2$ C2 64×64 3×3, MaxPool $\left[ {\begin{array}{*{20}{c}} {3 \times 3,128} \\ {3 \times 3,128} \end{array}} \right]\times 2$ $\left[ {\begin{array}{*{20}{c}} {3 \times 3,64} \\ {3 \times 3,64} \end{array}} \right]\times 2$ C3 32×32 $\left[ {\begin{array}{*{20}{c}} {3 \times 3,128} \\ {3 \times 3,128} \end{array}} \right]\times 2$ $\left[ {\begin{array}{*{20}{c}} {3 \times 3,256} \\ {3 \times 3,256} \end{array}} \right]\times 2$ C4 16×16 $\left[ {\begin{array}{*{20}{c}} {3 \times 3,256} \\ {3 \times 3,256} \end{array}} \right]\times 2$ $\left[ {\begin{array}{*{20}{c}} {3 \times 3,512} \\ {3 \times 3,512} \end{array}} \right]\times 2$ C5 8×8 $\left[ {\begin{array}{*{20}{c}} {3 \times 3,512} \\ {3 \times 3,512} \end{array}} \right]\times 2$ — 表 2 数据集NWPU VHR-10目标尺寸统计表

Table 2. Statistics of target sizes in the NWPU VHR-10 dataset

尺度(pixel) 0−10 10−40 40−100 100−300 300−500 500以上 宽 0 0.1327 0.6948 0.1599 0.0126 0 高 0 0.1448 0.7205 0.1242 0.0105 0 表 3 不同模型在数据集NWPU VHR-10上的平均精确度对比

Table 3. Comparison of mean average precisions of different models in the NWPU VHR-10 dataset

模型 主干网络 飞机 船舰 油罐 棒球场 网球场 篮球场 田径场 港口 桥梁 车辆 平均精确度(mAP) RICAOD AlexNet 0.9970 0.9080 0.9061 0.9291 0.9029 0.8031 0.9081 0.8029 0.6853 0.8714 0.8712 SSD VGG16 0.9839 0.8993 0.8918 0.9851 0.8791 0.8481 0.9949 0.7730 0.7828 0.8739 0.8912 YOLOv3 Darknet53 0.9091 0.9091 0.9081 0.9913 0.9086 0.9091 0.9947 0.9005 0.9091 0.9035 0.9243 MMDFN VGG16 0.9934 0.9227 0.9918 0.9668 0.9632 0.9756 1.0000 0.9740 0.8027 0.9136 0.9504 MSDN ResNet50 0.9976 0.9721 0.8383 0.9909 0.9734 0.9991 0.9868 0.9719 0.9267 0.9010 0.9558 MSCNN ResNet50 0.9940 0.9530 0.9180 0.9630 0.9540 0.9670 0.9930 0.9550 0.9720 0.9330 0.9600 GCDN Root-ResNet18 0.9991 0.9983 0.9677 0.9916 0.9991 0.9759 0.9988 0.9412 0.9224 0.9636 0.9757 表 4 检测阈值0.75下的不同模型平均精确度对比

Table 4. Comparison of mean-average precision of different models under the 0.75 detection threshold

模型 平均准确率 (AP) 平均准确率(AP)($I_{ {\rm{{ou} } } }$=0.50:0.95) 平均召回率(AR)($I_{ {\rm{{ou} } } }$=0.50:0.95) $I_{ {\rm{{ou} } } }$=0.50:0.95 $I_{ {\rm{{ou} } } }$=0.50 $I_{ {\rm{{ou} } } }$=0.75 小目标 中目标 大目标 小目标 中目标 大目标 CenterNet 0.663 0.968 0.768 0.576 0.669 0.704 0.630 0.725 0.741 MSCNN 0.706 0.960 0.824 0.547 0.578 0.701 0.600 0.605 0.700 GCDN-woGC 0.679 0.973 0.775 0.572 0.690 0.750 0.639 0.744 0.786 GCDN 0.705 0.976 0.834 0.612 0.727 0.706 0.683 0.773 0.740 表 5 不同模型的平均检测时间对比

Table 5. Comparison of the average detection times with different models

模型 输入尺寸 时间/s RICAOD 400×400 2.89 MMDFN 400×400 0.75 YOLOv3 640×640 0.13 MSDN 600×800 0.21 GCDN 640×640 0.06 表 6 不同模型在数据集DOTA上的平均精确度对比

Table 6. Comparison of the mean-average precisions with different models in the DOTA dataset

模型 主干网络 平均检测精度(mAP) YOLOv2 GoogleNet 39.20 R-FCN ResNet101 52.58 Faster-RCNN ResNet101 60.46 SCFPN-scf ResNet101 75.22 GCDN Root-ResNet18 75.95 -

[1] 许夙晖, 慕晓冬, 柯冰, 等. 基于遥感影像的军事阵地动态监测技术研究[J]. 遥感技术与应用,2014,29(3):511-516.XU S H, MU X D, KE B, et al. Dynamic monitoring of military position based on remote sensing image[J]. Remote Sensing Technology and Application, 2014, 29(3): 511-516. (in Chinese) [2] VALERO S, CHANUSSOT J, BENEDIKTSSON J A, et al. Advanced directional mathematical morphology for the detection of the road network in very high resolution remote sensing images[J]. Pattern Recognition Letters, 2010, 31(10): 1120-1127. doi: 10.1016/j.patrec.2009.12.018 [3] DALAL N, TRIGGS B. Histograms of oriented gradients for human detection[C]. Proceedings of 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, IEEE, 2005: 886-893. [4] LOWE D G. Distinctive image features from scale-invariant keypoints[J]. International Journal of Computer Vision, 2004, 60(2): 91-110. doi: 10.1023/B:VISI.0000029664.99615.94 [5] LIU W, ANGUELOV D, ERHAN D, et al.. SSD: single shot multibox detector[C]. Proceedings of the 14th European Conference on Computer Vision, Springer, 2016: 21-37. [6] REDMON J, FARHADI A. Yolov3: an incremental improvement[J]. arXiv: 1804.02767, 2018. [7] 马永杰, 宋晓凤. 基于YOLO和嵌入式系统的车流量检测[J]. 液晶与显示,2019,34(6):613-618. doi: 10.3788/YJYXS20193406.0613MA Y J, SONG X F. Vehicle flow detection based on YOLO and embedded system[J]. Chinese Journal of Liquid Crystals and Displays, 2019, 34(6): 613-618. (in Chinese) doi: 10.3788/YJYXS20193406.0613 [8] LIN T Y, GOYAL P, GIRSHICK R, et al.. Focal loss for dense object detection[C]. Proceedings of 2017 IEEE International Conference on Computer Vision, IEEE, 2017: 2999-3007. [9] LAW H, DENG J. Cornernet: detecting objects as paired keypoints[C]. Proceedings of the 15th European Conference on Computer Vision, Springer, 2018: 765-781. [10] ZHOU X Y, WANG D Q, KRÄHENBÜHL P. Objects as points[J]. arXiv: 1904.07850, 2019. [11] XIAO B, WU H P, WEI Y CH. Simple baselines for human pose estimation and tracking[C]. Proceedings of the 15th European Conference on Computer Vision, Springer, 2018: 472-487. [12] LI K, CHENG G, BU SH H, et al. Rotation-insensitive and context-augmented object detection in remote sensing images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2018, 56(4): 2337-2348. doi: 10.1109/TGRS.2017.2778300 [13] MA W P, GUO Q Q, WU Y, et al. A novel multi-model decision fusion network for object detection in remote sensing images[J]. Remote Sensing, 2019, 11(7): 737. doi: 10.3390/rs11070737 [14] 梁华, 宋玉龙, 钱锋, 等. 基于深度学习的航空对地小目标检测[J]. 液晶与显示,2018,33(9):793-800. doi: 10.3788/YJYXS20183309.0793LIANG H, SONG Y L, QIAN F, et al. Detection of small target in aerial photography based on deep learning[J]. Chinese Journal of Liquid Crystals and Displays, 2018, 33(9): 793-800. (in Chinese) doi: 10.3788/YJYXS20183309.0793 [15] 姚群力, 胡显, 雷宏. 基于多尺度卷积神经网络的遥感目标检测研究[J]. 光学学报,2019,39(11):1128002. doi: 10.3788/AOS201939.1128002YAO Q L, HU X, LEI H. Object detection in remote sensing images using multiscale convolutional neural networks[J]. Acta Optica Sinica, 2019, 39(11): 1128002. (in Chinese) doi: 10.3788/AOS201939.1128002 [16] 邓志鹏, 孙浩, 雷琳, 等. 基于多尺度形变特征卷积网络的高分辨率遥感影像目标检测[J]. 测绘学报,2018,47(9):1216-1227.DENG ZH P, SUN H, LEI L, et al. Object detection in remote sensing imagery with multi-scale deformable convolutional networks[J]. Acta Geodaetica et Cartographica Sinica, 2018, 47(9): 1216-1227. (in Chinese) [17] 董潇潇, 何小海, 吴晓红, 等. 基于注意力掩模融合的目标检测算法[J]. 液晶与显示,2019,34(8):825-833. doi: 10.3788/YJYXS20193408.0825DONG X X, HE X H, WU X H, et al. Object detection algorithm based on attention mask fusion[J]. Chinese Journal of Liquid Crystals and Displays, 2019, 34(8): 825-833. (in Chinese) doi: 10.3788/YJYXS20193408.0825 [18] WANG CH, BAI X, WANG SH, et al. Multiscale visual attention networks for object detection in VHR remote sensing images[J]. IEEE Geoscience and Remote Sensing Letters, 2019, 16(2): 310-314. doi: 10.1109/LGRS.2018.2872355 [19] 左俊皓, 赵聪, 朱晓龙, 等. Faster-RCNN和Level-Set结合的高分遥感影像建筑物提取[J]. 液晶与显示,2019,34(4):439-447. doi: 10.3788/YJYXS20193404.0439ZUO J H, ZHAO C, ZHU X L, et al. High-resolution remote sensing image building extraction combined with Faster-RCNN and Level-Set[J]. Chinese Journal of Liquid Crystals and Displays, 2019, 34(4): 439-447. (in Chinese) doi: 10.3788/YJYXS20193404.0439 [20] 于渊博, 张涛, 郭立红, 等. 卫星视频运动目标检测算法[J]. 液晶与显示,2017,32(2):138-143. doi: 10.3788/YJYXS20173202.0138YU Y B, ZHANG T, GUO L H, et al. Moving objects detection on satellite video[J]. Chinese Journal of Liquid Crystals and Displays, 2017, 32(2): 138-143. (in Chinese) doi: 10.3788/YJYXS20173202.0138 [21] LIU W, RABINOVICH A, BERG A C. Parsenet: looking wider to see better[J]. arXiv: 1506.04579, 2015. [22] ZHANG H, DANA K, SHI J P, et al.. Context encoding for semantic segmentation[C]. Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, IEEE, 2018: 7151-7160. [23] HE K M, ZHANG X Y, REN SH Q, et al.. Deep residual learning for image recognition[C]. Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 2016: 770-778. [24] ZHU R, ZHANG SH F, WANG X B, et al.. ScratchDet: training single-shot object detectors from scratch[C]. Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, IEEE, 2019: 2263-2272. [25] LIN M, CHEN Q, YAN SH CH. Network in network[J]. arXiv: 1312.4400, 2013. [26] CHENG G, ZHOU P CH, HAN J W. Learning rotation-invariant convolutional neural networks for object detection in VHR optical remote sensing images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 54(12): 7405-7415. doi: 10.1109/TGRS.2016.2601622 [27] XIA G S, BAI X, DING J, et al.. DOTA: a large-scale dataset for object detection in aerial images[C]. Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, IEEE, 2018: 3974-3983. [28] ZEILER M D, FERGUS R. Visualizing and understanding convolutional networks[C]. Proceedings of the 13th European Conference on Computer Vision, Springer, 2014: 818-833. [29] CHEN K, WANG J Q, PANG J M, et al.. MMDetection: open MMLab detection toolbox and benchmark[J]. arXiv: 1906.07155v1, 2019. [30] CHEN CH Y, GONG W G, CHEN Y L, et al. Object detection in remote sensing images based on a scene-contextual feature pyramid network[J]. Remote Sensing, 2019, 11(3): 339. doi: 10.3390/rs11030339 -

下载:

下载: