
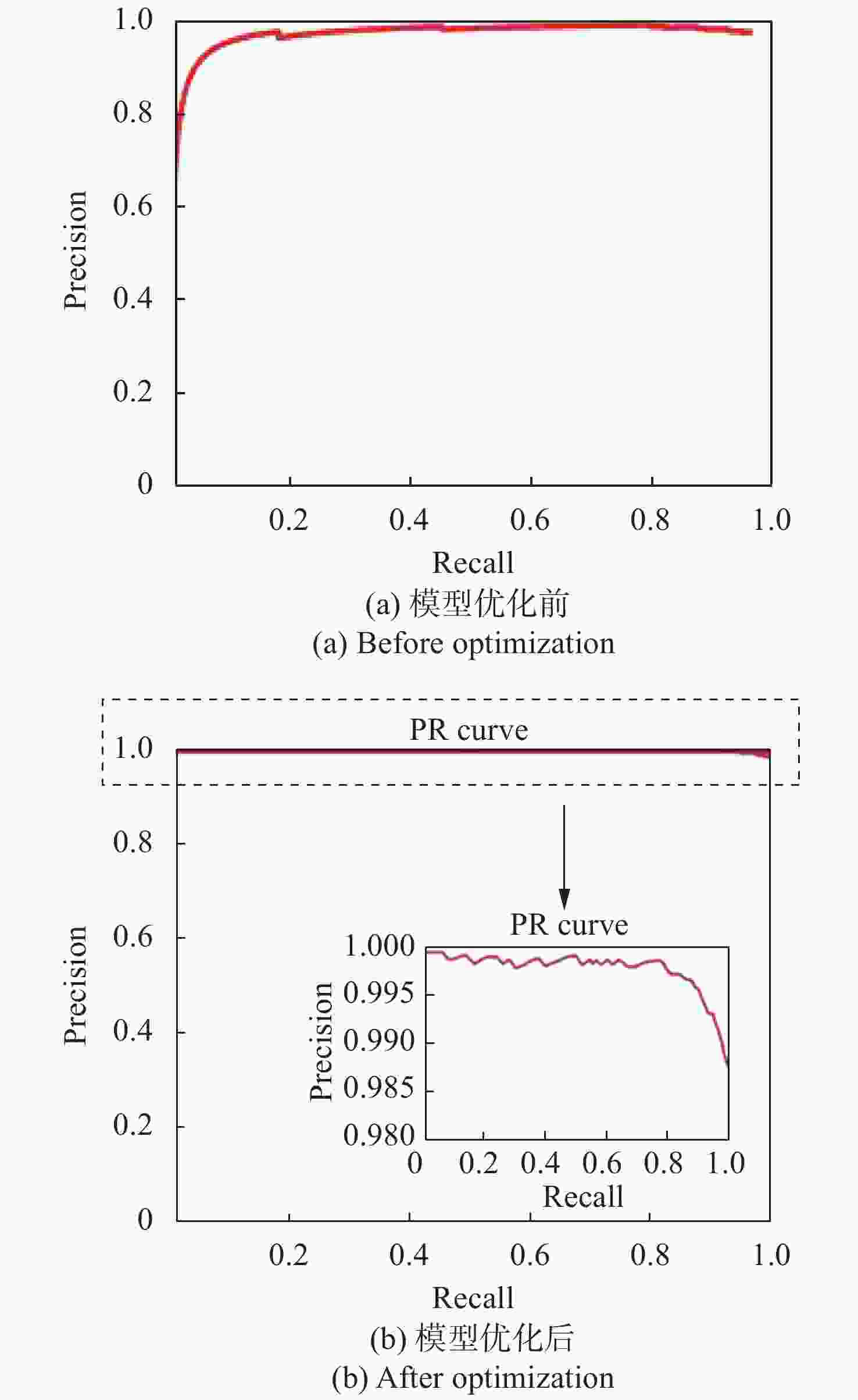

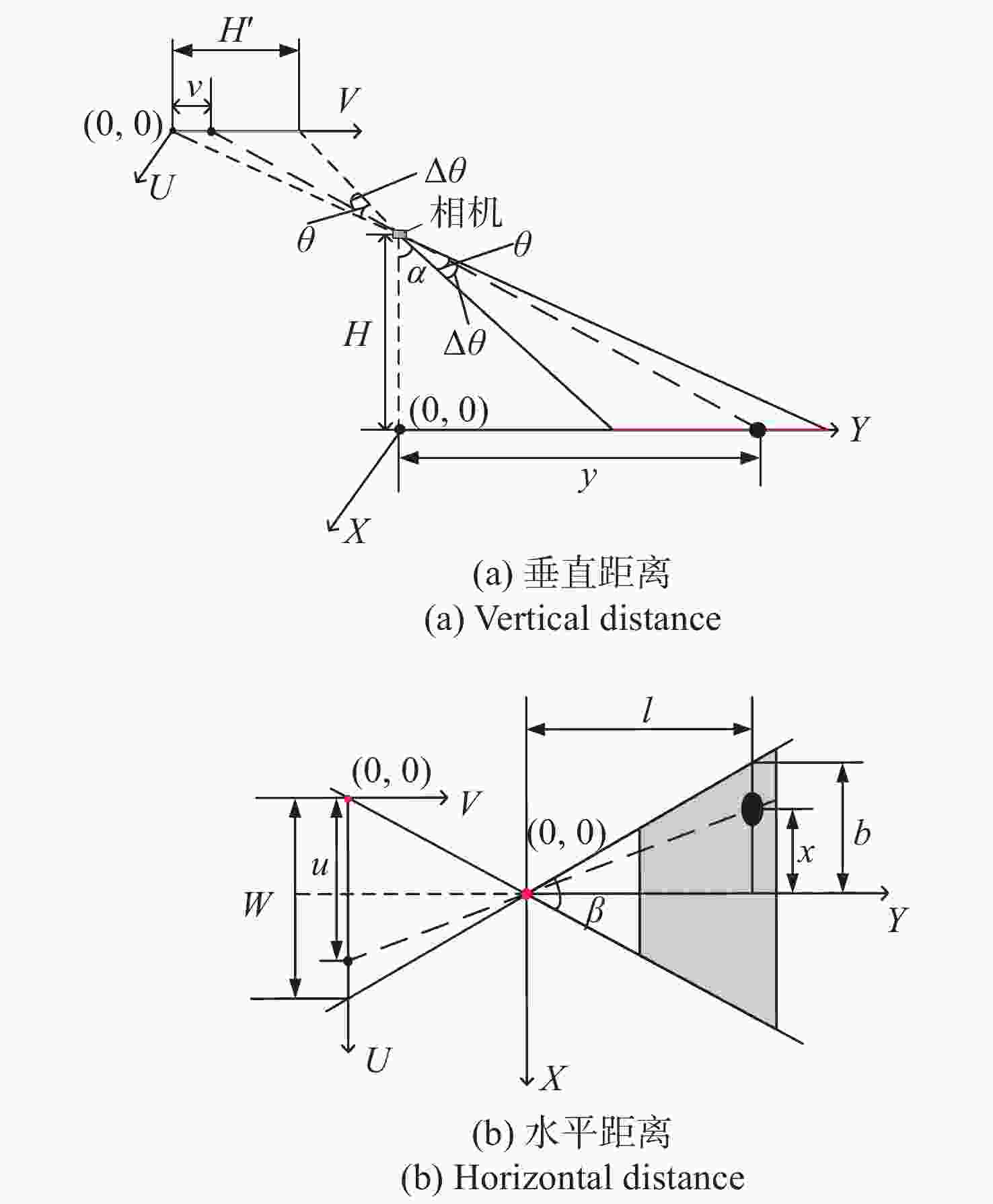

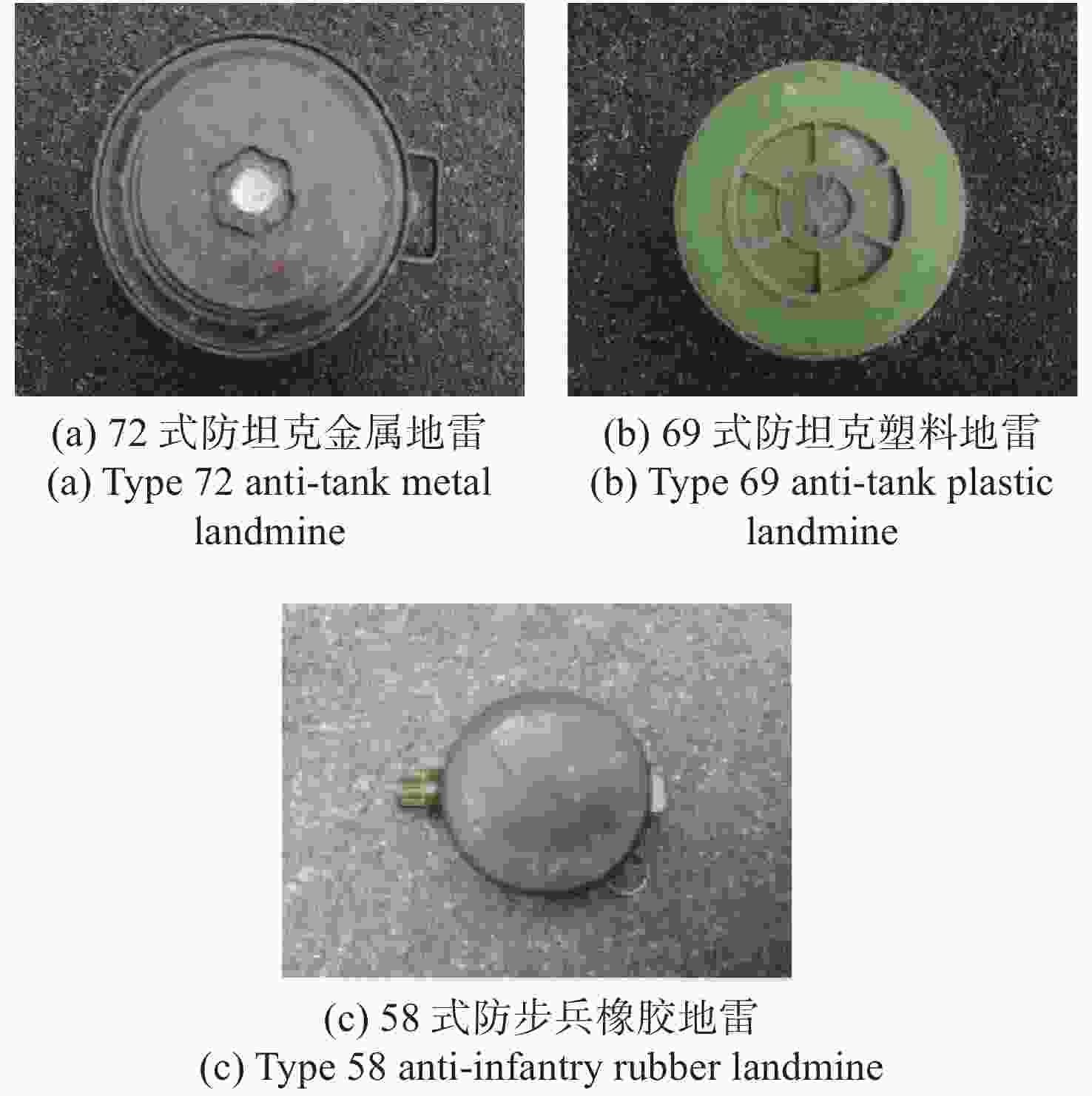

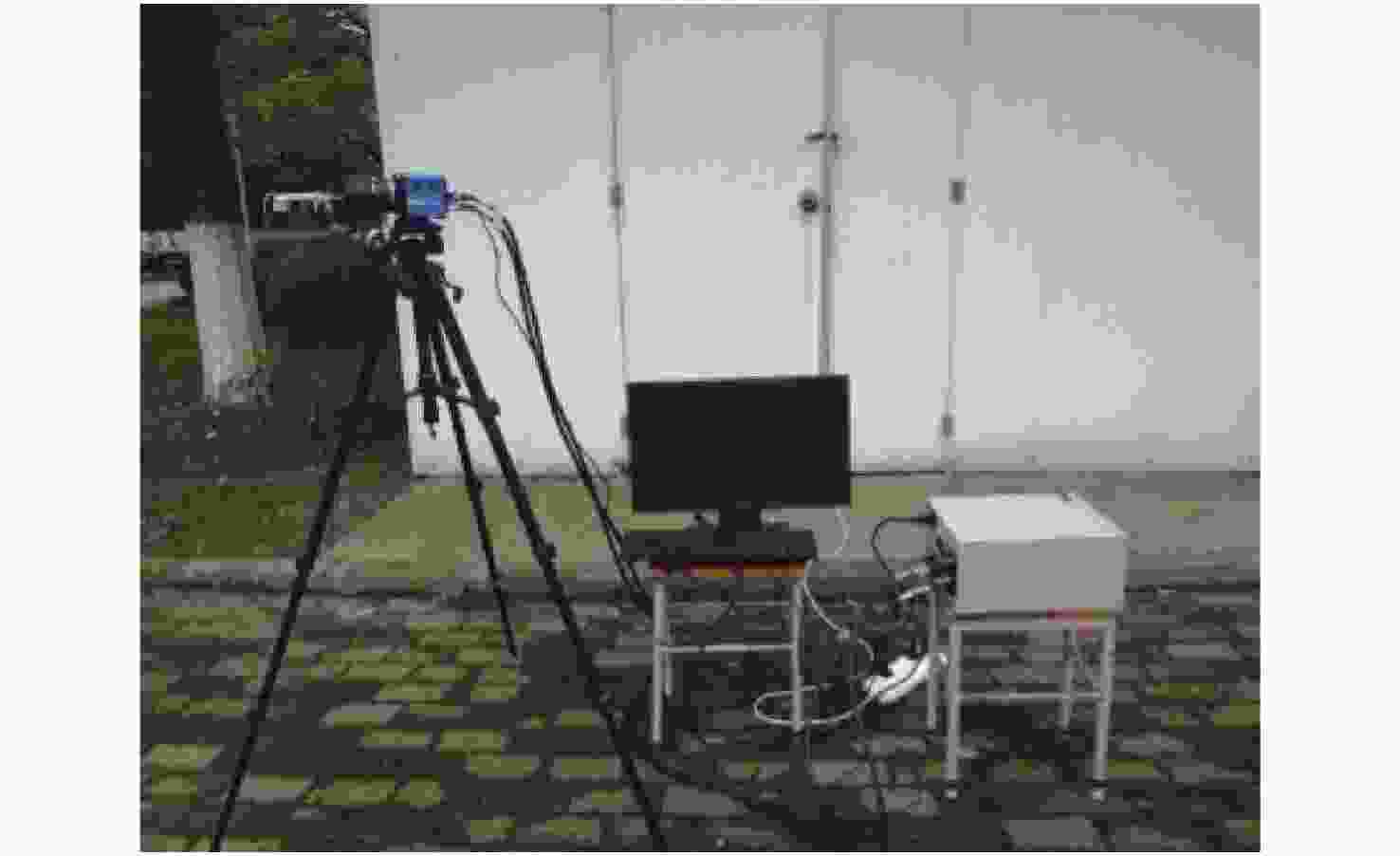

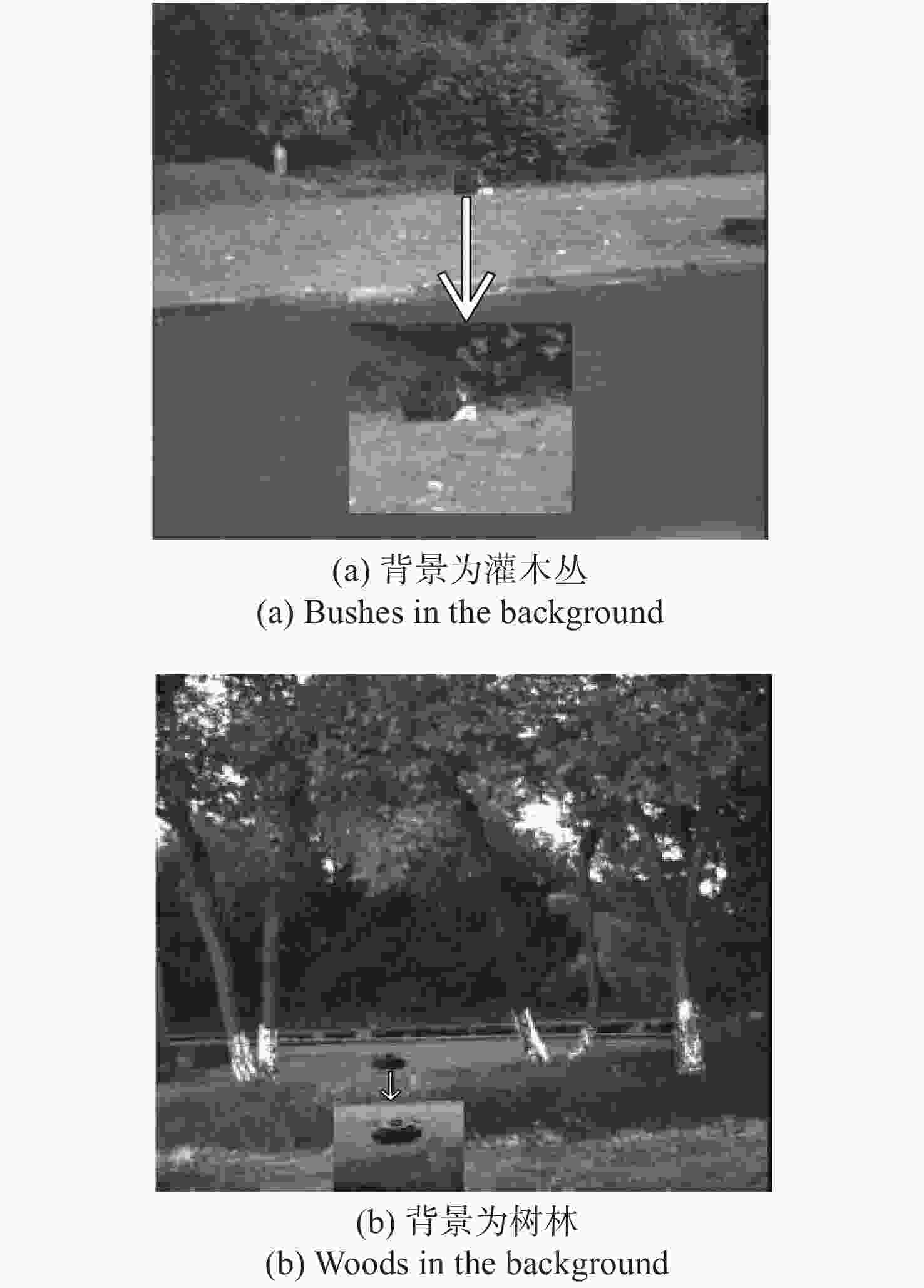

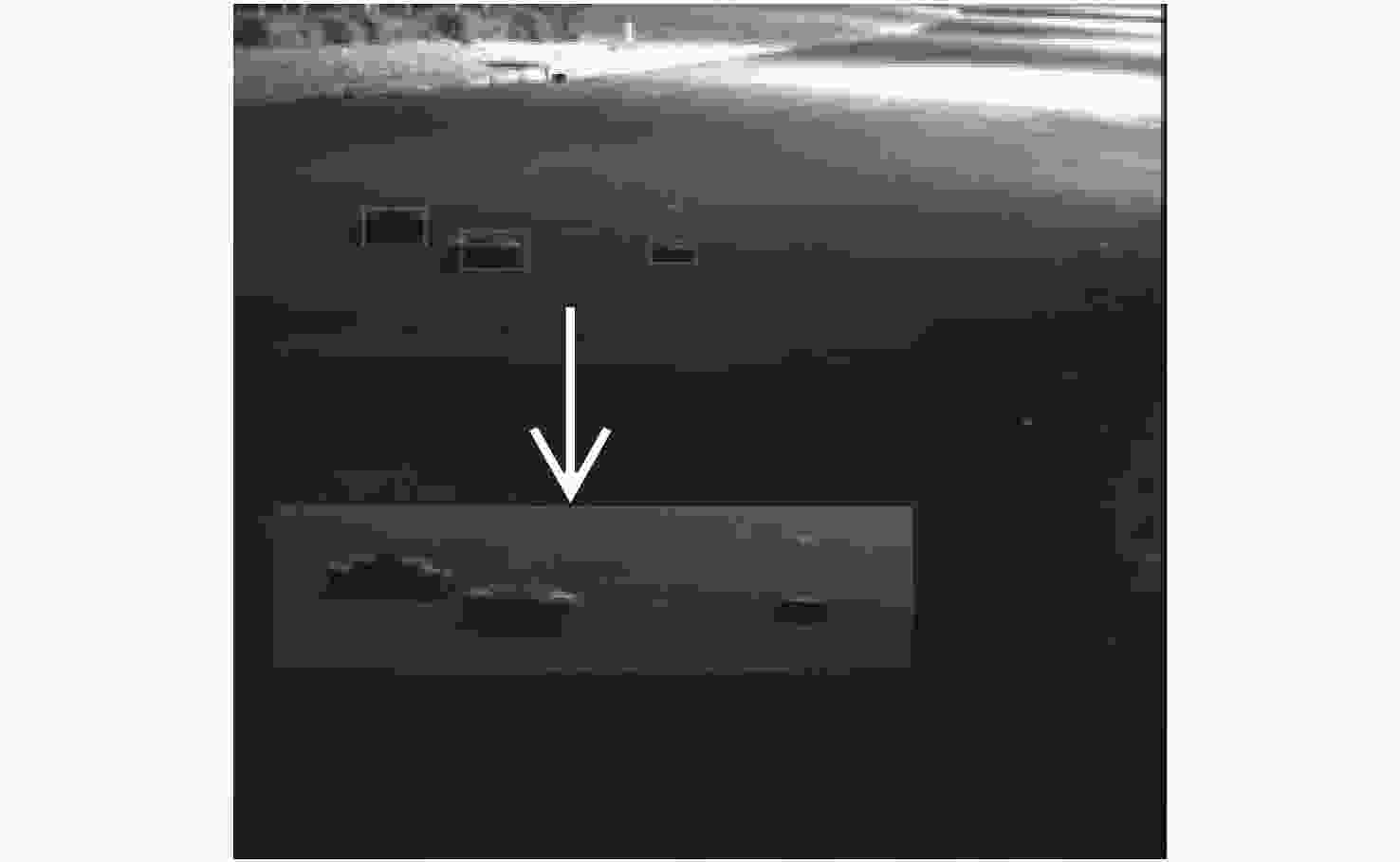

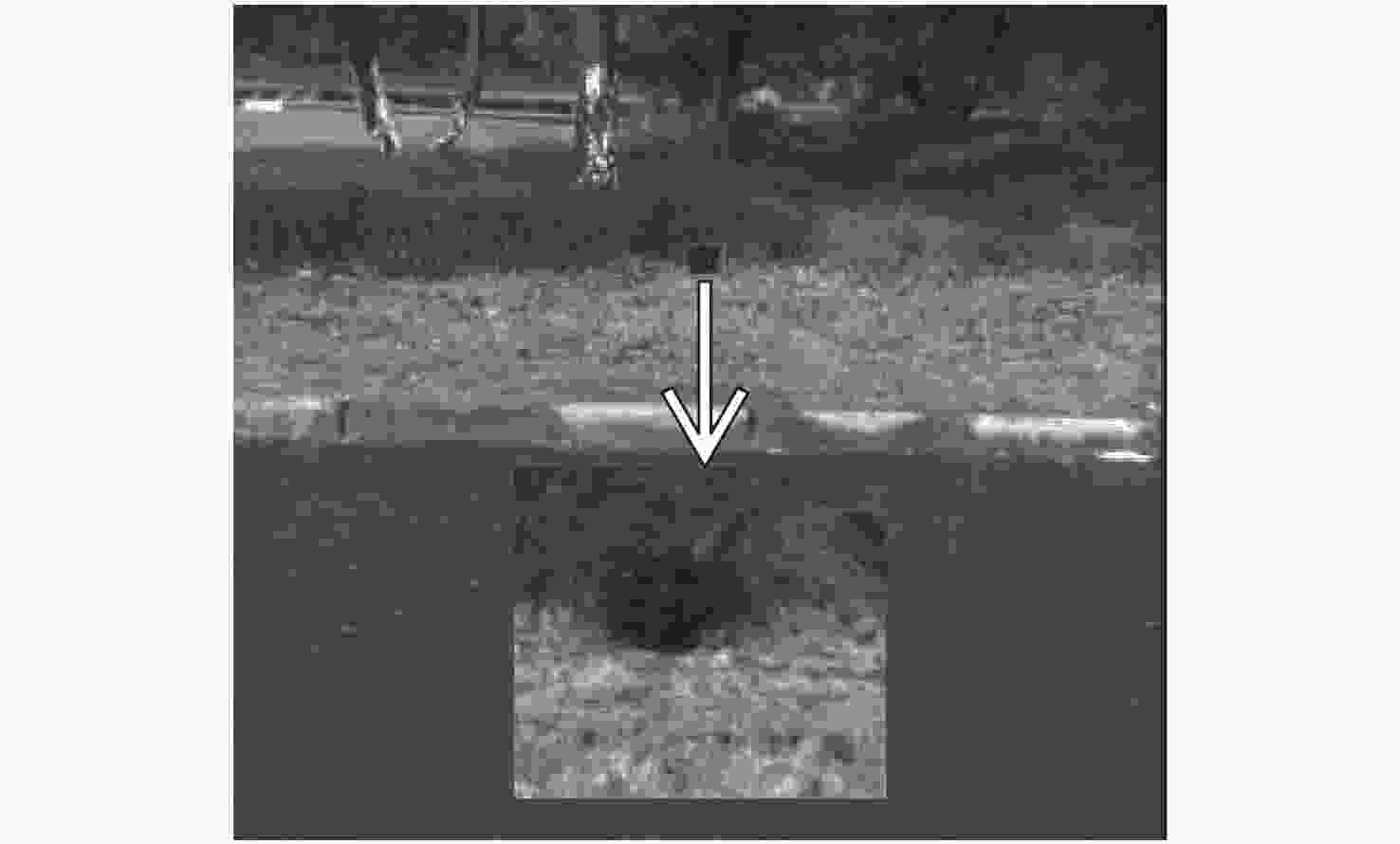

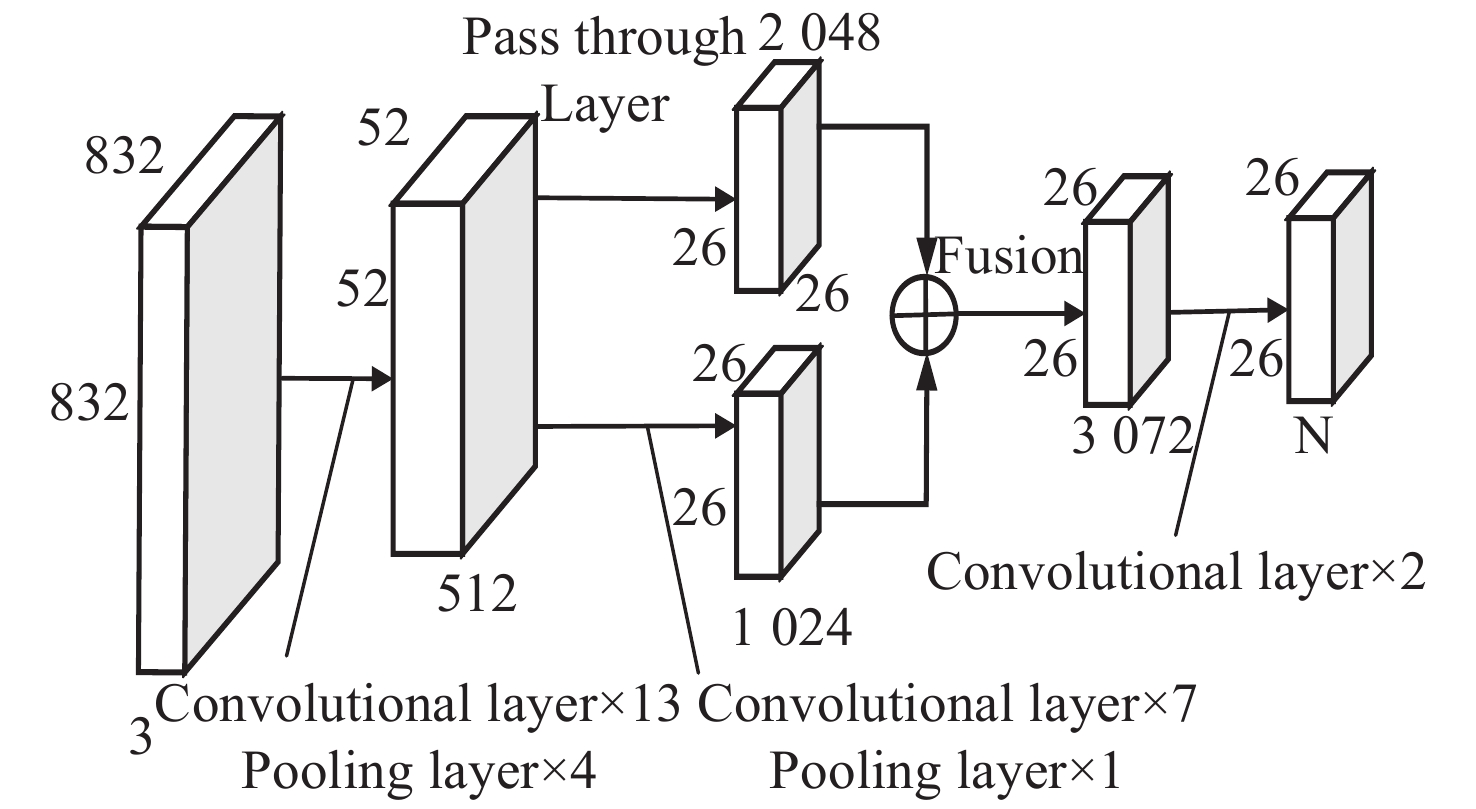

Abstract: This paper presents a machine-learning-based method for the night-vision intelligent detection of scatterable landmines. First, an intelligent detection network model for scatterable landmines is designed and optimized based on the YOLO series of algorithms. Second, a model for measuring the distance between a scatterable landmine and the detection equipment is developed from the similarity principle of geometric optical imaging. Finally, a night-vision intelligent detection system for scatterable landmines is built and tested experimentally. The experimental results show that the optimized detection network model achieves a precision of 98.97%, a recall of 99.22%, and a mean average precision (mAP) of 99.2%. Under the given experimental conditions, the optimized ranging model measures the distance to scatterable landmines with an error of ±10 cm. These results show that machine learning can be used for the intelligent, long-distance detection of scatterable landmines.
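The abstract describes the ranging model only as resting on the similarity principle of geometric optical imaging. The sketch below shows the textbook form of that principle. It is not the paper's model. It assumes an ideal pinhole camera, a known physical mine width, and the width of the detection box in pixels. Under those assumptions, similar triangles give D = f·W / w, where f is the focal length in pixels, W the real width and w the imaged width. The function name and the numbers in the example are hypothetical.

```python
def pinhole_distance(focal_length_px: float, real_width_cm: float, box_width_px: float) -> float:
    """Estimate camera-to-object distance with the pinhole similar-triangle relation.

    Hypothetical helper. Assumes an ideal pinhole camera with no lens distortion,
    so that W / D = w / f, i.e. D = f * W / w.
    """
    if focal_length_px <= 0 or box_width_px <= 0:
        raise ValueError("focal length and box width must be positive")
    return focal_length_px * real_width_cm / box_width_px


# Hypothetical values: 1000 px focal length, a 12 cm wide mine imaged 30 px wide.
print(pinhole_distance(1000, 12, 30))  # 400.0 (cm)
```

Under this model, an error of Δw pixels in the box width shifts the estimate by roughly D²·Δw / (f·W). For a fixed pixel error, the ranging error therefore grows with the square of the distance.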


Table 1. Training parameters

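| Parameter | Value |
|---|---|
| Batch size (images per weight update) | 64 |
| Subdivisions (mini-batches per batch during training) | 8 |
| Training image width | 832 |
| Training image height | 832 |
| Momentum | 0.9 |
| Weight decay | 0.0005 |
| Learning rate | 0.001 |
| Iterations | 100200 |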
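The paper does not name its training framework. The parameters in Table 1 do, however, map naturally onto the `[net]` section of a Darknet-style YOLO configuration. The sketch below assumes that mapping, using Darknet key names such as `subdivisions` and `max_batches`. It derives a few quantities that the table implies but does not state. The helper names are hypothetical.

```python
# Table 1 parameters, keyed with Darknet-style [net] option names.
# Assumption: the paper does not name its framework; these keys follow the
# Darknet .cfg convention commonly used with YOLO models.
TRAINING_PARAMS = {
    "batch": 64,            # images per weight update
    "subdivisions": 8,      # each batch is split into this many mini-batches
    "width": 832,           # network input width (pixels)
    "height": 832,          # network input height (pixels)
    "momentum": 0.9,
    "decay": 0.0005,        # weight decay
    "learning_rate": 0.001,
    "max_batches": 100200,  # number of training iterations
}


def images_per_forward_pass(params: dict) -> int:
    """Mini-batch size processed at once: batch / subdivisions."""
    return params["batch"] // params["subdivisions"]


def total_images_seen(params: dict) -> int:
    """Images processed over all iterations, counting repeats."""
    return params["batch"] * params["max_batches"]


def input_size_ok(params: dict, stride: int = 32) -> bool:
    """YOLOv2/v3-style backbones downsample by 32, so input sides should be multiples of it."""
    return params["width"] % stride == 0 and params["height"] % stride == 0


def to_net_section(params: dict) -> str:
    """Render the parameters as a Darknet-style [net] section."""
    lines = ["[net]"] + [f"{key}={value}" for key, value in params.items()]
    return "\n".join(lines)


print(images_per_forward_pass(TRAINING_PARAMS))  # 8
print(total_images_seen(TRAINING_PARAMS))        # 6412800
print(input_size_ok(TRAINING_PARAMS))            # True
print(to_net_section(TRAINING_PARAMS))
```

With a subdivision count of 8, only 8 images of 832×832 pixels need to fit in GPU memory at a time. Gradients are still accumulated over the full batch of 64 before each weight update.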

Table 2. Detection metrics on the test set

| Model | Instances | True mines | False mines | Recall | Precision | mAP |
|---|---|---|---|---|---|---|
| Before optimization | 387 | 374 | 11 | 96.64% | 97.14% | 95.286% |
| After optimization | 387 | 384 | 4 | 99.22% | 98.97% | 99.2% |
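The counts in Table 2 fit one reading: "True mines" are true positives and "False mines" are false positives out of 387 labelled mine instances. The table does not define its columns, so this is an interpretation. Under it, the recall and precision columns can be reproduced directly. The sketch uses a hypothetical helper name.

```python
def precision_recall(instances: int, true_positives: int, false_positives: int) -> tuple[float, float]:
    """Return (precision, recall) for a single-class detector.

    precision = TP / (TP + FP): share of detections that are real mines
    recall    = TP / instances: share of labelled mines that were detected
    """
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / instances
    return precision, recall


for label, tp, fp in [("before optimization", 374, 11), ("after optimization", 384, 4)]:
    p, r = precision_recall(387, tp, fp)
    print(f"{label}: precision {p:.2%}, recall {r:.2%}")
# before optimization: precision 97.14%, recall 96.64%
# after optimization: precision 98.97%, recall 99.22%
```

The mAP column cannot be recomputed this way. It requires the full precision-recall curve over all confidence thresholds, not the counts at a single operating point.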

Table 3. Experimental ranging data for scatterable landmines (before algorithm optimization)

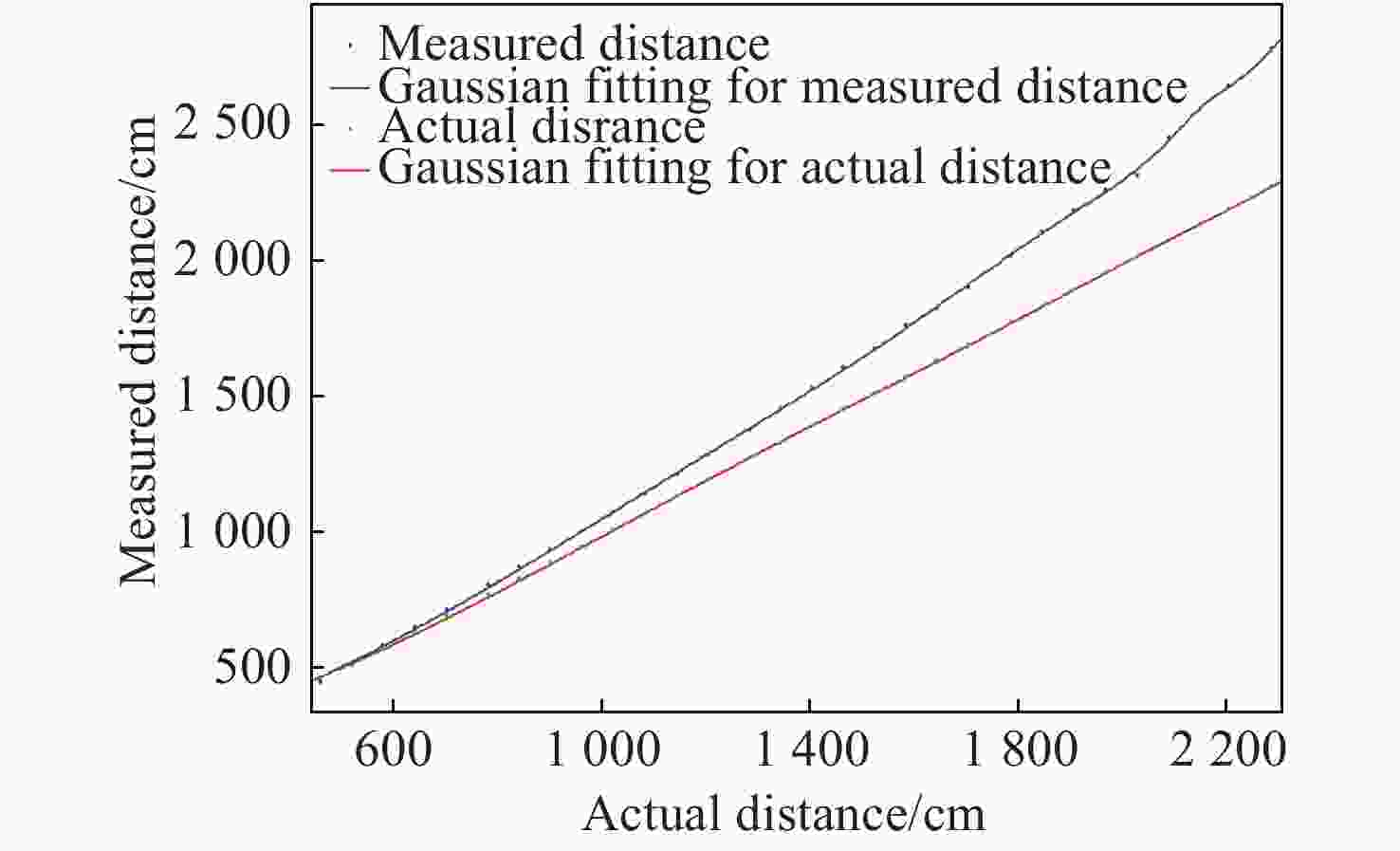

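| No. | Laser rangefinder distance/cm | Distance from pre-optimization algorithm/cm | Error/cm | Relative error |
|---|---|---|---|---|
| 1 | 461.3 | 465.9 | 4.6 | 0.99% |
| 2 | 582.0 | 595.3 | 13.3 | 2.28% |
| 3 | 641.5 | 662.4 | 20.9 | 3.26% |
| 4 | 782.6 | 818.0 | 35.4 | 4.52% |
| 5 | 960.5 | 1014.9 | 54.4 | 5.66% |
| 6 | 1083.8 | 1155.5 | 71.7 | 6.62% |
| 7 | 1284.8 | 1387.6 | 102.8 | 8.00% |
| 8 | 1343.4 | 1470.0 | 126.6 | 9.42% |
| 9 | 1464.5 | 1618.9 | 154.4 | 10.54% |
| 10 | 1584.1 | 1775.3 | 191.2 | 12.07% |
| 11 | 1786.5 | 2033.2 | 246.7 | 13.81% |
| 12 | 1844.4 | 2119.9 | 275.5 | 14.94% |
| 13 | 1906.7 | 2199.7 | 293.0 | 15.37% |
| 14 | 2088.4 | 2466.8 | 378.4 | 18.12% |
| 15 | 2147.9 | 2657.8 | 509.9 | 23.73% |
| 16 | 2285.4 | 2791.9 | 506.5 | 22.16% |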
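The error columns of Table 3 can be reproduced from the two distance columns. The sketch below does so, with hypothetical names. Before optimization, every estimate overshoots the laser reference. The relative error rises from about 1% at 4.6 m to over 20% beyond 21 m. The error is therefore neither a constant offset nor a constant scale factor.

```python
# Distances from Table 3 (cm): laser rangefinder reference vs. pre-optimization estimate.
LASER_CM = [461.3, 582.0, 641.5, 782.6, 960.5, 1083.8, 1284.8, 1343.4,
            1464.5, 1584.1, 1786.5, 1844.4, 1906.7, 2088.4, 2147.9, 2285.4]
BEFORE_CM = [465.9, 595.3, 662.4, 818.0, 1014.9, 1155.5, 1387.6, 1470.0,
             1618.9, 1775.3, 2033.2, 2119.9, 2199.7, 2466.8, 2657.8, 2791.9]


def ranging_errors(reference, measured):
    """Return (absolute error in cm, relative error in %) for each measurement."""
    errors = []
    for ref, meas in zip(reference, measured):
        abs_err = meas - ref  # positive when the algorithm overestimates
        errors.append((abs_err, 100.0 * abs_err / ref))
    return errors


rows = zip(LASER_CM, ranging_errors(LASER_CM, BEFORE_CM))
for i, (ref, (abs_err, rel_err)) in enumerate(rows, start=1):
    print(f"{i:2d}  {ref:7.1f} cm  {abs_err:+7.1f} cm  {rel_err:+6.2f}%")
```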

Table 4. Experimental ranging data for scatterable landmines after algorithm optimization

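| No. | Laser rangefinder distance/cm | Distance from optimized algorithm/cm | Error/cm | Relative error |
|---|---|---|---|---|
| 1 | 461.3 | 484.2 | 22.9 | 4.96% |
| 2 | 582.0 | 584.5 | 2.5 | 0.43% |
| 3 | 641.5 | 640.0 | −1.5 | −0.23% |
| 4 | 782.6 | 775.5 | −7.1 | −0.91% |
| 5 | 960.5 | 954.5 | −6.0 | −0.62% |
| 6 | 1083.8 | 1082.2 | −1.6 | −0.15% |
| 7 | 1284.8 | 1283.8 | −1.0 | −0.08% |
| 8 | 1343.4 | 1351.5 | 8.1 | 0.60% |
| 9 | 1464.5 | 1469.0 | 4.5 | 0.31% |
| 10 | 1584.1 | 1588.2 | 4.1 | 0.26% |
| 11 | 1786.5 | 1784.8 | −1.7 | −0.09% |
| 12 | 1844.4 | 1851.8 | 7.4 | 0.40% |
| 13 | 1906.7 | 1912.9 | 6.2 | 0.32% |
| 14 | 2088.4 | 2097.0 | 8.5 | 0.41% |
| 15 | 2147.9 | 2153.0 | 4.9 | 0.23% |
| 16 | 2285.4 | 2285.6 | 0.2 | 0.01% |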
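Table 4 can be summarized in a few lines. The sketch below uses the tabulated error column rather than recomputing it. For rows 14 and 15, the difference of the two rounded distance columns differs from the listed error by 0.1 to 0.2 cm, presumably because of rounding. The 10 cm tolerance comes from the abstract; the function name is hypothetical.

```python
# Tabulated errors from Table 4 (cm): optimized estimate minus laser reference.
AFTER_ERRORS_CM = [22.9, 2.5, -1.5, -7.1, -6.0, -1.6, -1.0, 8.1,
                   4.5, 4.1, -1.7, 7.4, 6.2, 8.5, 4.9, 0.2]


def summarize(errors_cm, tolerance_cm=10.0):
    """Return mean absolute error, max absolute error and count within ±tolerance."""
    abs_errors = [abs(e) for e in errors_cm]
    return {
        "mae_cm": sum(abs_errors) / len(abs_errors),
        "max_abs_cm": max(abs_errors),
        "within_tolerance": sum(1 for e in abs_errors if e <= tolerance_cm),
        "count": len(abs_errors),
    }


print(summarize(AFTER_ERRORS_CM))
```

On these values, the mean absolute error is about 5.5 cm. Fifteen of the 16 measurements lie within ±10 cm. The exception is the nearest one (461.3 cm), with an error of 22.9 cm.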
