Improved YOLOv4 for dangerous goods detection in X-ray inspection combined with atrous convolution and transfer learning


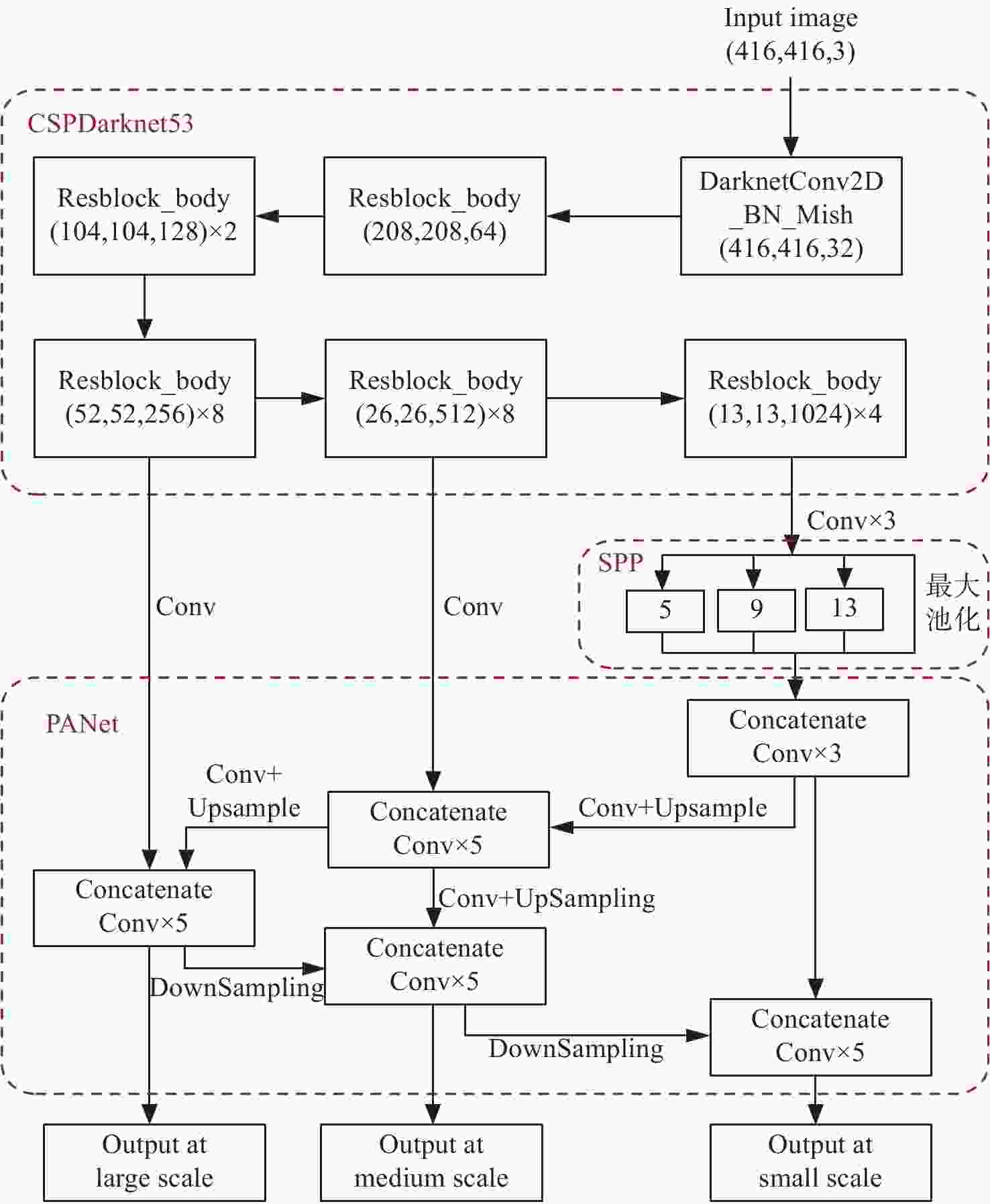

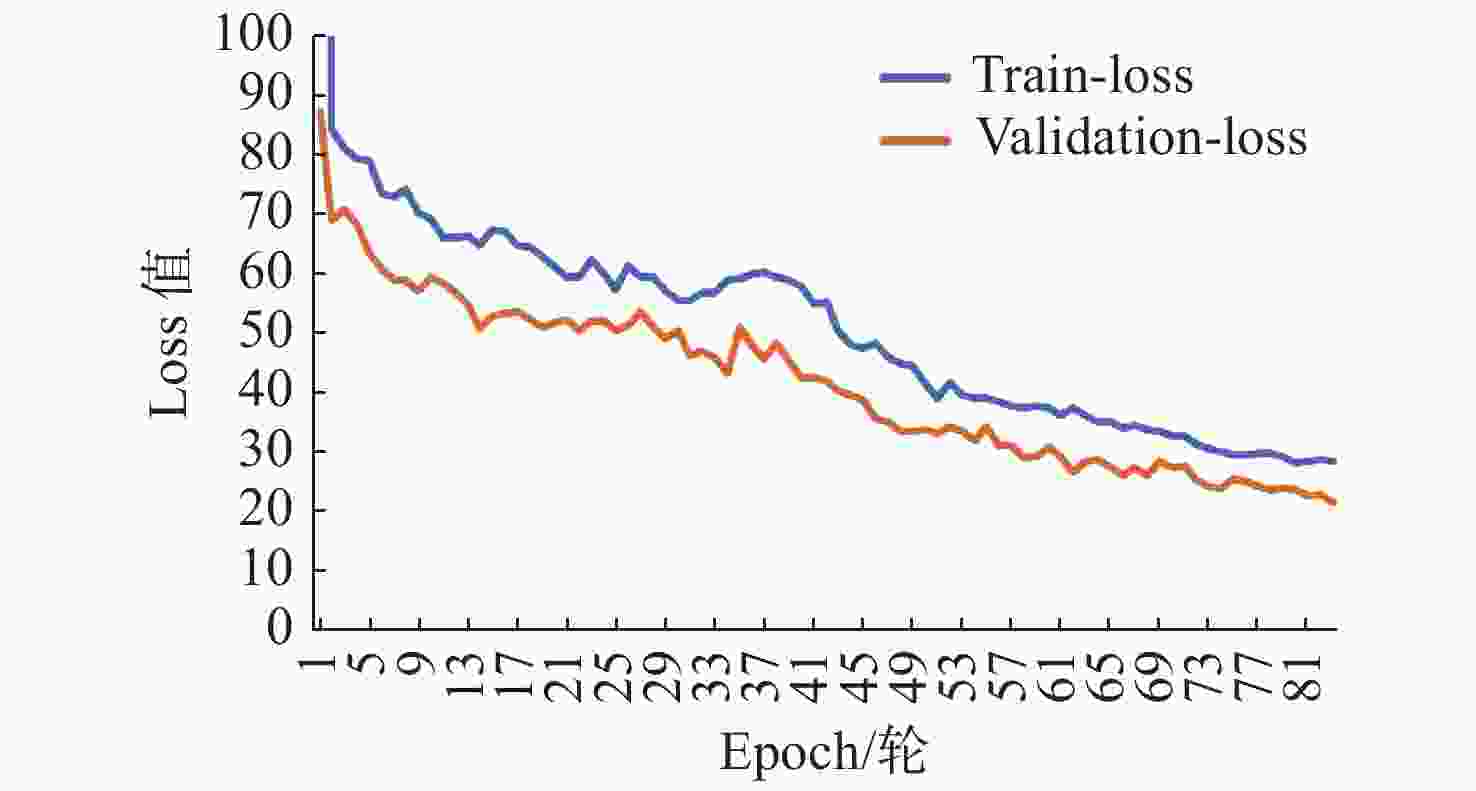

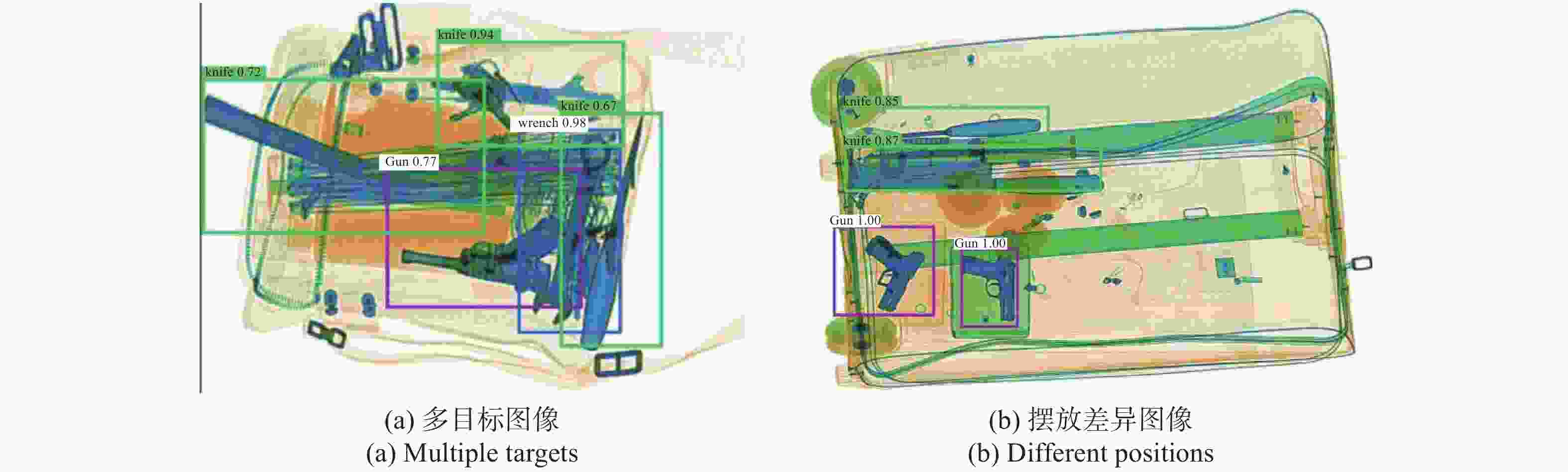

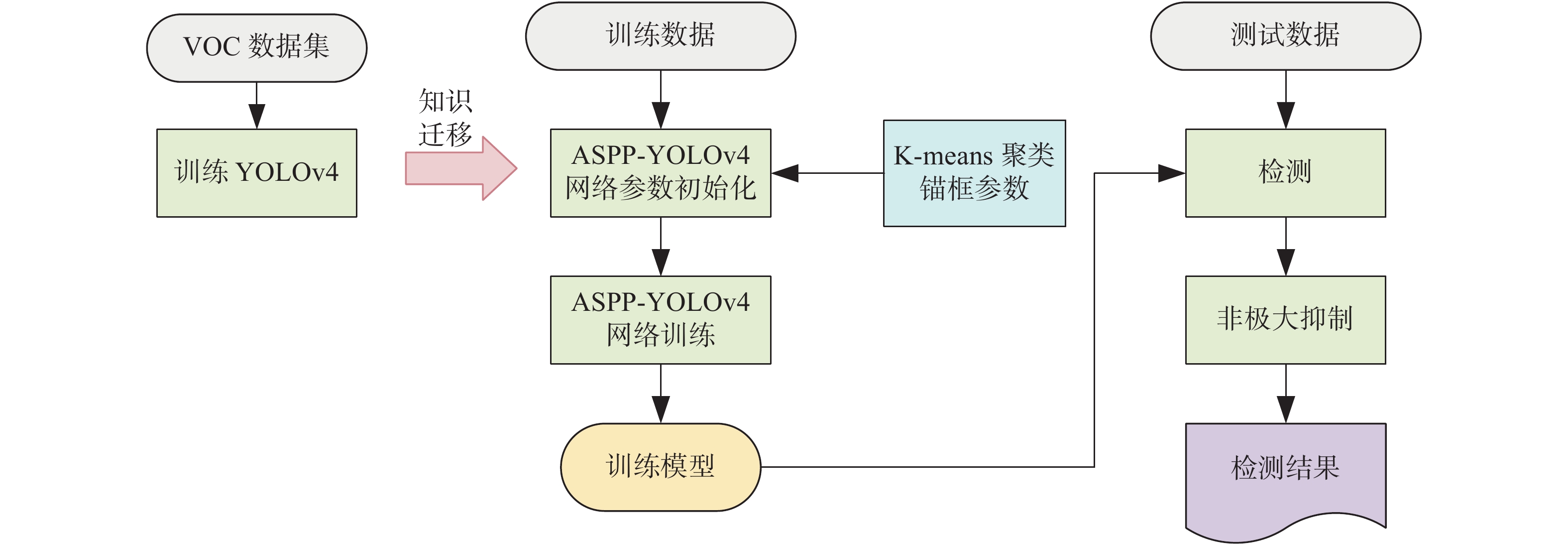

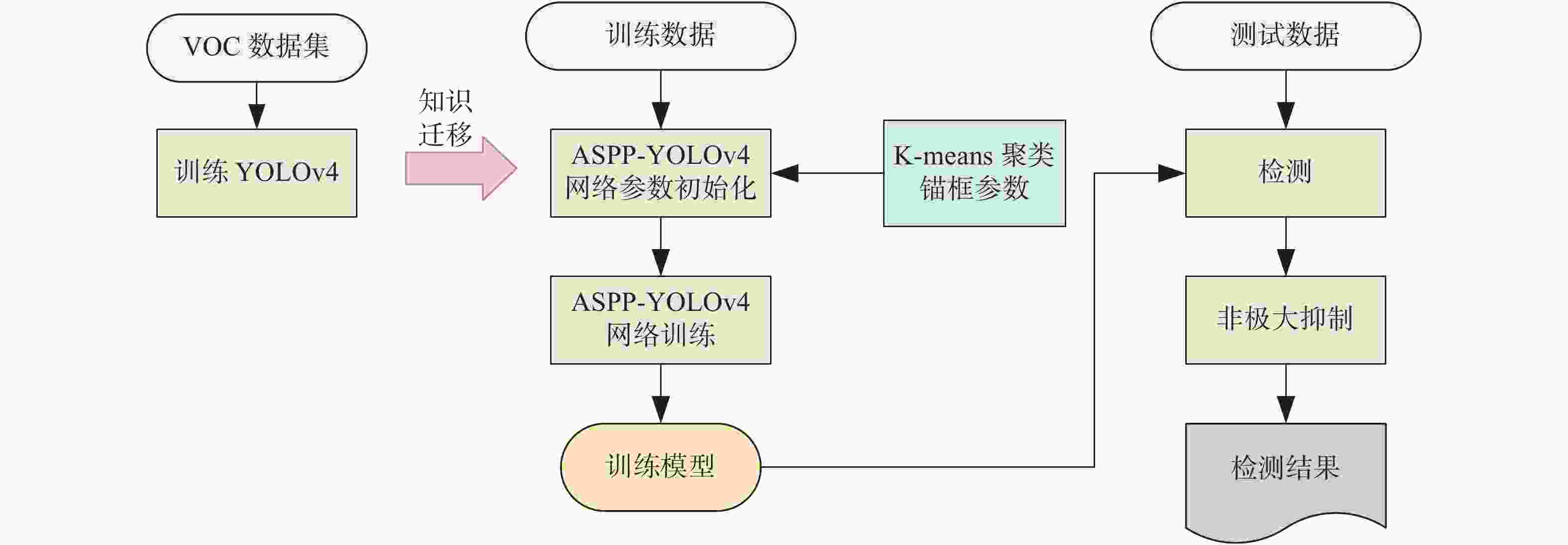

Abstract: X-ray security images have complex backgrounds, severe overlap and occlusion, and dangerous goods that vary widely in placement and shape, all of which make detection difficult. To address these problems, this paper improves the YOLOv4 network structure with atrous convolution by adding an Atrous Spatial Pyramid Pooling (ASPP) module, which enlarges the receptive field and aggregates multi-scale context information. The K-means clustering method is then used to generate initial anchor boxes better suited to dangerous goods detection in X-ray security images. During training, cosine annealing is used to schedule the learning rate, which further accelerates convergence and improves detection accuracy. Experimental results show that the proposed ASPP-YOLOv4 achieves an mAP of 85.23% on the SIXray dataset. The method effectively reduces the false detection rate of dangerous goods in X-ray security images and improves the detection of small dangerous objects.
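The abstract's claim that atrous convolution enlarges the receptive field can be illustrated with a small calculation. A k×k kernel with dilation rate d spans k + (k − 1)(d − 1) input positions per side while keeping the same number of weights. The sketch below assumes 3×3 kernels and the dilation rates 1, 6, 12 and 18; these rates are illustrative assumptions and are not taken from the paper.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Side length of the input window spanned by a dilated kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


# Hypothetical dilation rates for the parallel ASPP branches (an assumption,
# not values reported by the paper).
ASSUMED_RATES = (1, 6, 12, 18)

spans = {rate: effective_kernel_size(3, rate) for rate in ASSUMED_RATES}
for rate, span in spans.items():
    print(f"3x3 kernel, dilation {rate:>2}: spans {span}x{span} input positions")
```

Each branch still uses nine weights per channel pair, so running branches with different rates in parallel gathers context at several scales without growing the kernel itself.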


Key words:

- X-ray security images
- YOLOv4
- atrous convolution
- spatial pyramid pooling
- cosine annealing


Table 1. Anchor box calculation results

| Feature map | Receptive field | Anchors |
| --- | --- | --- |
| 13×13 | Large | (124×111), (171×61), (200×151) |
| 26×26 | Medium | (75×34), (82×188), (93×75) |
| 52×52 | Small | (24×78), (50×67), (62×111) |
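Anchor boxes such as those in Table 1 are typically obtained by clustering the widths and heights of the ground-truth boxes with K-means, using 1 − IoU as the distance. The following is a minimal sketch of that idea, not the authors' implementation: the box data is synthetic, the centroid update uses the mean (some implementations use the median), and sorting the anchors by area is an assumption of this sketch.

```python
import random


def iou_wh(box, anchor):
    """IoU of two boxes given as (w, h) and aligned at a common corner."""
    inter = min(box[0], anchor[0]) * min(box[1], anchor[1])
    union = box[0] * box[1] + anchor[0] * anchor[1] - inter
    return inter / union


def kmeans_anchors(boxes, k, iters=100, seed=0):
    """Cluster (w, h) pairs with distance 1 - IoU; return anchors sorted by area."""
    rng = random.Random(seed)
    anchors = rng.sample(boxes, k)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            # Highest IoU is the same as smallest 1 - IoU distance.
            best = max(range(k), key=lambda i: iou_wh(box, anchors[i]))
            clusters[best].append(box)
        new_anchors = []
        for i, members in enumerate(clusters):
            if not members:
                new_anchors.append(anchors[i])  # keep an empty cluster's anchor
                continue
            w = sum(m[0] for m in members) / len(members)
            h = sum(m[1] for m in members) / len(members)
            new_anchors.append((w, h))
        if new_anchors == anchors:
            break
        anchors = new_anchors
    return sorted(anchors, key=lambda a: a[0] * a[1])


def mean_best_iou(boxes, anchors):
    """Average IoU between each box and its best-matching anchor."""
    return sum(max(iou_wh(b, a) for a in anchors) for b in boxes) / len(boxes)


# Synthetic (w, h) pairs standing in for labelled boxes (hypothetical data).
data_rng = random.Random(42)
boxes = [(data_rng.uniform(20, 210), data_rng.uniform(30, 190)) for _ in range(500)]

anchors = kmeans_anchors(boxes, k=9)
score = mean_best_iou(boxes, anchors)
for w, h in anchors:
    print(f"({w:.0f}x{h:.0f})")
print(f"mean best IoU: {score:.3f}")
```

With nine anchors, three would be assigned to each of the 13×13, 26×26 and 52×52 feature maps.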

Table 2. Cosine annealing decay process

Algorithm: cosine annealing decay

Input: number of training epochs $E_{\rm p}$, batch size $B_{\rm s}$, warmup epochs $w_{\rm epoch}$, preset learning rate $\eta_{\rm base}$, maximum learning rate $\eta_{\max}$, minimum learning rate $\eta_{\min}$, number of training samples $S_{\rm c}$

Output: current training learning rate $\eta_t$

Steps:

(1) Initialize the total number of steps $Steps_{\rm total} = (E_{\rm p} \times S_{\rm c}) / B_{\rm s}$ and the number of warmup steps $Steps_{\rm warmup} = (w_{\rm epoch} \times S_{\rm c}) / B_{\rm s}$.

(2) Repeat, after each restart:

- Update the current step count $Steps_{\rm global}$ and record the current learning rate.
- Update the learning rate. If $Steps_{\rm global} < Steps_{\rm warmup}$, compute the linearly increasing learning rate, where $\eta_{\rm warmup}$ is the learning rate at the start of warmup:

  $\eta_t = \dfrac{\eta_{\rm base} - \eta_{\rm warmup}}{Steps_{\rm warmup}} \times Steps_{\rm global} + \eta_{\rm warmup}$

  Otherwise, compute the cosine-annealed learning rate:

  $\eta_t = \dfrac{1}{2} \times \eta_{\rm base} \times \left( 1 + \cos\left( \pi \times \dfrac{Steps_{\rm global} - Steps_{\rm warmup}}{Steps_{\rm total} - Steps_{\rm warmup}} \right) \right)$

- Keep the learning rate from falling below the minimum: $\eta_t = \max(\eta_t, \eta_{\min})$
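The schedule in Table 2 can be sketched in a few lines. The code below is an illustration under stated assumptions, not the authors' training code: it uses the standard half-cosine form 0.5 · η_base · (1 + cos(π · progress)) after warmup and floors the result at the minimum learning rate. The batch size, epoch counts and learning rates follow the frozen-backbone settings in Table 3, with the maximum learning rate used as η_base; `SAMPLE_COUNT` and `LR_WARMUP` are hypothetical values chosen for the example.

```python
import math


def warmup_cosine_lr(step, total_steps, warmup_steps, lr_base, lr_warmup, lr_min):
    """Learning rate at a global step: linear warmup, then cosine decay floored at lr_min."""
    if step < warmup_steps:
        # Linear ramp from lr_warmup (step 0) to lr_base (end of warmup).
        slope = (lr_base - lr_warmup) / warmup_steps
        return slope * step + lr_warmup
    # Fraction of the post-warmup schedule completed, in [0, 1].
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    lr = 0.5 * lr_base * (1.0 + math.cos(math.pi * progress))
    return max(lr, lr_min)


# Frozen-backbone settings from Table 3.
EPOCHS, BATCH_SIZE, WARMUP_EPOCHS = 50, 8, 10
LR_BASE, LR_MIN = 1e-3, 1e-6
# Hypothetical values, not given in the source.
SAMPLE_COUNT = 800
LR_WARMUP = 1e-5

total_steps = EPOCHS * SAMPLE_COUNT // BATCH_SIZE
warmup_steps = WARMUP_EPOCHS * SAMPLE_COUNT // BATCH_SIZE

schedule = [
    warmup_cosine_lr(s, total_steps, warmup_steps, LR_BASE, LR_WARMUP, LR_MIN)
    for s in range(total_steps + 1)
]
for s in (0, warmup_steps, (total_steps + warmup_steps) // 2, total_steps):
    print(f"step {s:>5}: lr = {schedule[s]:.2e}")
```

The learning rate rises linearly during the first 10 epochs, peaks at the base rate, and then decays along a half cosine until it reaches the floor at the final step.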

Table 3. Design of the training hyperparameters

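| Stage | Name | Value |
| --- | --- | --- |
| Backbone frozen | batch_size | 8 |
| Backbone frozen | epoch | 50 |
| Backbone frozen | Maximum learning rate | 1e-3 |
| Backbone frozen | Minimum learning rate | 1e-6 |
| Backbone frozen | Warmup_epoch | 10 |
| Backbone unfrozen | batch_size | 2 |
| Backbone unfrozen | epoch | 50 |
| Backbone unfrozen | Maximum learning rate | 1e-4 |
| Backbone unfrozen | Minimum learning rate | 1e-6 |
| Backbone unfrozen | Warmup_epoch | 10 |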

Table 4. Comparison of AP for different networks (%)

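| Method | AP: Gun | AP: Knife | AP: Wrench | AP: Pliers | AP: Scissors | mAP |
| --- | --- | --- | --- | --- | --- | --- |
| YOLOv3 | 93.18 | 78.00 | 68.55 | 79.69 | 76.97 | 79.28 |
| M2Det | 95.49 | 75.70 | 70.17 | 83.00 | 82.96 | 81.47 |
| SSD | 94.91 | 77.87 | 74.82 | 84.51 | 82.69 | 82.96 |
| YOLOv4 | 94.40 | 81.69 | 77.38 | 84.50 | 77.55 | 83.11 |
| ASPP-YOLOv4 | 95.78 | 81.39 | 77.84 | 87.36 | 83.76 | 85.23 |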
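The mAP column in Table 4 is the mean of the five per-class AP values. As a quick check, the sketch below recomputes it from the rounded table entries and lists the per-class change from YOLOv4 to ASPP-YOLOv4. Because the inputs are already rounded to two decimals, agreement with the reported mAP is only expected to within about 0.01.

```python
CLASSES = ["Gun", "Knife", "Wrench", "Pliers", "Scissors"]

# Per-class AP (%) copied from Table 4.
AP = {
    "YOLOv3": [93.18, 78.00, 68.55, 79.69, 76.97],
    "M2Det": [95.49, 75.70, 70.17, 83.00, 82.96],
    "SSD": [94.91, 77.87, 74.82, 84.51, 82.69],
    "YOLOv4": [94.40, 81.69, 77.38, 84.50, 77.55],
    "ASPP-YOLOv4": [95.78, 81.39, 77.84, 87.36, 83.76],
}
# mAP (%) as reported in Table 4.
REPORTED_MAP = {
    "YOLOv3": 79.28,
    "M2Det": 81.47,
    "SSD": 82.96,
    "YOLOv4": 83.11,
    "ASPP-YOLOv4": 85.23,
}

computed_map = {name: sum(aps) / len(aps) for name, aps in AP.items()}
for name, value in computed_map.items():
    print(f"{name:<12} computed {value:.2f}  reported {REPORTED_MAP[name]:.2f}")

# Per-class AP change from YOLOv4 to ASPP-YOLOv4, in percentage points.
deltas = {
    cls: round(new - old, 2)
    for cls, old, new in zip(CLASSES, AP["YOLOv4"], AP["ASPP-YOLOv4"])
}
print(deltas)
```

In these figures the largest per-class gain is for Scissors, while Knife is the only class where AP drops slightly.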

Table 5. The performance of ASPP-YOLOv4

| Class | AP | Precision | Recall | F1-Measure |
| --- | --- | --- | --- | --- |
| Gun | 95.78% | 98.44% | 85.32% | 0.91 |
| Knife | 81.39% | 91.48% | 67.40% | 0.78 |
| Wrench | 77.84% | 81.61% | 71.05% | 0.76 |
| Pliers | 87.36% | 93.15% | 75.79% | 0.84 |
| Scissors | 83.76% | 86.28% | 76.23% | 0.81 |
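The F1-Measure column in Table 5 follows from the precision and recall columns through the harmonic mean, F1 = 2PR / (P + R). The short sketch below recomputes it from the table's rounded percentages; the dictionary names are chosen for this example only.

```python
# (precision %, recall %) per class, copied from Table 5.
PRECISION_RECALL = {
    "Gun": (98.44, 85.32),
    "Knife": (91.48, 67.40),
    "Wrench": (81.61, 71.05),
    "Pliers": (93.15, 75.79),
    "Scissors": (86.28, 76.23),
}
# F1-Measure as reported in Table 5.
REPORTED_F1 = {"Gun": 0.91, "Knife": 0.78, "Wrench": 0.76, "Pliers": 0.84, "Scissors": 0.81}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given as fractions in [0, 1])."""
    return 2.0 * precision * recall / (precision + recall)


computed_f1 = {
    cls: round(f1_score(p / 100.0, r / 100.0), 2)
    for cls, (p, r) in PRECISION_RECALL.items()
}
for cls, value in computed_f1.items():
    print(f"{cls:<9} F1 = {value:.2f} (reported {REPORTED_F1[cls]:.2f})")
```

Precision is well above recall for every class, so the F1 values are pulled toward the lower recall figures.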

Table 6. Comparison of YOLOv4 performance before and after improvement

| Method | mAP | Precision | Recall | F1-Measure |
| --- | --- | --- | --- | --- |
| YOLOv4 | 83.11% | 90.35% | 73.00% | 0.80 |
| ASPP-YOLOv4 | 85.23% | 90.20% | 75.16% | 0.82 |

