An improved algorithm for monocular camera edge spectrum based ranging by defocused images


摘要:

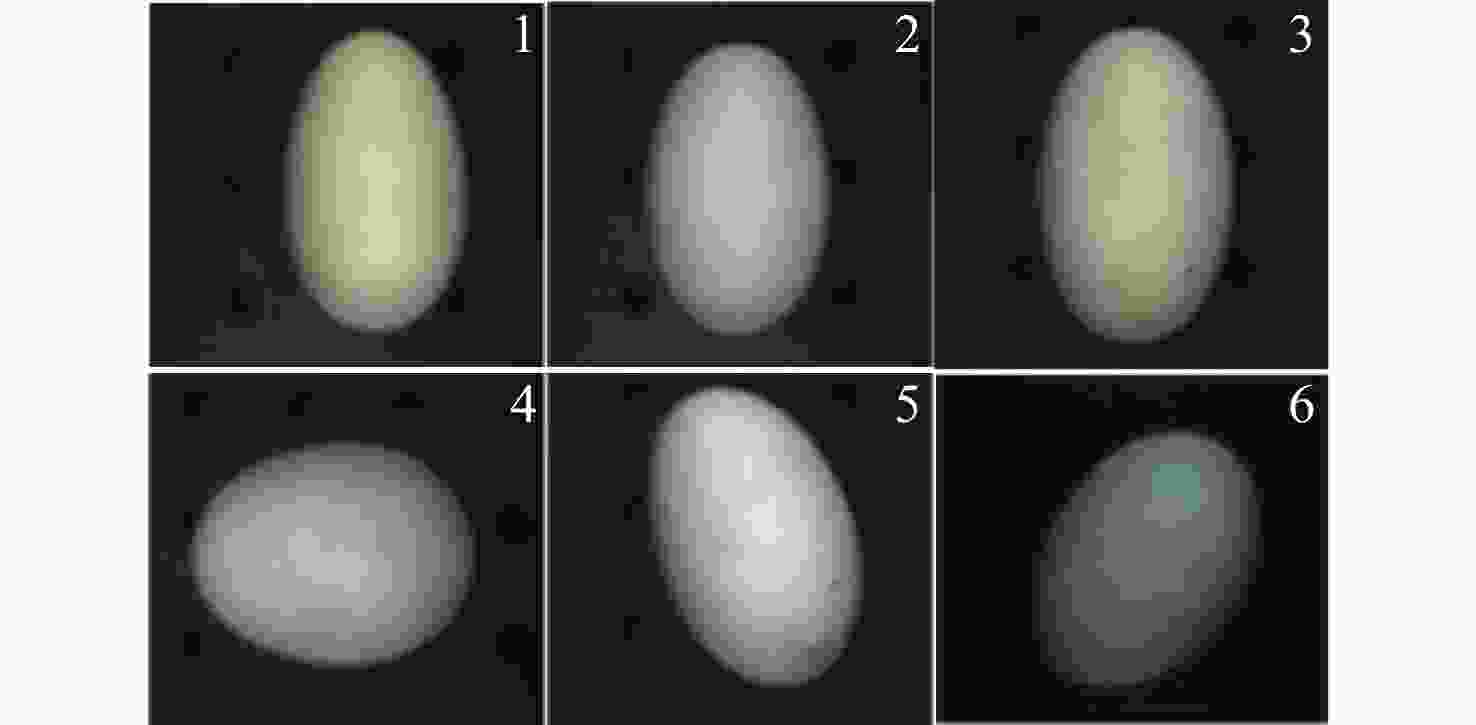

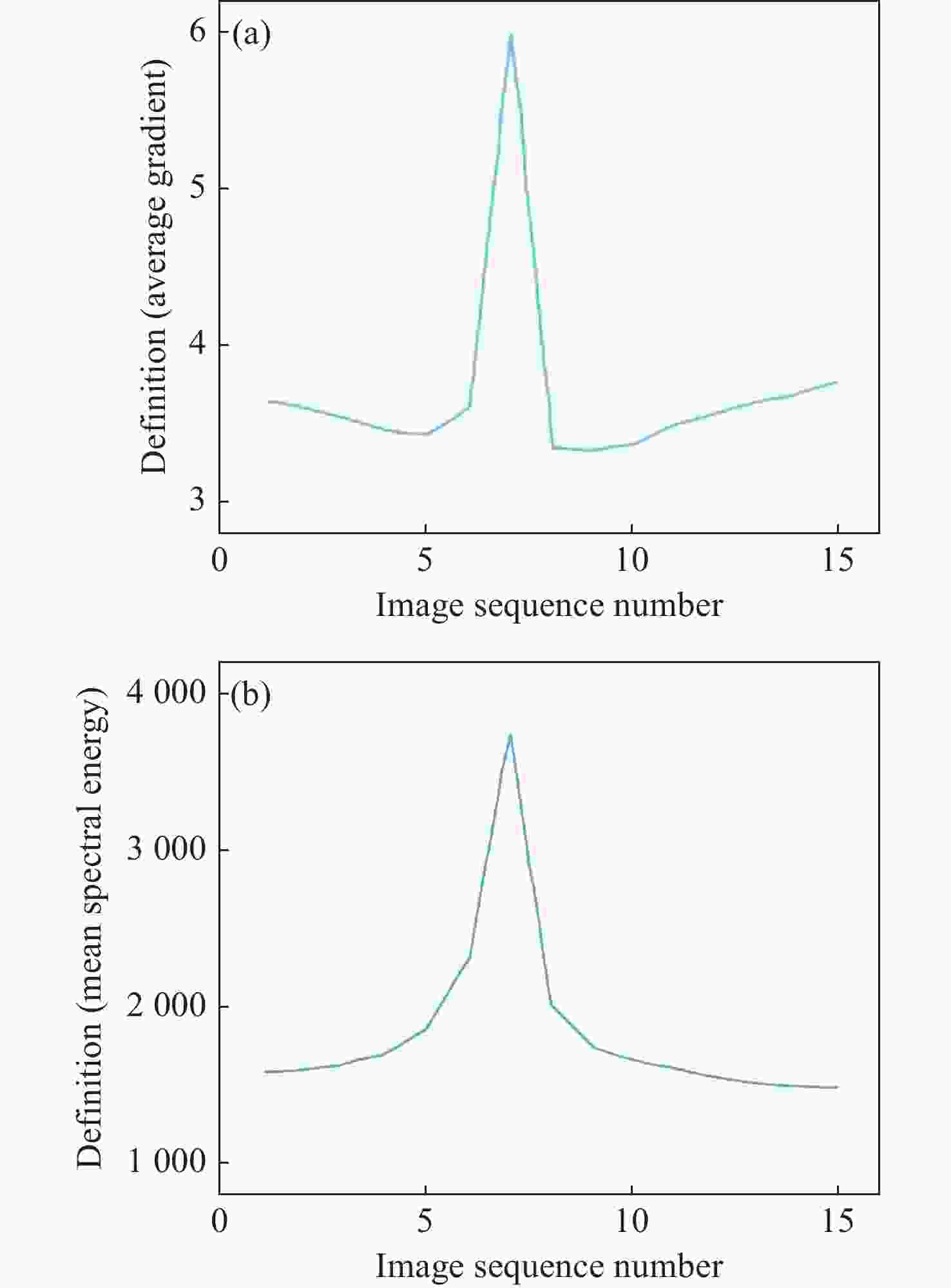

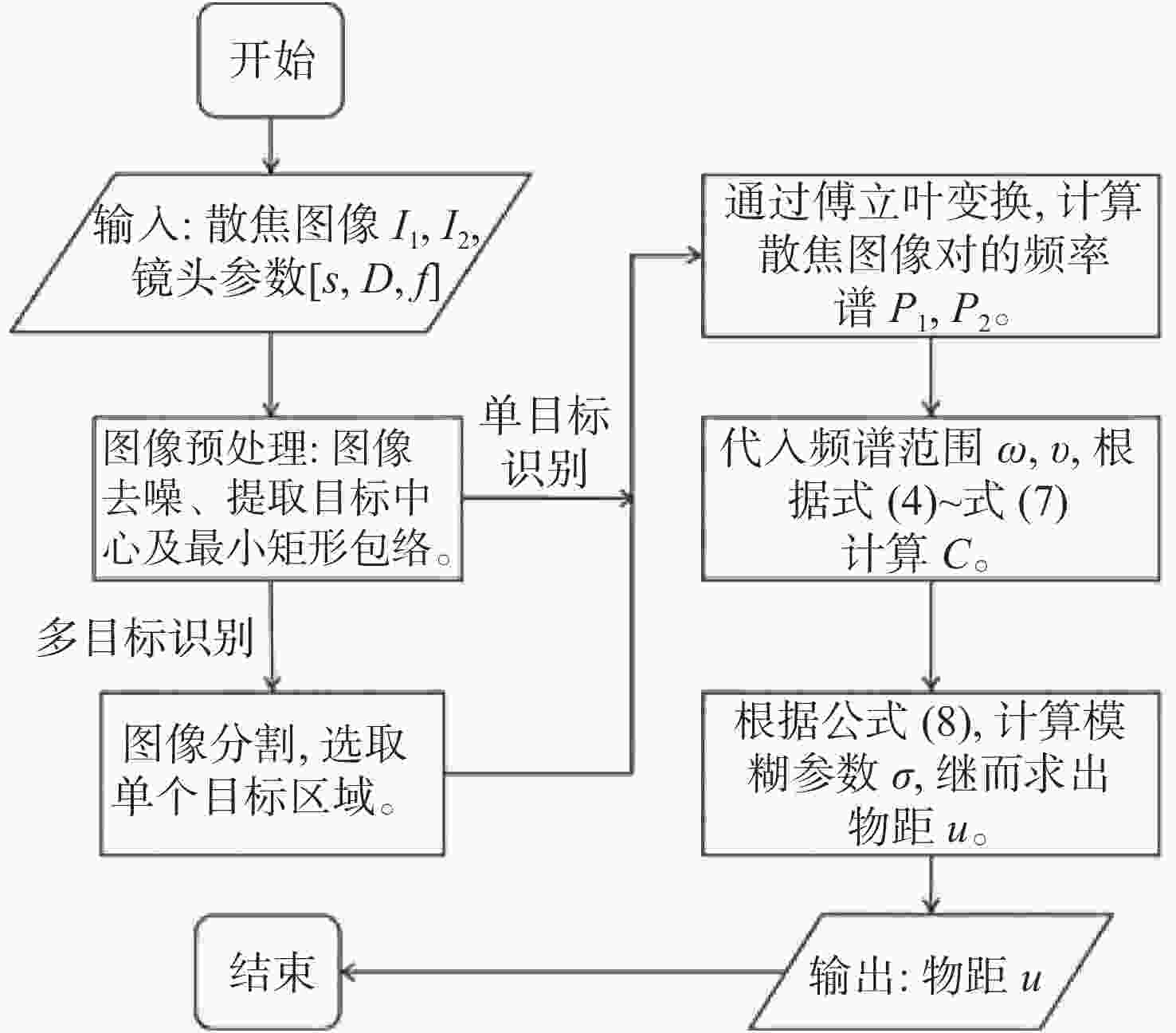

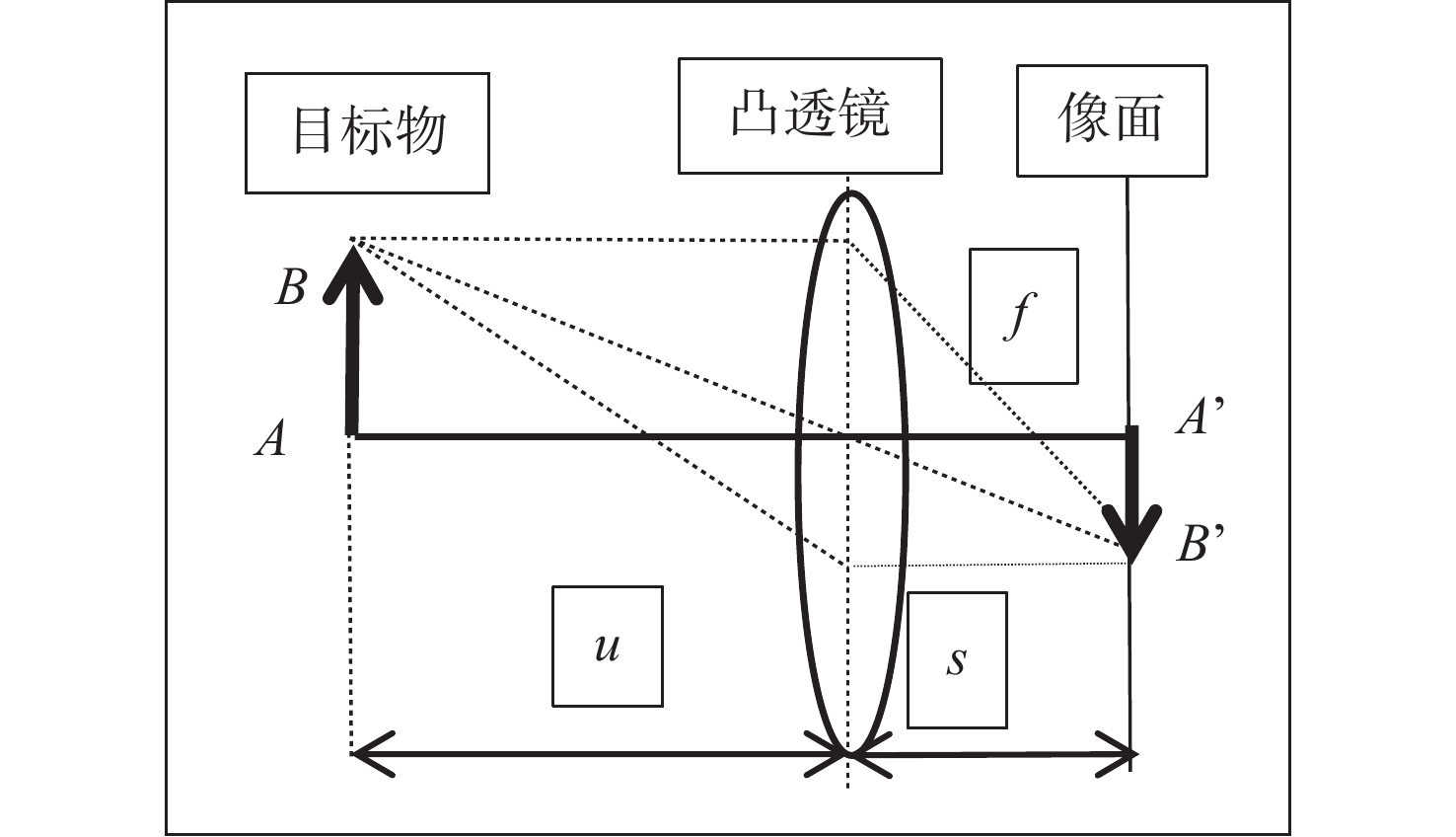

为了实现基于单目相机的弱或无表面纹理特征目标精确测距,提出了一种基于保留边缘频谱信息的改进散焦图像测距算法。通过对比以傅立叶变换和拉普拉斯变换为计算核心的两种经典散焦测距理论,构建相应的清晰度评价函数,根据灵敏度更好的频谱清晰度函数选择基于频谱的散焦测距法,并根据频谱清晰度函数在保留目标边缘信息的基础上选择频域计算范围,从而进行测距。为验证算法的可行性,本文采用6组不同的鸭蛋样本,获取不同光圈、不同距离的散焦图像,利用该改进算法求解鸭蛋到相机镜头的距离。实验结果表明,基于边缘频谱保留的散焦图像测距改进算法具有良好的测距效果,相关系数为0.986,均方根误差为11.39 mm,并发现对于斜放拍摄的鸭蛋图像进行图像旋转处理后,可有效地提升测距能力,均方根误差从11.39 mm下降至8.76 mm,平均相对误差从2.85%下降至2.28%,相关系数提升至0.99。基本满足了弱或无表面纹理特征目标测距的稳定、精度等要求。

Abstract:In order to achieve accurate target ranging of weak or non surface texture features using a monocular camera, an improved defocused image ranging algorithm based on preserving edge spectral information is presented. By comparing two classical defocal ranging theories with Fourier transform and Laplace transform as the foundational principals of calculation, a corresponding definition evaluation function is constructed. We select the method based on the spectrum definition function with better sensitivity, and select the calculation range of the frequency domain by retaining the information on the target edge. To verify the feasibility of the algorithm, 6 sets of different duck egg samples are used to obtain scattered focus images of different apertures and distances, and the improved algorithm was used to solve the distance of the duck eggs from the camera lens. The experimental results show that the improved algorithm based on the edge spectrum preservation has a good ranging effect with a correlation coefficient of 0.986 and Root Mean Square Error (RMSE) of 11.39 mm. It is found that the range ability can be effectively improved after the image rotation processing of the duck egg image taken at an oblique angle, with the RMSE is reduced from 11.39 mm to 8.76 mm, the average relative error is reduced from 2.85% to 2.28% and the correlation coefficient reaches 0.99. The proposed algorithm fundamentally meets the requirements of stability and high accuracy in ranging targets with weak or non surface texture features.
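The abstract compares sharpness evaluation functions built on the Fourier transform and on the Laplace transform. The sketch below shows one plausible form of each, so the idea of "sharpness falls as defocus grows" can be tried on a texture-free shape. Everything here is an illustrative assumption rather than the paper's definition: the function names, the annular frequency band, the use of the discrete Laplacian operator for the second measure, and the box blur standing in for optical defocus.

```python
import numpy as np


def spectral_sharpness(img, r_min=1.0, r_max=None):
    """Mean FFT magnitude inside the band r_min <= r <= r_max (hypothetical measure)."""
    img = np.asarray(img, dtype=float)
    spectrum = np.fft.fftshift(np.fft.fft2(img))  # zero frequency moved to the centre
    h, w = img.shape
    y, x = np.indices((h, w))
    r = np.hypot(y - h // 2, x - w // 2)          # radial distance from the DC term
    if r_max is None:
        r_max = min(h, w) / 2
    band = (r >= r_min) & (r <= r_max)            # r_min > 0 excludes the DC term
    return np.abs(spectrum[band]).mean()


def laplacian_sharpness(img):
    """Mean squared response of the 4-neighbour discrete Laplacian (hypothetical measure)."""
    img = np.asarray(img, dtype=float)
    lap = (img[:-2, 1:-1] + img[2:, 1:-1] + img[1:-1, :-2] + img[1:-1, 2:]
           - 4.0 * img[1:-1, 1:-1])
    return np.mean(lap ** 2)


def box_blur(img, k):
    """Separable box blur of odd width k, used only as a stand-in for defocus."""
    kernel = np.ones(k) / k
    rows = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="same"), 0, img)
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="same"), 1, rows)


def make_disc(size=128, radius=40):
    """Texture-free test target: a uniform bright disc on a dark background."""
    y, x = np.indices((size, size))
    return (np.hypot(y - size / 2, x - size / 2) <= radius).astype(float)


if __name__ == "__main__":
    sharp = make_disc()
    for k in (1, 5, 9, 13):
        blurred = box_blur(sharp, k)
        print(k, spectral_sharpness(blurred), laplacian_sharpness(blurred))
```

Because the disc has no interior texture, all of the signal that either measure responds to comes from its edge, which is the situation the abstract targets. Both values decrease as the blur width grows.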

Key words:

- machine vision

- defocused image ranging

- edge spectrum

- image processing

- non/weak-texture

Figure 5. (a) and (e) are duck egg images; (b) and (f) are the original spectra; (c) and (g) are the edge-preserving frequency-intercepted spectra; (d) and (h) are the spatial-domain images corresponding to the spectra after frequency interception

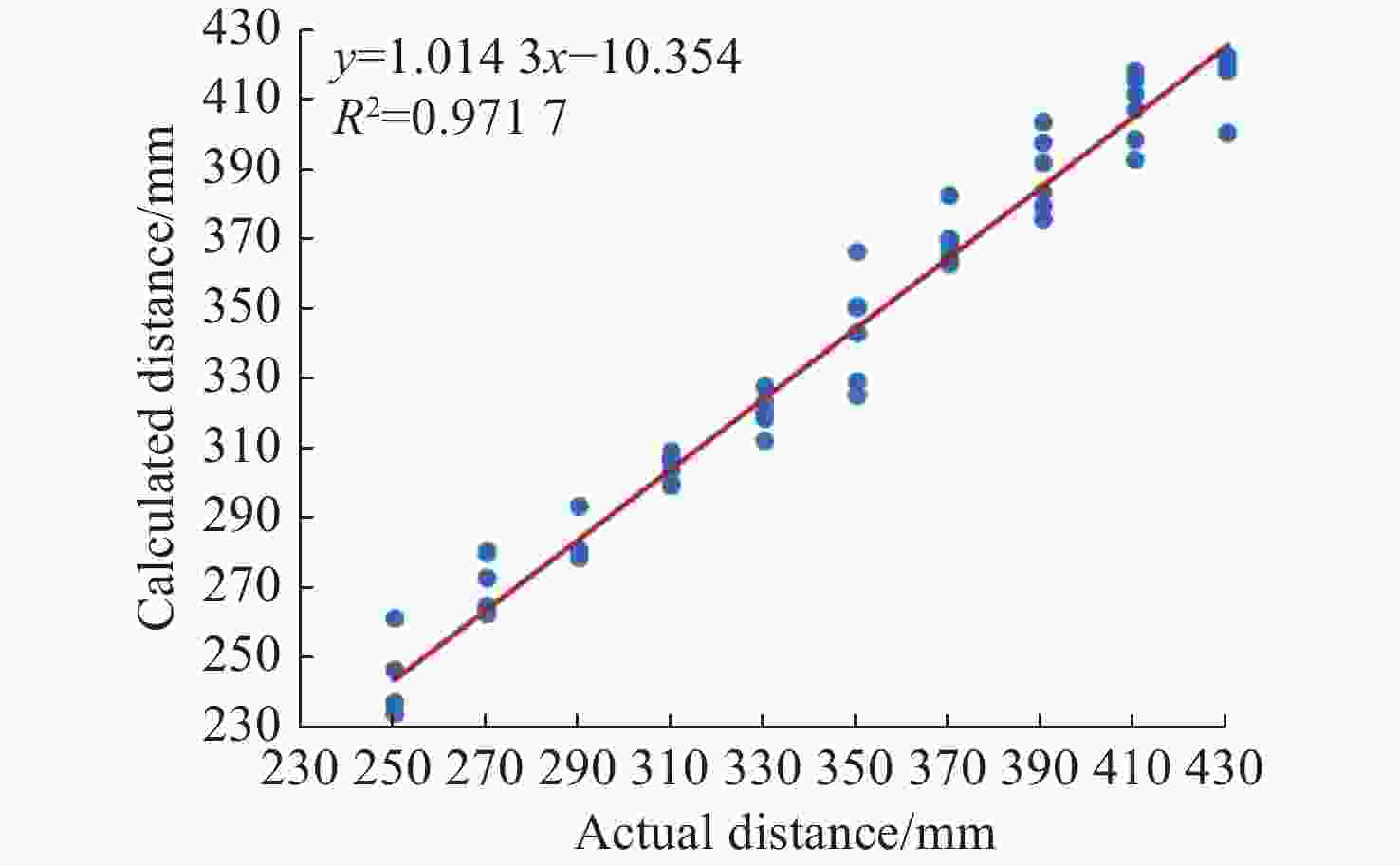

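Table 1. Experimental results for actual and calculated distances

| No. | Duck egg characteristics | Maximum error (mm) | RMSE (mm) | Mean relative error (%) |
| --- | --- | --- | --- | --- |
| 1 | Green shell, upright | 10.90 | 6.59 | 1.63 |
| 2 | White shell, upright | 16.60 | 10.22 | 2.87 |
| 3 | White shell, upright | 16.99 | 10.65 | 2.98 |
| 4 | White shell, horizontal | 13.92 | 8.30 | 2.24 |
| 5 | White shell, oblique | 29.44 | 16.93 | 4.31 |
| 6 | Green shell, oblique | 24.75 | 12.73 | 3.08 |
| Total | | 29.44 | 11.39 | 2.85 |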
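The "Total" row of Table 1 can be reproduced from the six per-sample rows. The short check below is a sketch under one assumption that the source does not state: each sample contributes the same number of distance measurements, so the squared errors can be pooled with equal weights. The variable names are illustrative.

```python
import math

# Per-sample values transcribed from Table 1.
max_error = [10.90, 16.60, 16.99, 13.92, 29.44, 24.75]   # mm
rmse = [6.59, 10.22, 10.65, 8.30, 16.93, 12.73]          # mm
mean_rel_error = [1.63, 2.87, 2.98, 2.24, 4.31, 3.08]    # %

# Assumption: equal number of measurements per sample (equal weights).
total_max = max(max_error)                                      # worst case over all samples
total_rmse = math.sqrt(sum(r ** 2 for r in rmse) / len(rmse))   # pool squared errors, then root
total_rel = sum(mean_rel_error) / len(mean_rel_error)           # plain average

print(f"{total_max:.2f} {total_rmse:.2f} {total_rel:.2f}")  # 29.44 11.39 2.85
```

Under that assumption the pooled values agree with the reported totals of 29.44 mm, 11.39 mm, and 2.85%.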

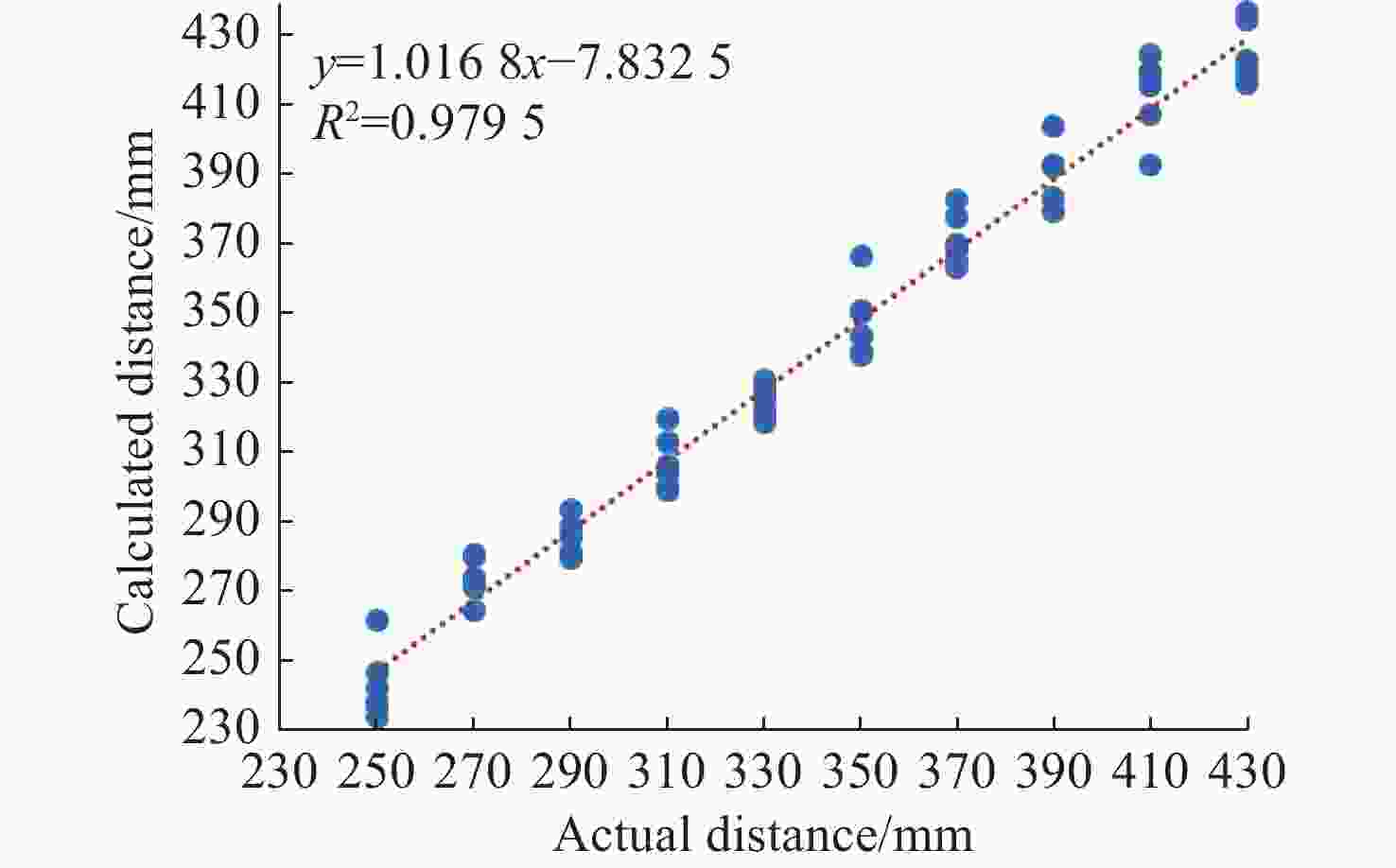

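Table 2. Ranging results before and after image rotation

| No. | Processing | Maximum error (mm) | RMSE (mm) | Mean relative error (%) |
| --- | --- | --- | --- | --- |
| 5 | Original image | 29.44 | 16.93 | 4.31 |
| 5 | After image rotation | 13.55 | 8.72 | 2.25 |
| 6 | Original image | 24.75 | 12.73 | 3.08 |
| 6 | After image rotation | 14.82 | 5.93 | 1.73 |
| Total | Original image | 29.44 | 11.39 | 2.85 |
| Total | After image rotation | 16.99 | 8.76 | 2.28 |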

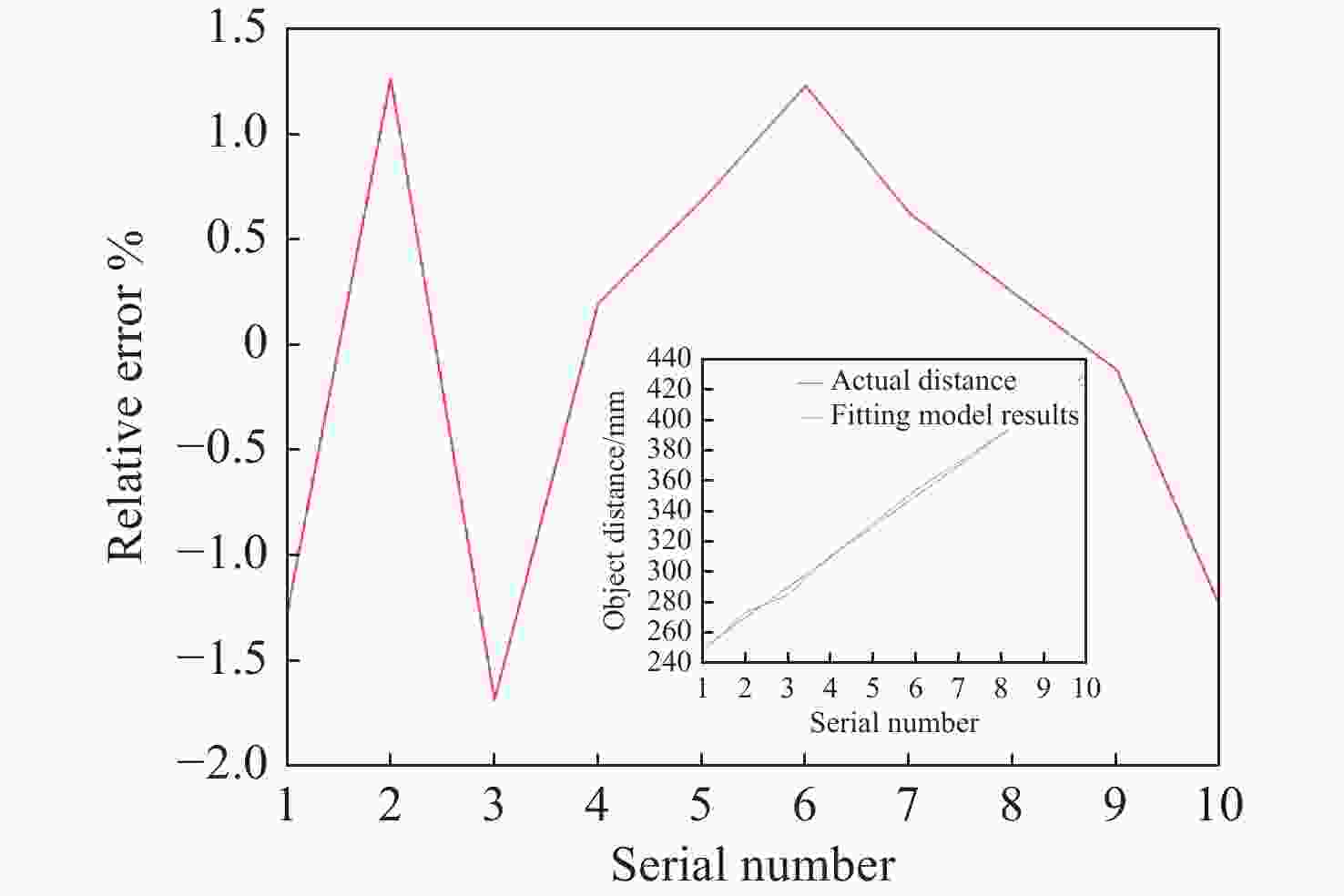

Table 3. Results of the fitting model

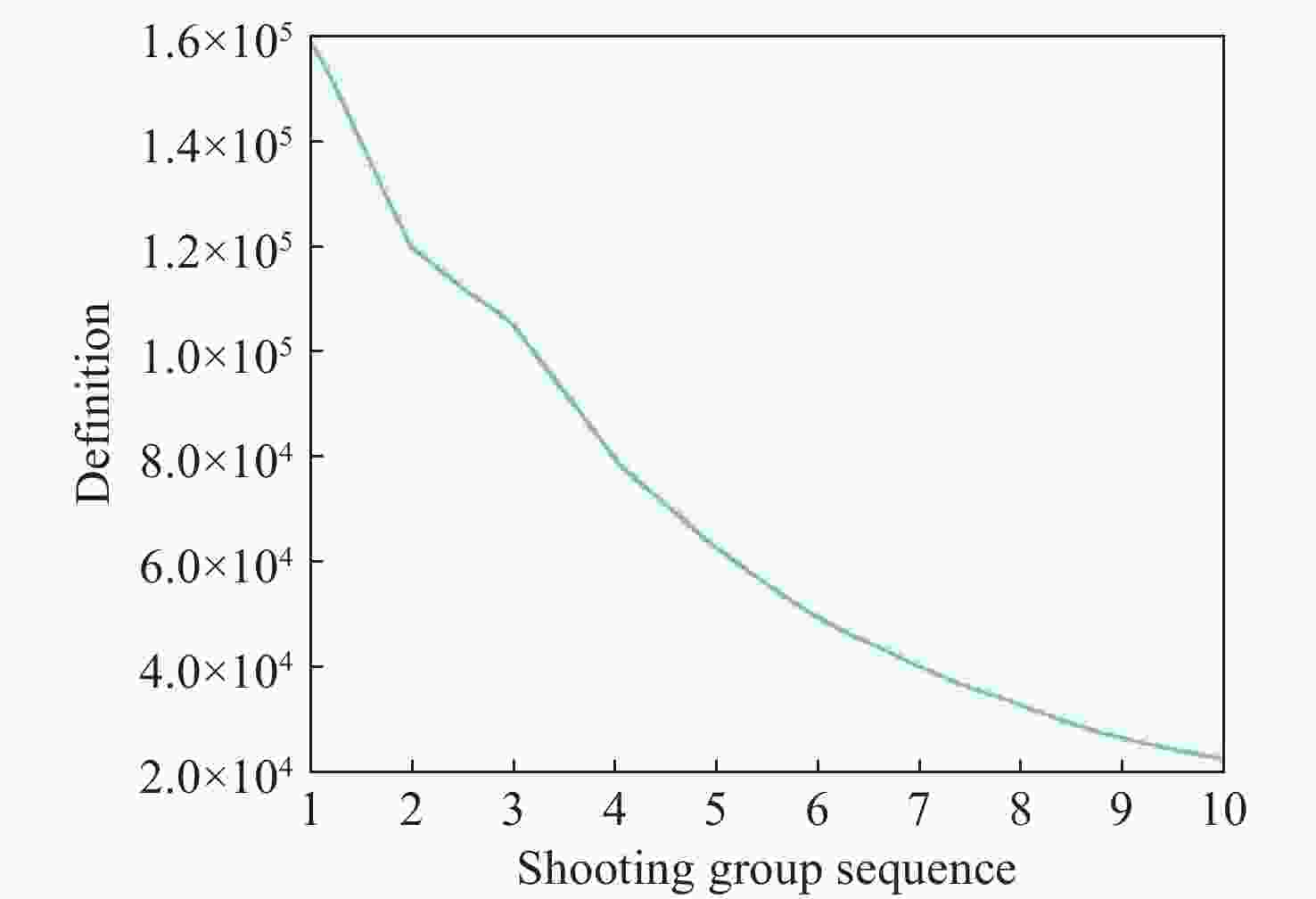

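| No. | Actual distance (mm) | Calculated distance (mm) | Error (mm) | Relative error (%) |
| --- | --- | --- | --- | --- |
| 1 | 250 | 246.82 | −3.18 | −1.27 |
| 2 | 270 | 273.41 | 3.41 | 1.26 |
| 3 | 290 | 285.12 | −4.88 | −1.68 |
| 4 | 310 | 310.62 | 0.62 | 0.20 |
| 5 | 330 | 332.27 | 2.27 | 0.69 |
| 6 | 350 | 354.30 | 4.30 | 1.23 |
| 7 | 370 | 372.32 | 2.32 | 0.63 |
| 8 | 390 | 390.98 | 0.98 | 0.25 |
| 9 | 410 | 409.52 | −0.48 | −0.12 |
| 10 | 430 | 424.64 | −5.36 | −1.25 |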

Table 4. Parameters of the fitting model

| α | β | SSE | R² | RMSE |
| --- | --- | --- | --- | --- |
| −91.97 (−96.21, −87.74) | 1349 (1302, 1395) | 104.9 | 0.9968 | 3.62 |
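The error columns of Table 3 and the SSE and RMSE of Table 4 can be recomputed from the actual and calculated distances alone. The sketch below does that arithmetic. It assumes, without confirmation from the source, that the Table 4 statistics are the residuals of the ten Table 3 points and that the RMSE uses n − 2 degrees of freedom for the two fitted parameters α and β; the variable names are illustrative.

```python
import math

# Distances transcribed from Table 3 (mm).
actual = [250, 270, 290, 310, 330, 350, 370, 390, 410, 430]
calculated = [246.82, 273.41, 285.12, 310.62, 332.27,
              354.30, 372.32, 390.98, 409.52, 424.64]

# Signed error and relative error per measurement.
errors = [c - a for a, c in zip(actual, calculated)]
relative_errors = [100.0 * e / a for e, a in zip(errors, actual)]

# Sum of squared errors over all ten measurements.
sse = sum(e ** 2 for e in errors)

# Assumption: two fitted parameters (alpha, beta), so n - 2 degrees of freedom.
n_params = 2
rmse = math.sqrt(sse / (len(errors) - n_params))

for a, e, r in zip(actual, errors, relative_errors):
    print(f"{a} mm: error {e:+.2f} mm, relative error {r:+.2f} %")
print(f"SSE = {sse:.1f}, RMSE = {rmse:.2f}")
```

Under these assumptions the recomputed values round to SSE = 104.9 and RMSE = 3.62, matching Table 4, and the per-row errors match the last two columns of Table 3.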

