

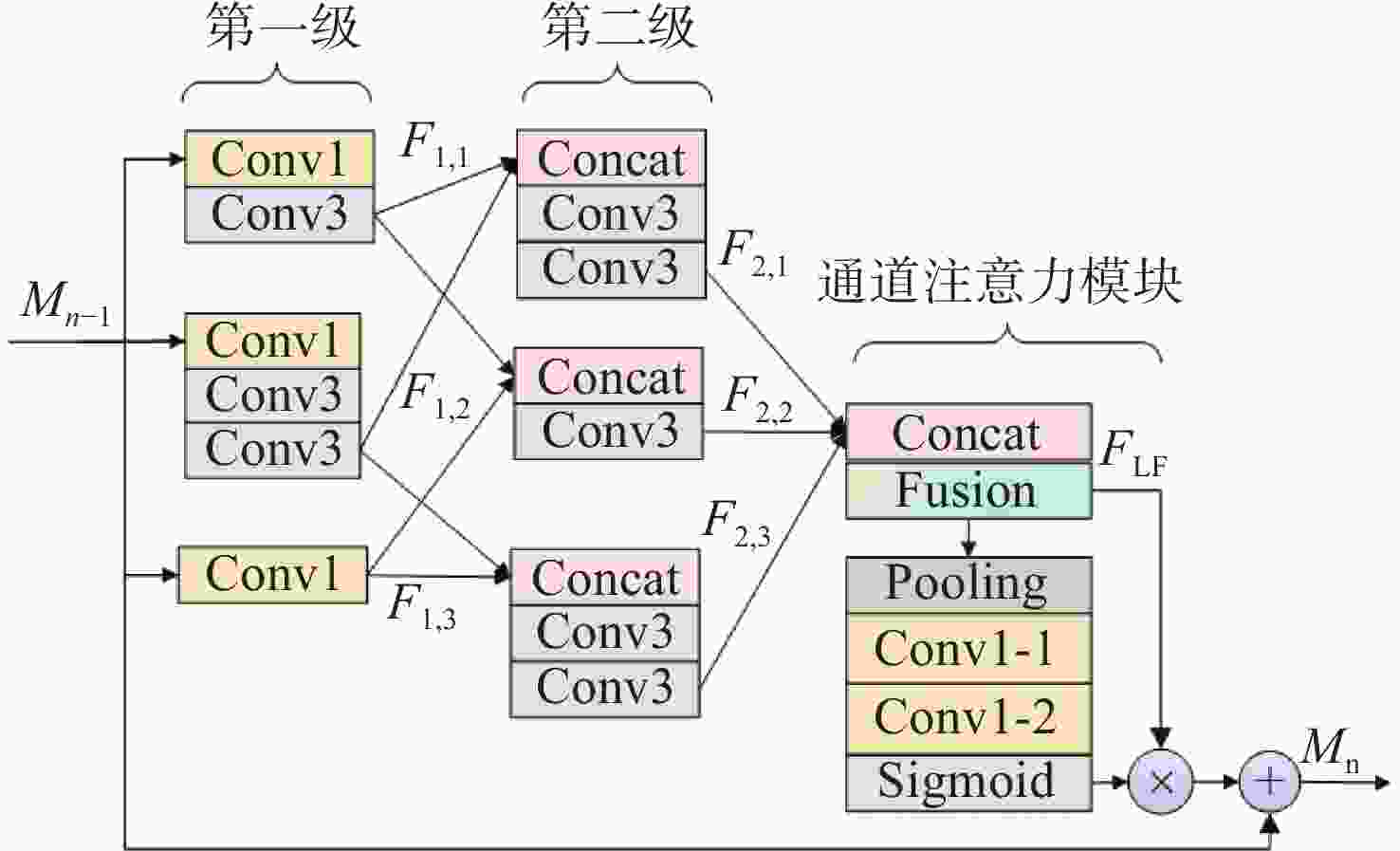

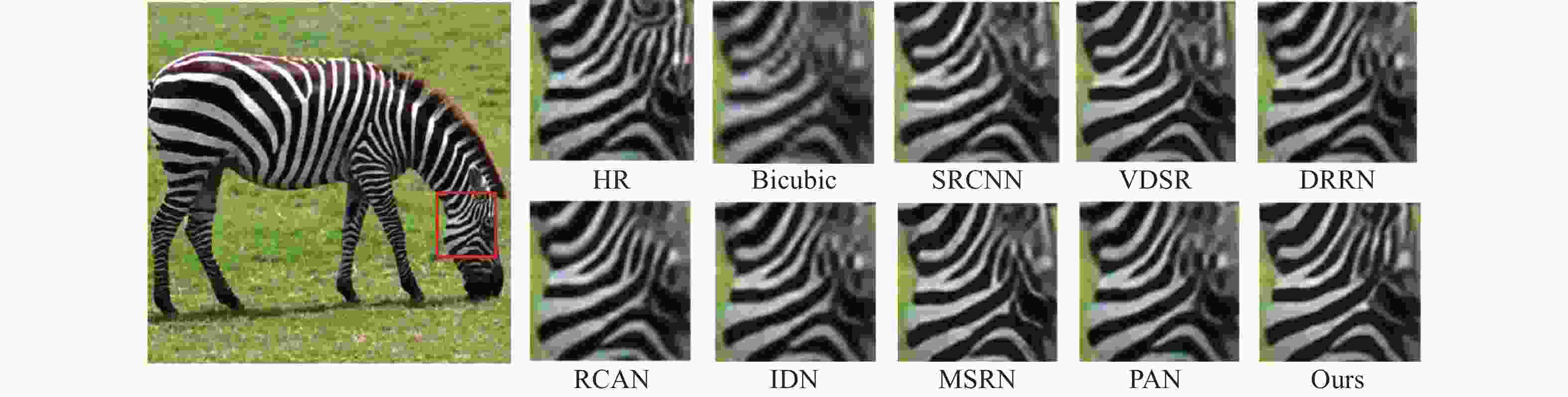

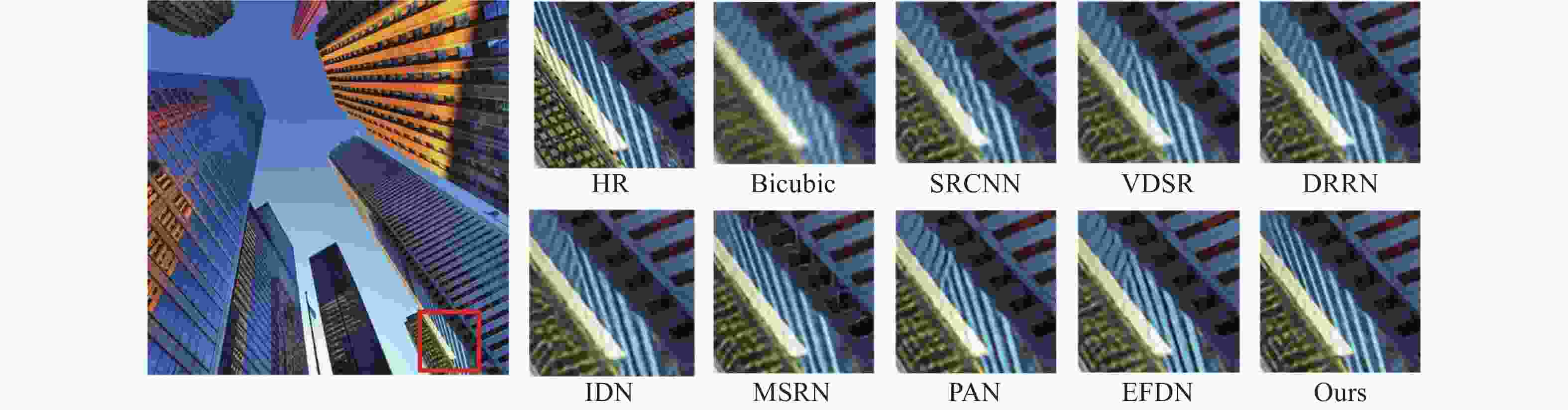

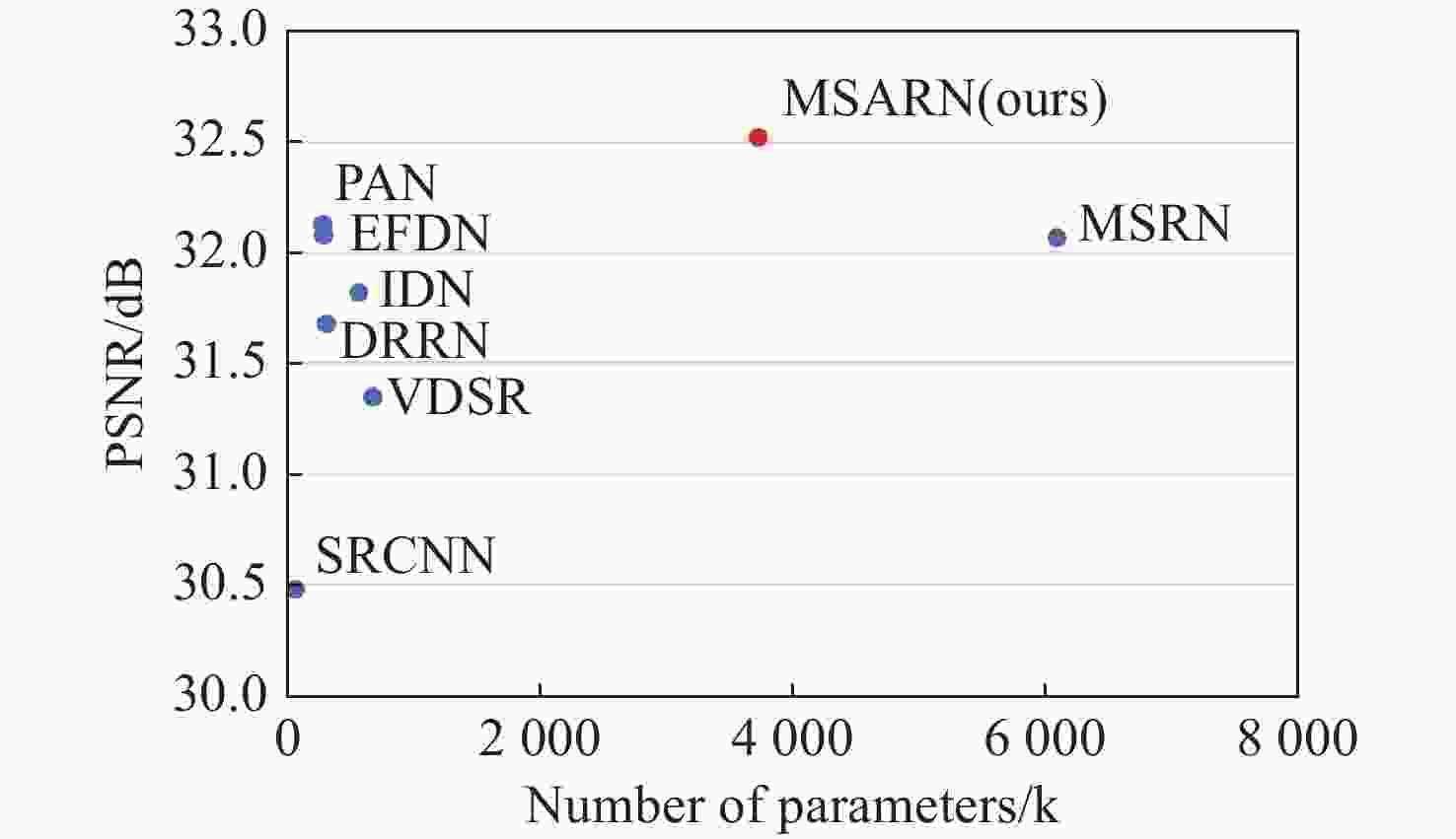

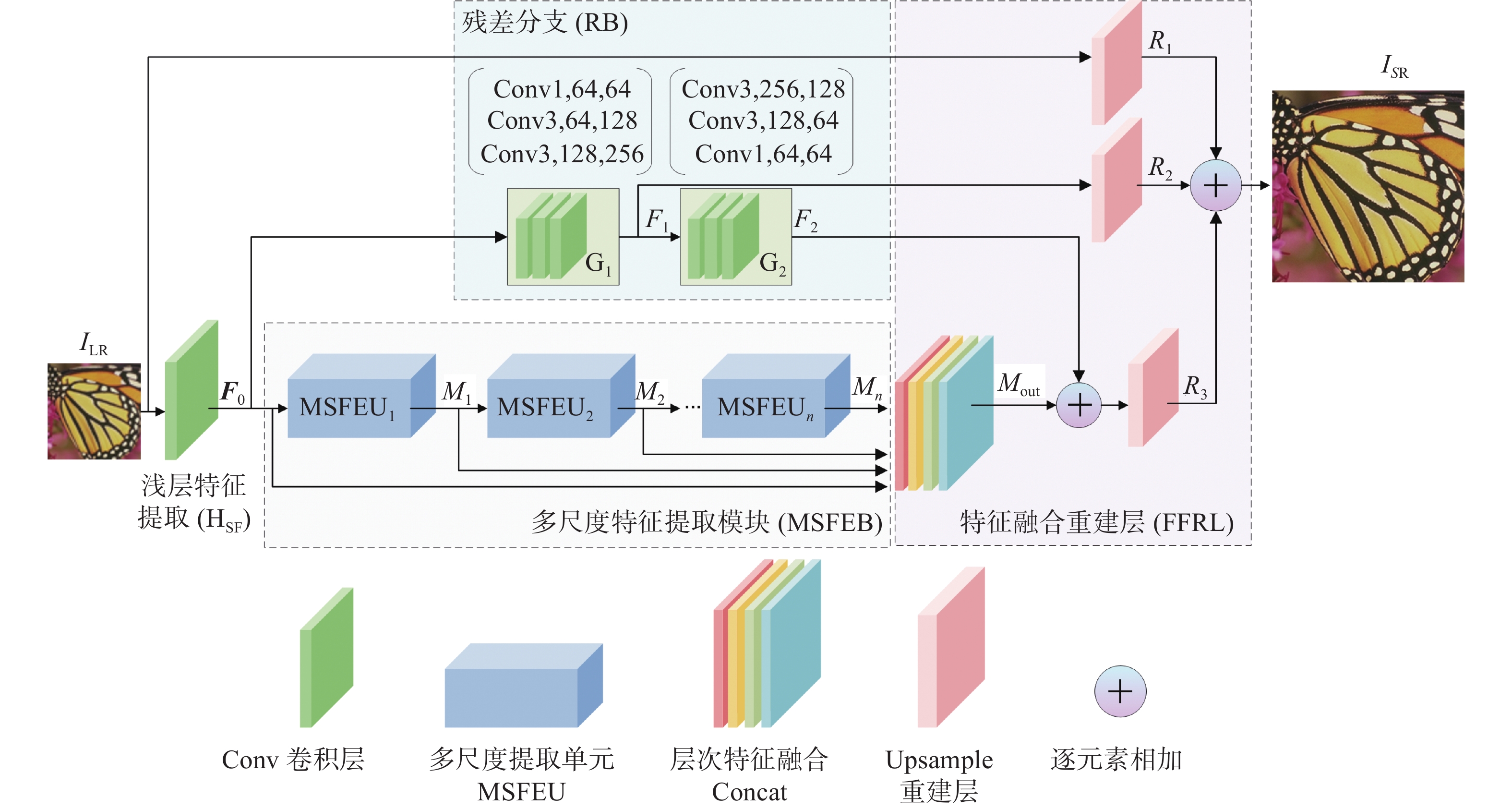

Abstract: The resolution of optical imaging is limited by the diffraction limit, detector size, and many other factors. To obtain super-resolution images with richer details and clearer textures, this paper proposes a multi-scale feature attention fusion residual network. First, a single convolutional layer extracts shallow features from the image; a cascade of multi-scale feature extraction units then extracts multi-scale features. A channel attention module inside each multi-scale feature extraction unit adaptively recalibrates the weights of the feature channels, increasing the attention paid to high-frequency information. The shallow features and the output of every multi-scale feature extraction unit serve as hierarchical features for global feature fusion and reconstruction. Finally, a residual branch introduces the shallow features and the multi-level image features to reconstruct the high-resolution image. The Charbonnier loss function is adopted to make training more stable and convergence faster. Comparative experiments on international benchmark datasets show that the model outperforms most state-of-the-art methods on objective metrics. In particular, for 4× reconstruction on the Set5 dataset, PSNR improves by 0.39 dB and SSIM rises to 0.8992, and the subjective visual quality of the results is also better.


Table 1. Parameters of the multi-scale feature extraction units

| Module | Component | Kernel size | Input size | Output size |
| --- | --- | --- | --- | --- |
| Stage 1 | Conv1 | 1×1 | H×W×64 | H×W×32 |
| | Conv3 | 3×3 | H×W×32 | H×W×32 |
| Stage 2 | Conv3 | 3×3 | H×W×64 | H×W×64 |
| Channel attention | Fusion | 1×1 | H×W×192 | H×W×64 |
| | Pooling | - | H×W×64 | 1×1×64 |
| | Conv1-1 | 1×1 | 1×1×64 | 1×1×4 |
| | Conv1-2 | 1×1 | 1×1×4 | 1×1×64 |
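The channel attention rows of Table 1 (global pooling to 1×1×64, followed by 1×1 convolutions 64 → 4 → 64) can be illustrated with a dependency-free Python sketch. This is not the authors' implementation: the ReLU and sigmoid activations, the random weights, and the names `channel_attention`, `w_down`, and `w_up` are assumptions made here for illustration. Only the tensor sizes come from the table.

```python
import math
import random


def channel_attention(feat, w_down, w_up):
    """Rescale each channel of `feat` by a learned gate.

    feat:   list of C channels, each an H x W list of lists
    w_down: R x C weights, standing in for Conv1-1 (1x1 conv, C -> R)
    w_up:   C x R weights, standing in for Conv1-2 (1x1 conv, R -> C)
    """
    # Pooling: global average over H x W, giving one value per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]
    # Conv1-1: channel reduction C -> R. The ReLU is an assumption.
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w_down]
    # Conv1-2: channel expansion R -> C. The sigmoid gate is an assumption.
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w_up
    ]
    # Multiply every pixel of a channel by that channel's gate.
    scaled = [[[g * v for v in row] for row in ch] for ch, g in zip(feat, gates)]
    return scaled, gates


random.seed(0)
C, R, H, W = 64, 4, 8, 8  # 64 and 4 follow Table 1; H and W are arbitrary here
feat = [[[random.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
w_down = [[random.uniform(-0.1, 0.1) for _ in range(C)] for _ in range(R)]
w_up = [[random.uniform(-0.1, 0.1) for _ in range(R)] for _ in range(C)]

scaled, gates = channel_attention(feat, w_down, w_up)
print(len(gates), len(scaled), len(scaled[0]), len(scaled[0][0]))
```

The output keeps the H×W×64 shape of the input. Only the per-channel scale changes, which is how such a module can emphasize some feature channels over others.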

Table 2. Validation of different modules

| Model | CA | RB | FFRL | PSNR (dB) | SSIM | Time (s) |
| --- | --- | --- | --- | --- | --- | --- |
| MSARNSC | × | √ | × | 27.62 | 0.7682 | 0.11 |
| MSARNDB | × | √ | × | 27.67 | 0.7751 | 0.16 |
| MSARNIB | × | √ | × | 27.78 | 0.7767 | 0.13 |
| MSARNFFRL- | √ | √ | × | 28.26 | 0.7789 | 0.15 |
| MSARN | √ | √ | √ | 28.64 | 0.7840 | 0.14 |

Table 3. Validation of residual branch and channel attention

| Model | CA | RB | FFRL | PSNR (dB) | SSIM |
| --- | --- | --- | --- | --- | --- |
| MSARNRB- | √ | × | √ | 28.57 | 0.7802 |
| MSARNCA- | × | √ | √ | 28.35 | 0.7778 |
| MSARN | √ | √ | √ | 28.64 | 0.7840 |

Table 4. PSNR comparison of different loss functions

| Scale | Loss function | Set5 | Set14 |
| --- | --- | --- | --- |
| ×2 | L2 | 37.84 | 33.50 |
| | Charbonnier | 38.13 | 33.89 |
| ×3 | L2 | 33.91 | 30.03 |
| | Charbonnier | 34.05 | 30.40 |
| ×4 | L2 | 31.53 | 28.26 |
| | Charbonnier | 31.67 | 28.41 |
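To make the two losses compared in Table 4 concrete, here is a minimal Python sketch of the L2 (mean squared error) loss and the Charbonnier loss, using the standard definition sqrt((x - y)^2 + eps^2) averaged over pixels. The value `eps=1e-3` and the toy pixel lists are assumptions for illustration, since the text does not state the constant used in training.

```python
import math


def l2_loss(pred, target):
    """Mean squared error over paired pixel values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def charbonnier_loss(pred, target, eps=1e-3):
    """Mean of sqrt(diff^2 + eps^2). eps=1e-3 is an assumed value."""
    return sum(
        math.sqrt((p - t) ** 2 + eps ** 2) for p, t in zip(pred, target)
    ) / len(pred)


# Toy data: one pixel is badly wrong (0.90 vs 0.10), the others are close.
pred = [0.50, 0.20, 0.90, 0.40]
target = [0.50, 0.25, 0.10, 0.40]
print("L2:         ", l2_loss(pred, target))
print("Charbonnier:", charbonnier_loss(pred, target))

# Doubling a large error quadruples L2 but only about doubles Charbonnier.
print(l2_loss([2.0], [0.0]) / l2_loss([1.0], [0.0]))
print(charbonnier_loss([2.0], [0.0]) / charbonnier_loss([1.0], [0.0]))
```

Because the Charbonnier penalty grows roughly linearly for large errors, a few outlier pixels dominate the loss less than they do under L2, which is one common explanation for the steadier training reported above.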

Table 5. PSNR/SSIM comparison of different super-resolution models

| Scale | Method | Set5 | Set14 | BSD100 | Urban100 |
| --- | --- | --- | --- | --- | --- |
| ×2 | Bicubic | 33.68/0.9265 | 30.24/0.8691 | 29.56/0.8435 | 26.88/0.8405 |
| | SRCNN | 36.66/0.9542 | 32.45/0.9067 | 31.56/0.8879 | 29.51/0.8946 |
| | VDSR | 37.52/0.9587 | 33.05/0.9127 | 31.90/0.8960 | 30.77/0.9141 |
| | DRRN | 37.74/0.9597 | 33.23/0.9136 | 32.05/0.8973 | 31.23/0.9188 |
| | IDN | 37.83/0.9600 | 33.30/0.9148 | 32.08/0.8985 | 31.27/0.9196 |
| | MSRN | 38.08/0.9605 | 33.74/0.9170 | 32.23/0.9013 | 32.22/0.9326 |
| | PAN | 38.00/0.9605 | 33.59/0.9181 | 32.18/0.8997 | 32.01/0.9273 |
| | EFDN | 38.00/0.9604 | 33.57/0.9179 | 32.18/0.8998 | 32.05/0.9275 |
| | Ours | 38.43/0.9626 | 34.05/0.9213 | 32.32/0.9028 | 32.28/0.9338 |
| ×3 | Bicubic | 30.40/0.8686 | 27.54/0.7741 | 27.21/0.7389 | 24.46/0.7349 |
| | SRCNN | 32.75/0.9090 | 29.29/0.8215 | 28.41/0.7863 | 26.24/0.7991 |
| | VDSR | 33.66/0.9213 | 29.78/0.8318 | 28.83/0.7976 | 27.14/0.8279 |
| | DRRN | 34.03/0.9244 | 29.96/0.8349 | 28.95/0.8004 | 27.53/0.8377 |
| | IDN | 34.11/0.9253 | 29.99/0.8354 | 28.95/0.8013 | 27.42/0.8359 |
| | MSRN | 34.38/0.9262 | 30.34/0.8395 | 29.08/0.8041 | 28.08/0.8554 |
| | PAN | 34.40/0.9271 | 30.36/0.8423 | 29.11/0.8050 | 28.11/0.8511 |
| | Ours | 34.61/0.9284 | 30.33/0.8480 | 29.25/0.8076 | 28.39/0.8607 |
| ×4 | Bicubic | 28.43/0.8109 | 26.00/0.7023 | 25.96/0.6678 | 23.14/0.6574 |
| | SRCNN | 30.48/0.8628 | 27.50/0.7513 | 26.90/0.7103 | 24.52/0.7226 |
| | VDSR | 31.35/0.8838 | 28.02/0.7678 | 27.29/0.7252 | 25.18/0.7525 |
| | DRRN | 31.68/0.8888 | 28.21/0.7720 | 27.38/0.7284 | 25.44/0.7638 |
| | IDN | 31.82/0.8903 | 28.25/0.7730 | 27.41/0.7297 | 25.41/0.7632 |
| | MSRN | 32.07/0.8903 | 28.60/0.7751 | 27.52/0.7273 | 26.04/0.7896 |
| | PAN | 32.13/0.8948 | 28.61/0.7822 | 27.59/0.7363 | 26.11/0.7854 |
| | EFDN | 32.08/0.8931 | 28.58/0.7809 | 27.56/0.7354 | 26.00/0.7815 |
| | Ours | 32.52/0.8992 | 28.85/0.7840 | 27.70/0.7410 | 26.21/0.7866 |
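For readers unfamiliar with the PSNR figures in Table 5, the following Python sketch shows the textbook definition, PSNR = 10·log10(peak² / MSE), on flat lists of pixel values. It is only an illustration: the 8-bit peak of 255, the toy pixel values, and the helper `mse_ratio_for_gain` are assumptions, and the text does not specify the evaluation protocol (for example, which color channel is used or whether borders are cropped).

```python
import math


def psnr(reference, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """MSE ratio (new / old) implied by a PSNR gain on a single image."""
    return 10.0 ** (-gain_db / 10.0)


reference = [52, 55, 61, 59]  # toy ground-truth pixels
restored = [54, 55, 60, 58]   # toy reconstructed pixels
print("PSNR (dB):", psnr(reference, restored))

# A 0.39 dB gain, as reported for Set5 at 4x, read as a per-image MSE ratio.
print("MSE ratio for +0.39 dB:", mse_ratio_for_gain(0.39))
```

Since the table reports PSNR averaged over a dataset, the MSE ratio is only a rough guide: for a single image, a gain of 0.39 dB corresponds to a mean squared error about 9% lower.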

