摘要:

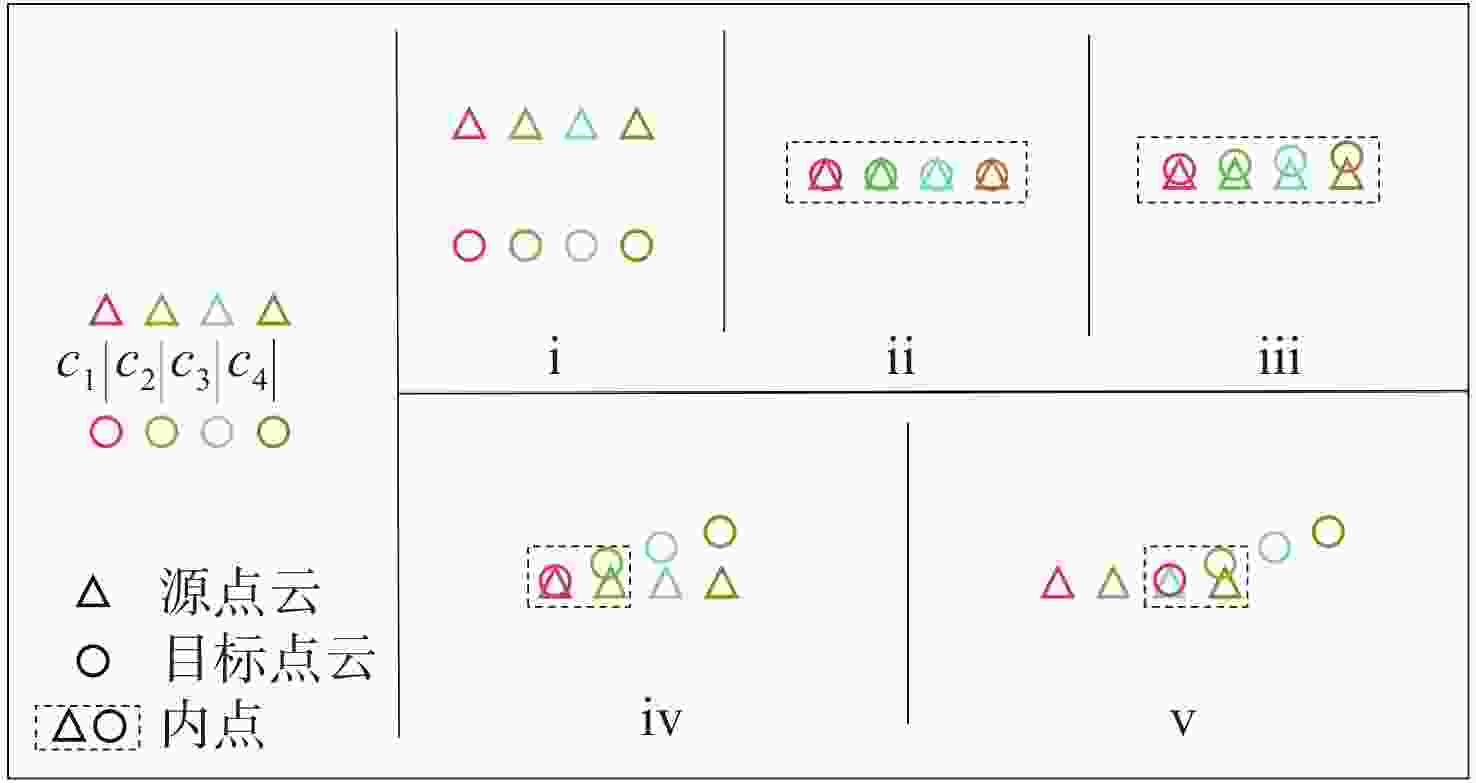

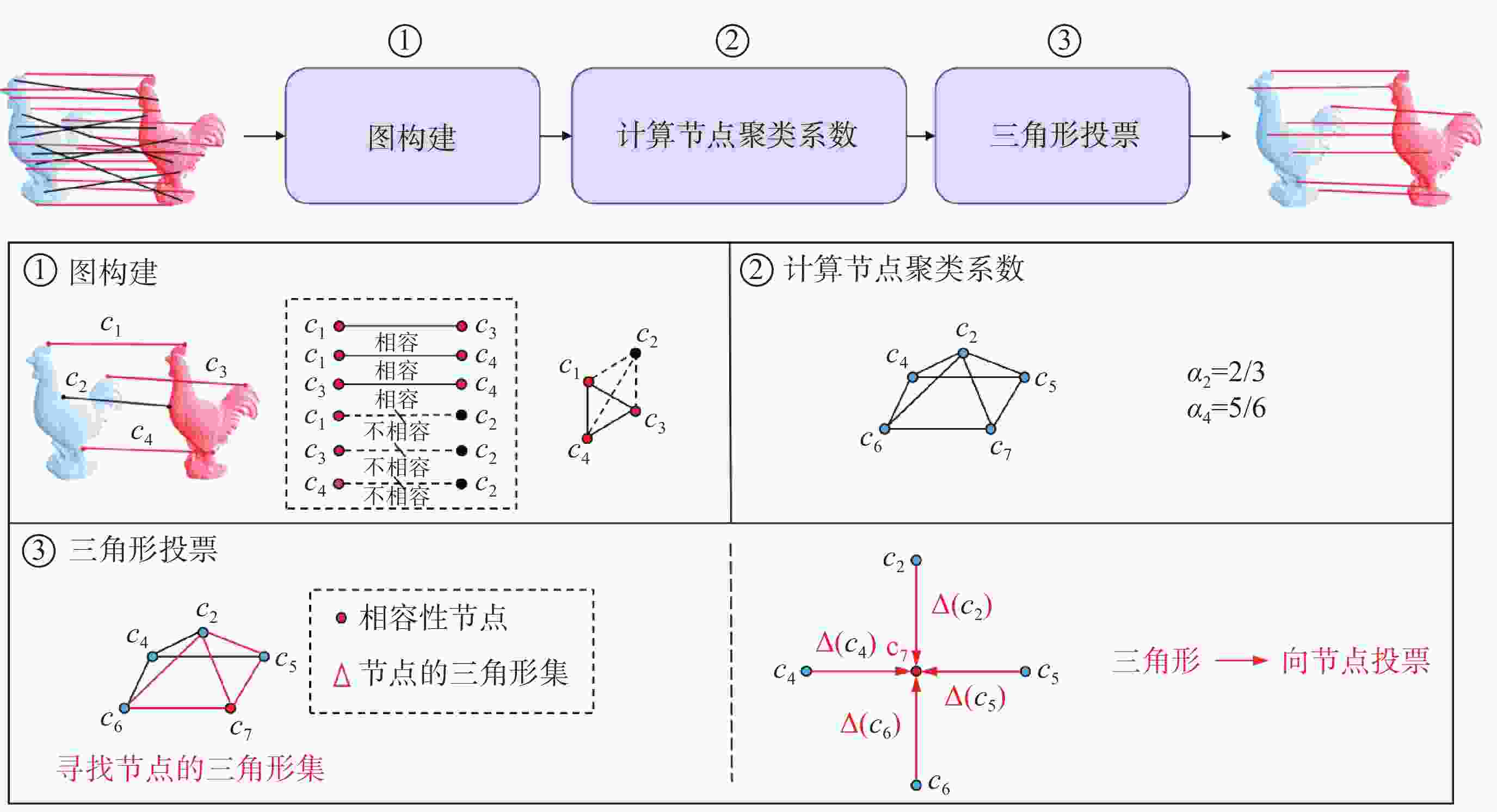

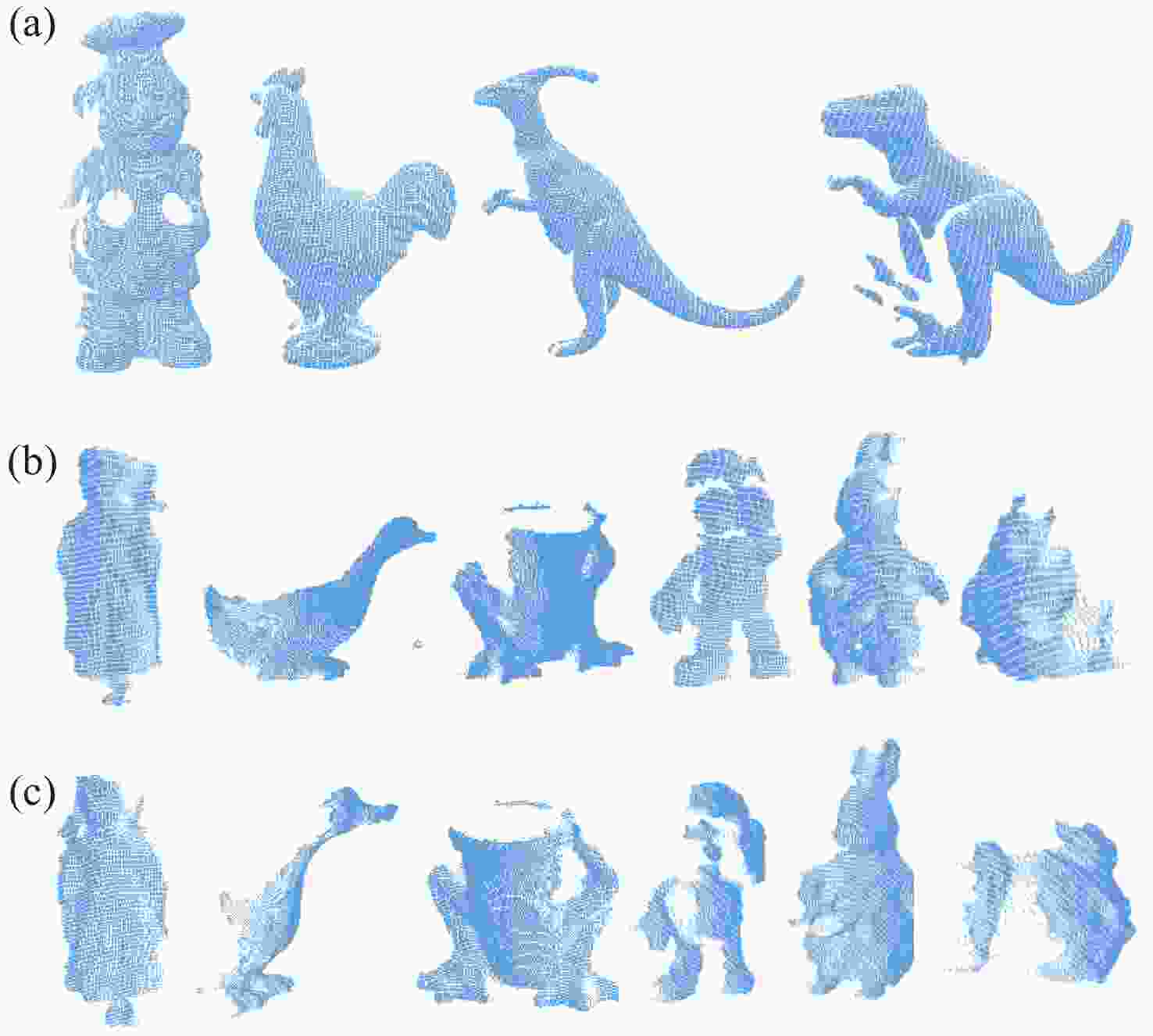

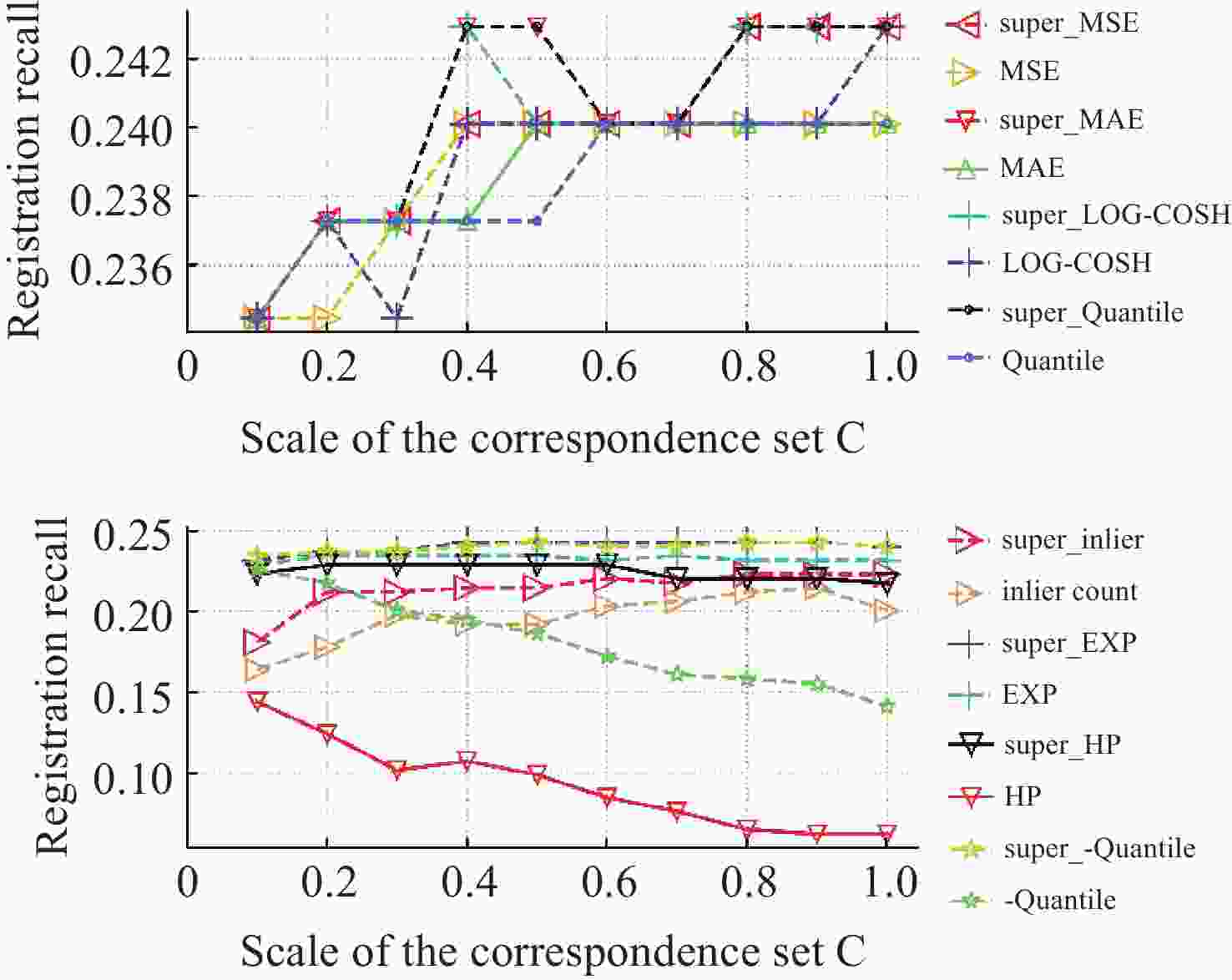

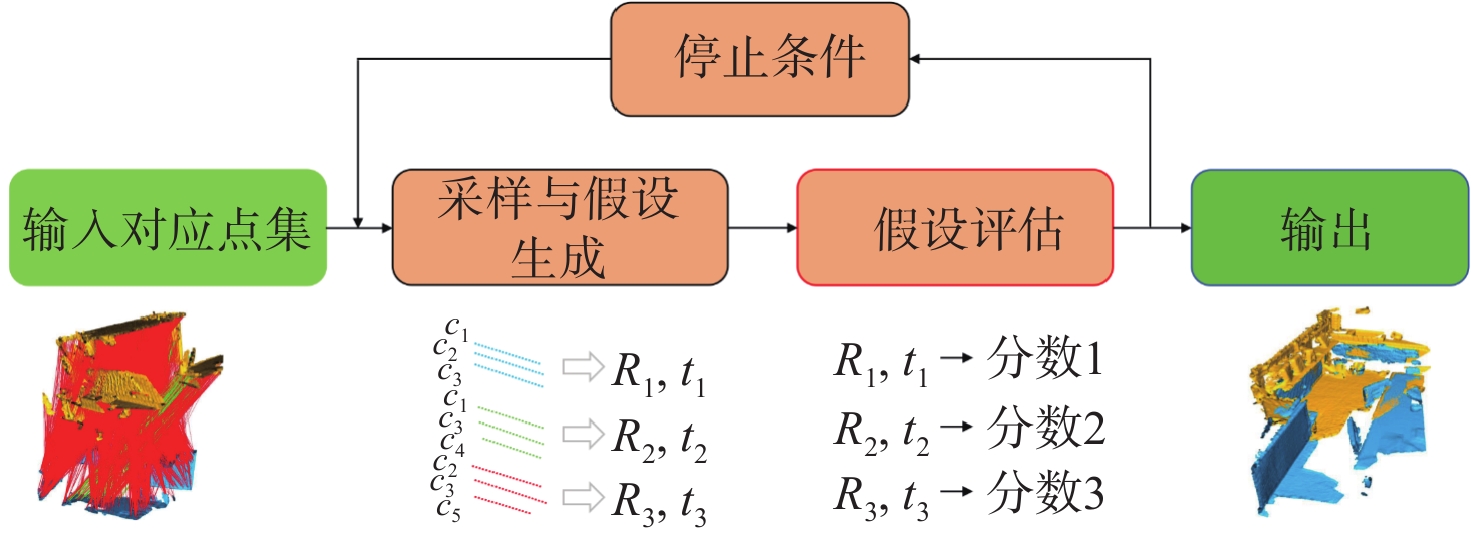

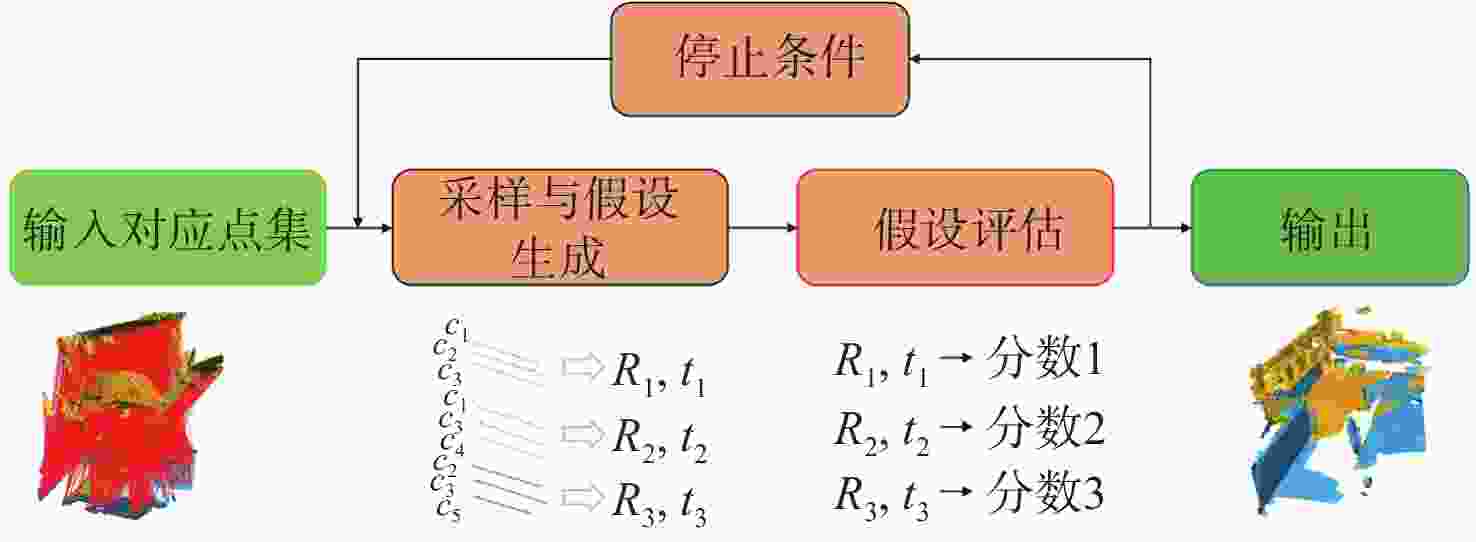

本文提出了一种创新的假设度量方法,将通过三角形投票方法获得的点对置信分数与基于点对的度量相结合。该方法核心观点是:一个好的假设应能将高置信分数的对应点精确对齐,从而产生更高的得分贡献。此外,本文还对现有基于内点的度量有效性方法加以改进,提出2种改进方法:忽略具有较小变换误差的内点距离,以及抑制由大量低置信度对应点引起的错误高分贡献。在3个数据集上的对比实验表明,所提出的度量方法能够提升所有已知的基于点对的度量准确性,并在默认参数设置下有1%~16.95%不同程度的配准性能提升和1.67%~10.79%的时间节约,在时间消耗、鲁棒性和配准性能之间实现了更好的平衡。特别地,改进的内点计数度量具有更加鲁棒和精确的配准性能。因此,本文所提出的度量能够在RANSAC的假设评估阶段识别出更正确的假设,从而实现精确的点云配准。

Abstract:This paper proposes a novel metric that integrates confidence scores of correspondences, obtained through a Triangle Voting (TV) method, with correspondence-based metrics. The proposed metric assumes that a good hypothesis aligns correspondences with high-confidence scores very closely, thereby yielding higher score contributions. We further introduce two enhancement to improve the effectiveness of inlier-based metrics with confidence scores: (1) ignoring the distance of inliers with minor transformation errors, and (2) suppressing the erroneous high-score contributions caused by numerous low-confidence correspondences. Comparative experiments conducted on three datasets demonstrate the superiority of the proposed metric over all previously known correspondence-based metrics. The proposed metric achieves registration performance enhancements ranging from 1% to 16.95% and time savings ranging from 1.67% to 10.79% under default parameter settings. Moreover, it strikes a better balance among time consumption, robustness, and registration performance. Specifically, the improved inlier count metric exhibits highly robust and accurate performance. In conclusion, the proposed metric can accurately identify the more correct hypothesis during the hypothesis evaluation stage of RANSAC, thereby enabling precise point cloud registration.
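The idea of weighting an inlier-based metric by correspondence confidence can be illustrated with a minimal sketch. It is not the paper's formulation, which is not given in this abstract. The function names, the `inlier_thresh` and `min_conf` parameters, and the hard confidence cutoff are all hypothetical choices made for illustration. Each inlier contributes its confidence regardless of its exact residual (one possible reading of enhancement 1), and correspondences below `min_conf` are skipped (one possible reading of enhancement 2).

```python
from math import dist
from typing import Sequence, Tuple

Point = Tuple[float, float, float]
Rotation = Sequence[Sequence[float]]  # 3x3 matrix, row-major


def apply_rigid(R: Rotation, t: Point, p: Point) -> Point:
    """Apply the rigid transform p -> R p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def weighted_inlier_score(
    src: Sequence[Point],
    dst: Sequence[Point],
    conf: Sequence[float],
    R: Rotation,
    t: Point,
    inlier_thresh: float,
    min_conf: float = 0.0,
) -> float:
    """Confidence-weighted inlier count for one hypothesis (R, t).

    Assumptions (not taken from the paper):
      * an inlier adds its confidence to the score, independent of how
        small its residual is;
      * correspondences with confidence below `min_conf` are ignored so
        that many weak matches cannot add up to a high score.
    """
    score = 0.0
    for p, q, c in zip(src, dst, conf):
        if c < min_conf:
            continue  # skip low-confidence correspondences
        if dist(apply_rigid(R, t, p), q) < inlier_thresh:
            score += c  # contribution depends on confidence only
    return score


# Toy data: two confident correspondences agree with the identity
# transform; one low-confidence correspondence agrees with a shifted one.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
src = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
dst = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 5.0, 0.0)]
conf = [0.9, 0.8, 0.1]

good = weighted_inlier_score(src, dst, conf, IDENTITY, (0.0, 0.0, 0.0), 0.5)
bad = weighted_inlier_score(src, dst, conf, IDENTITY, (0.0, 4.0, 0.0), 0.5)
```

In this toy case the hypothesis that aligns the two high-confidence correspondences scores higher than the one that aligns only the low-confidence correspondence, which is the behaviour the abstract describes for a good hypothesis.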

Key words: computer vision; point cloud registration; RANSAC; hypothesis evaluation

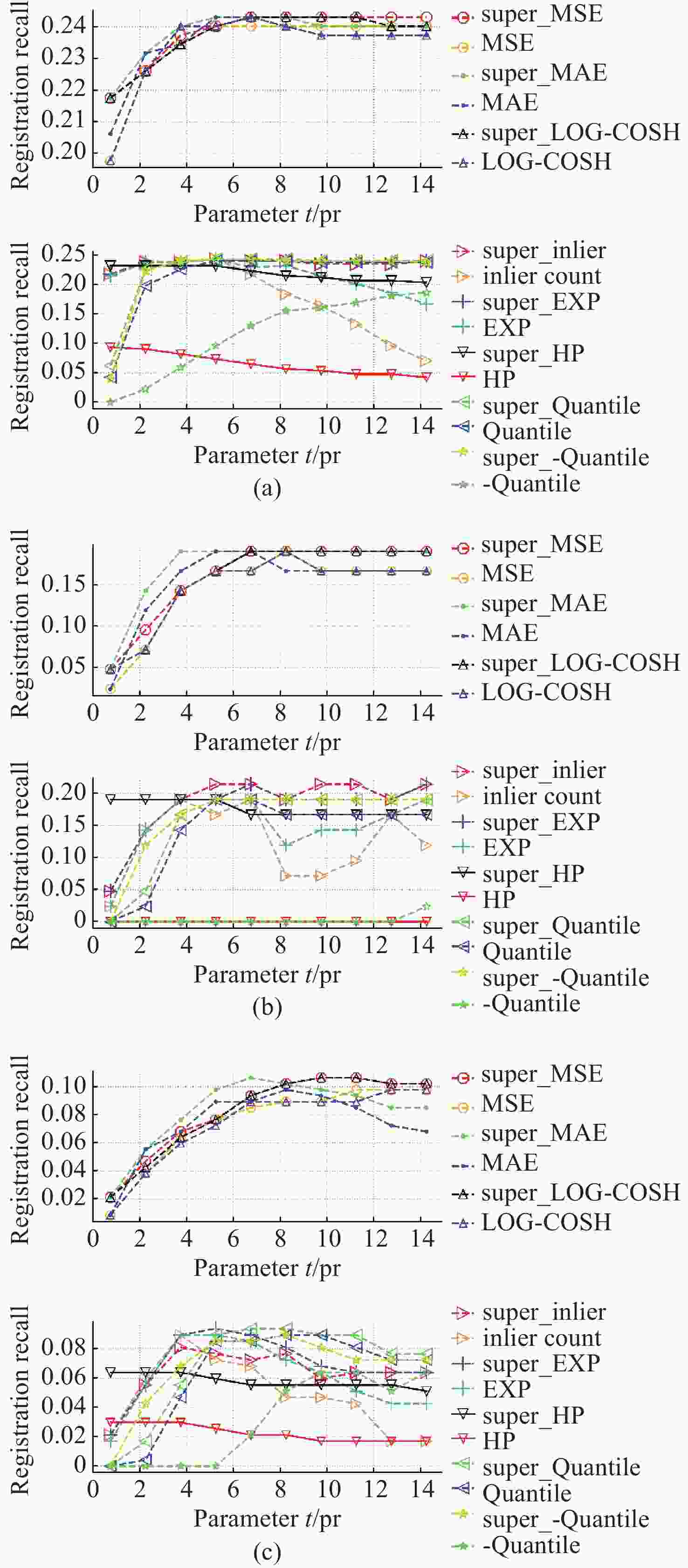

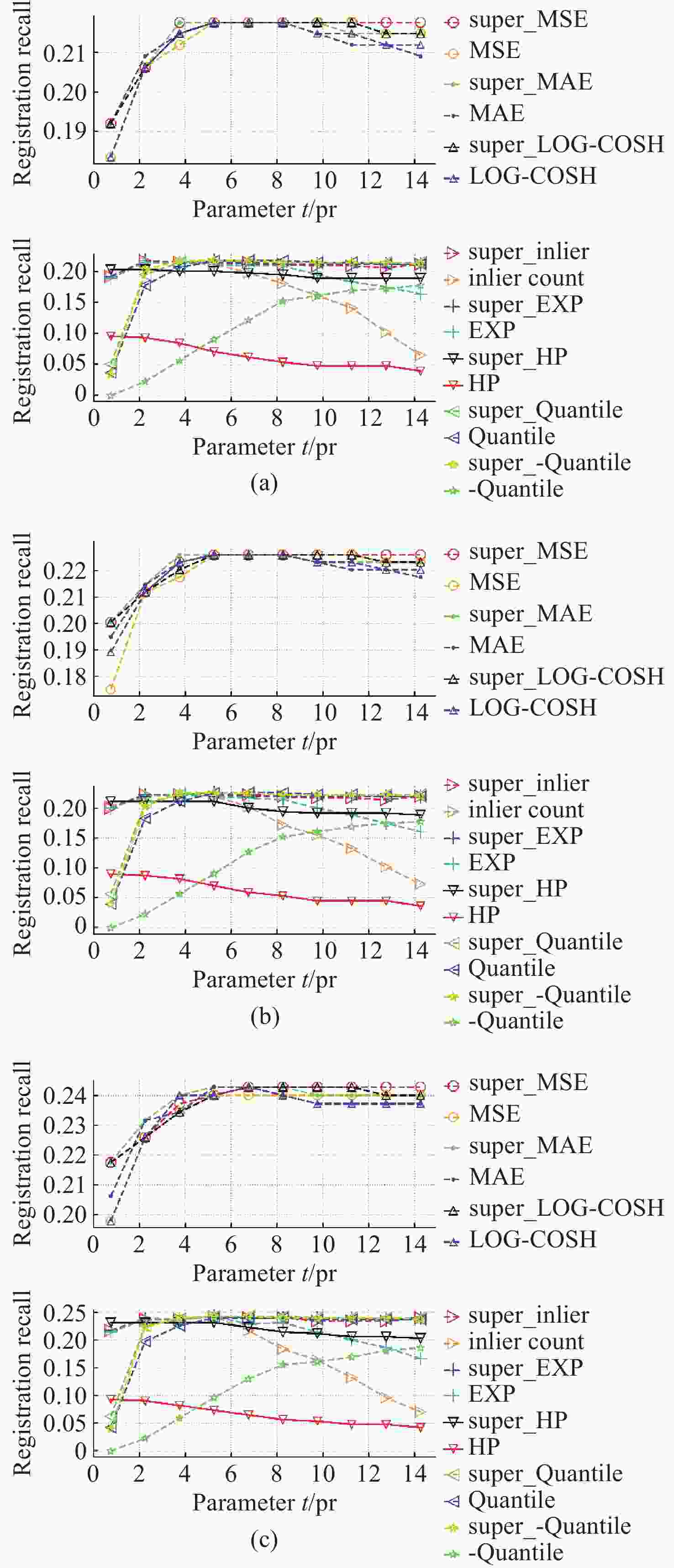

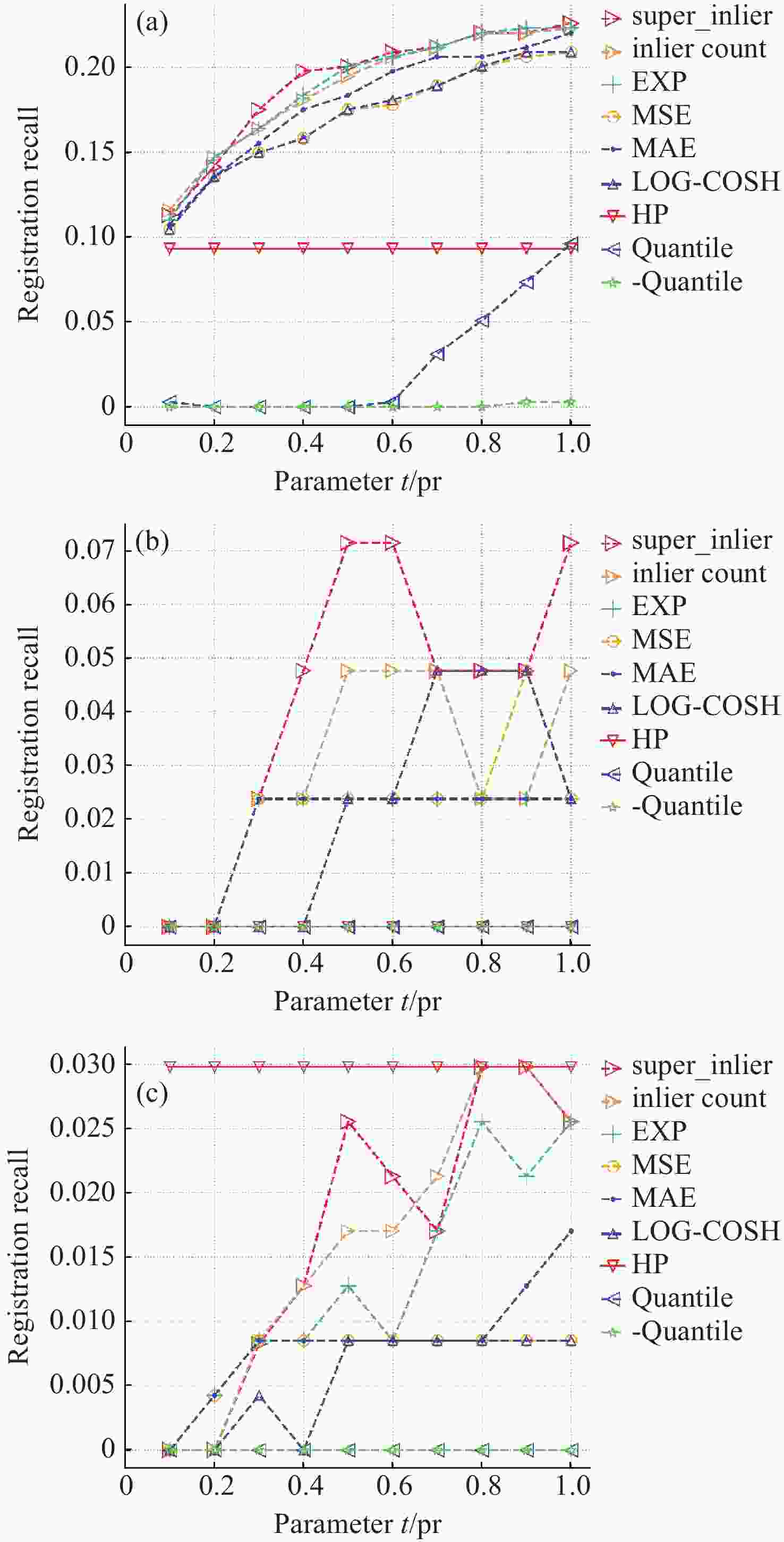

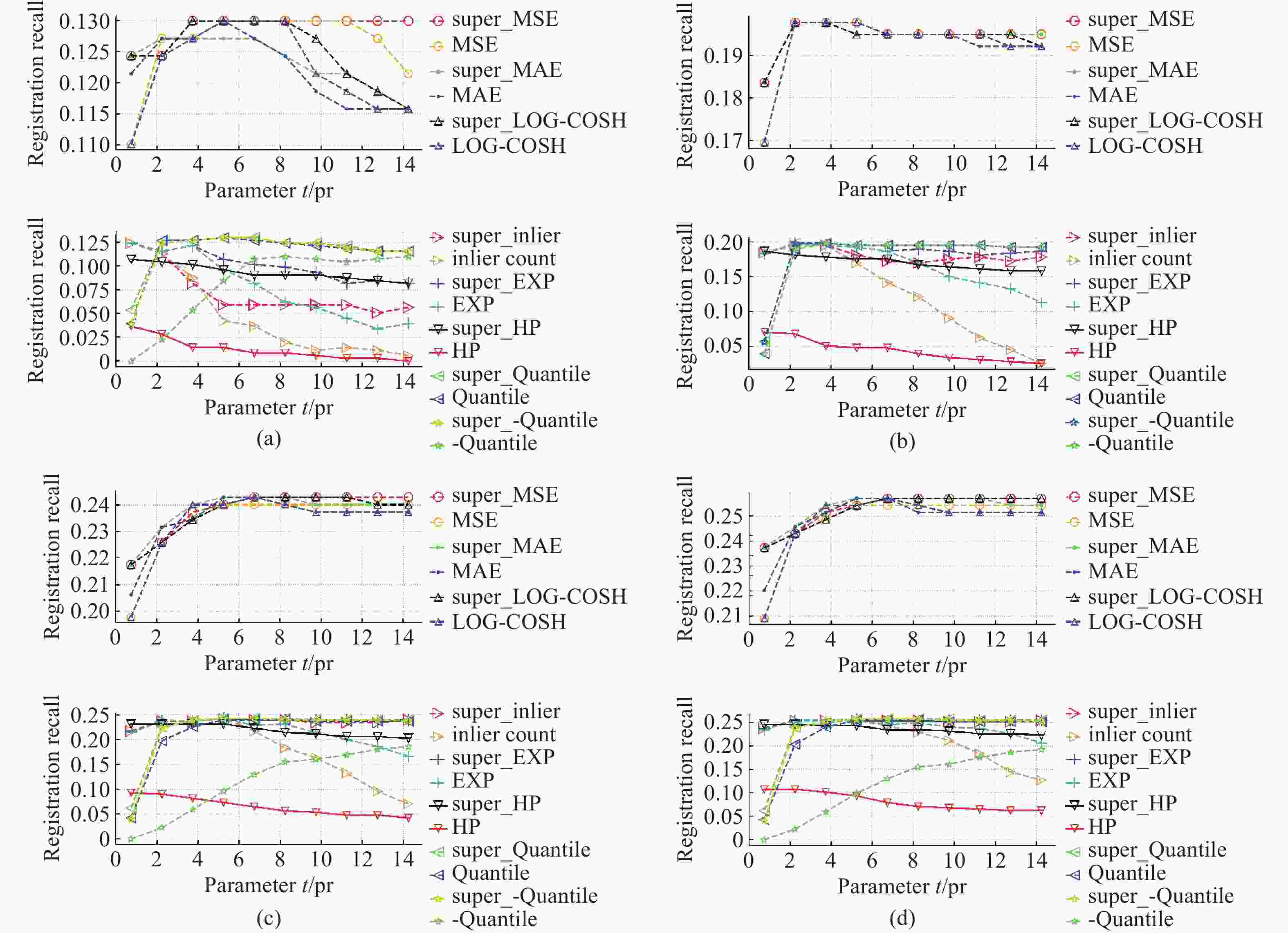

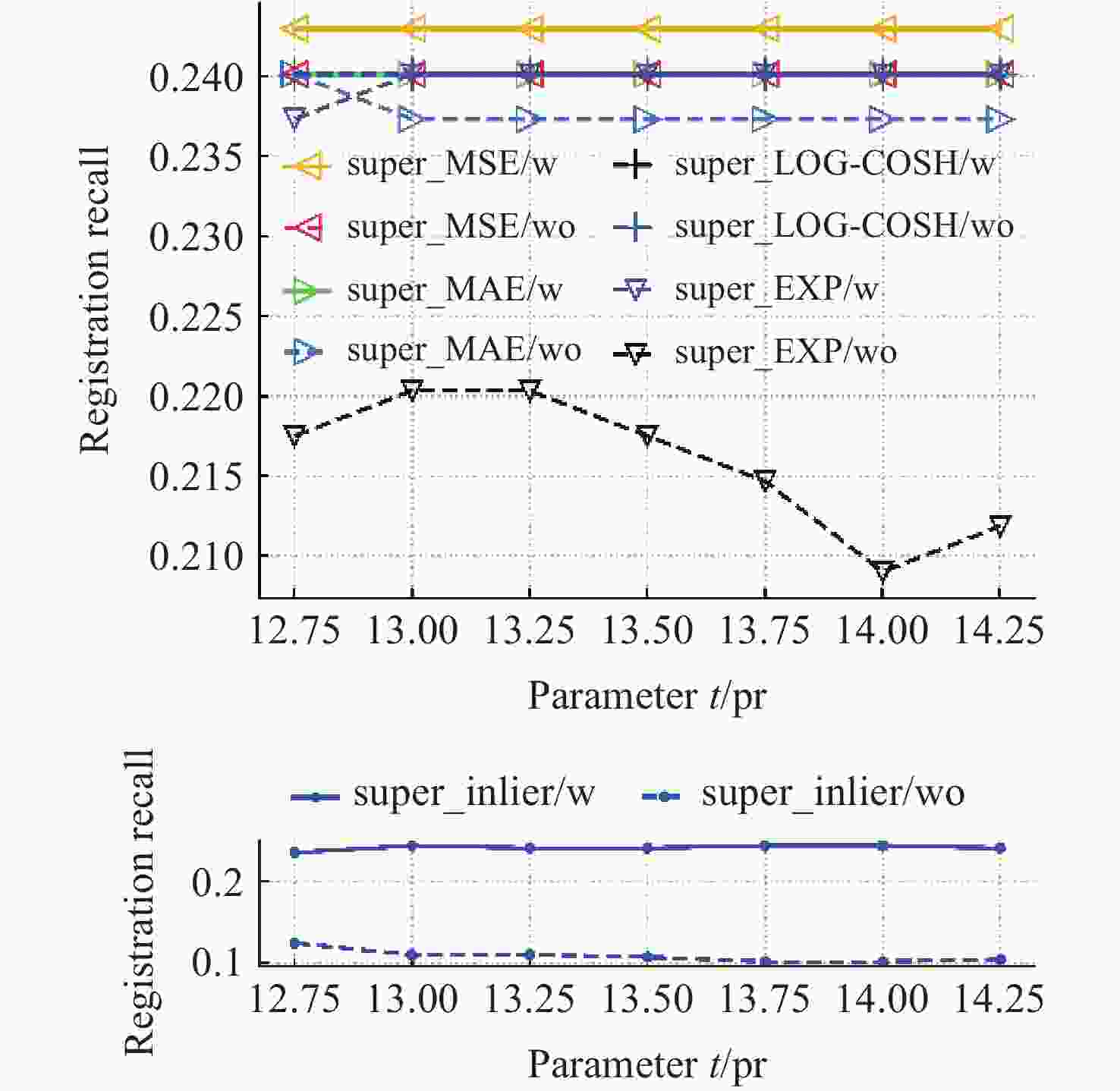

Figure 8. Robustness of the hypothesis metrics to parameter $t$ when varying the RMSE threshold ${d_{rmse}}$. (a) ${d_{rmse}} = 1\,pr$; (b) ${d_{rmse}} = 2\,pr$; (c) ${d_{rmse}} = 3\,pr$; (d) ${d_{rmse}} = 4\,pr$

Table 1. Properties of experimental datasets

| Dataset | Nuisances | Data modality | Application scenario |
| --- | --- | --- | --- |
| U3M | Self-occlusion, limited overlap | LiDAR | Registration |
| BoD5 | Occlusion, holes, noise | Kinect | Object recognition |
| BMR | Self-occlusion, limited overlap, holes, noise | Kinect | Registration |

Table 2. Average time consumption of RANSAC estimators with different hypothesis evaluation metrics for filtering point cloud registration hypotheses on different datasets (unit: ms)

| Hypothesis metric | U3M | BoD5 | BMR | Average time improvement |
| --- | --- | --- | --- | --- |
| MAE | 3755.54 | 845.87 | 955.29 | 3.85% |
| super_MAE | 3616.54 | 801.45 | 920.35 | |
| MSE | 3848.48 | 866.12 | 967.82 | 3.85% |
| super_MSE | 3655.23 | 820.00 | 921.72 | |
| LOG-COSH | 3890.83 | 877.98 | 1015.79 | 6.73% |
| super_LOG-COSH | 3723.58 | 809.63 | 933.32 | |
| EXP | 4084.22 | 913.05 | 1057.97 | 10.79% |
| super_EXP | 3779.09 | 817.13 | 905.55 | |
| Quantile | 3990.10 | 877.22 | 1058.01 | 7.32% |
| super_Quantile | 3727.21 | 865.53 | 909.54 | |
| -Quantile | 4058.26 | 913.14 | 1003.27 | 5.85% |
| super_-Quantile | 3785.36 | 886.88 | 923.48 | |
| inlier_count | 3600.43 | 830.08 | 892.94 | 2.10% |
| super_inlier | 3584.74 | 785.23 | 888.72 | |
| HP | 3855.69 | 845.95 | 970.22 | 1.67% |
| super_HP | 3817.90 | 840.45 | 937.29 | |
| OP | 1840270.00 | 445224.00 | 145848.00 | |
| PC_Dist | 1814150.00 | 438667.00 | 146138.00 | |

