Abstract:

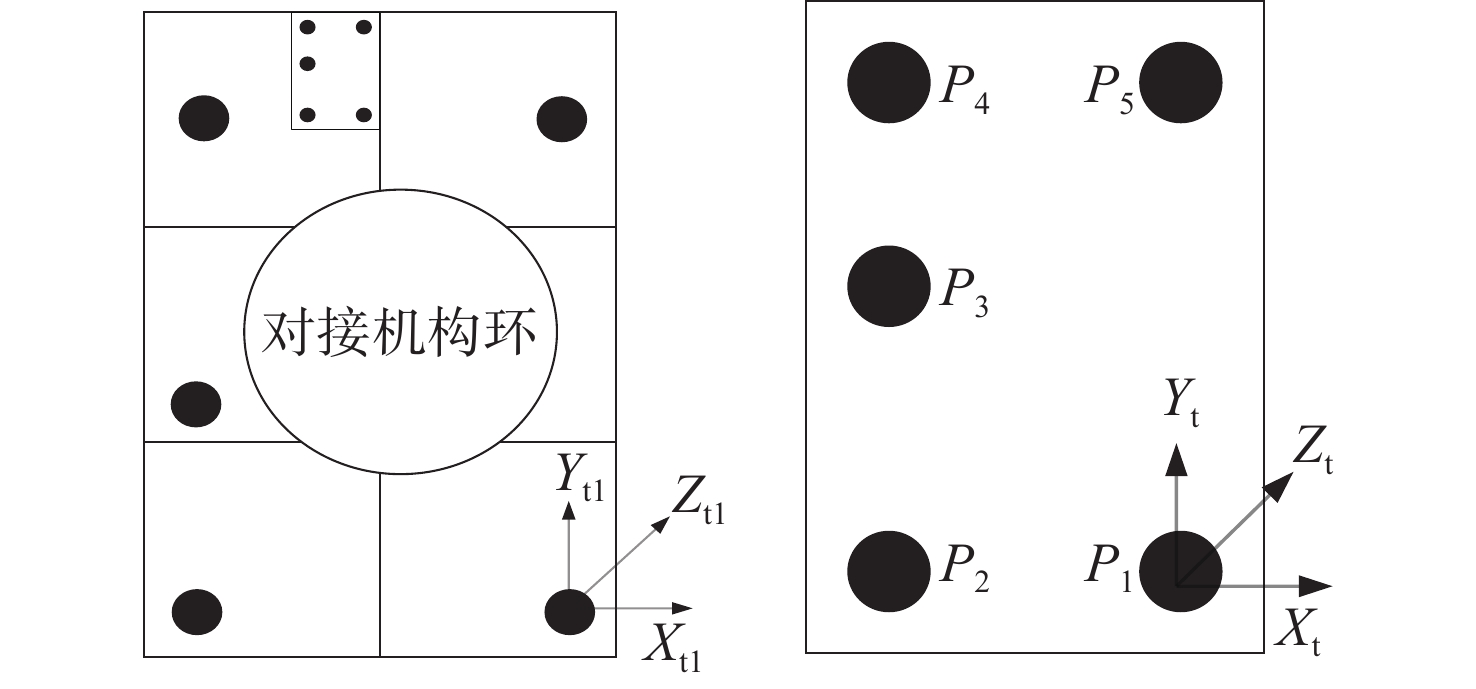

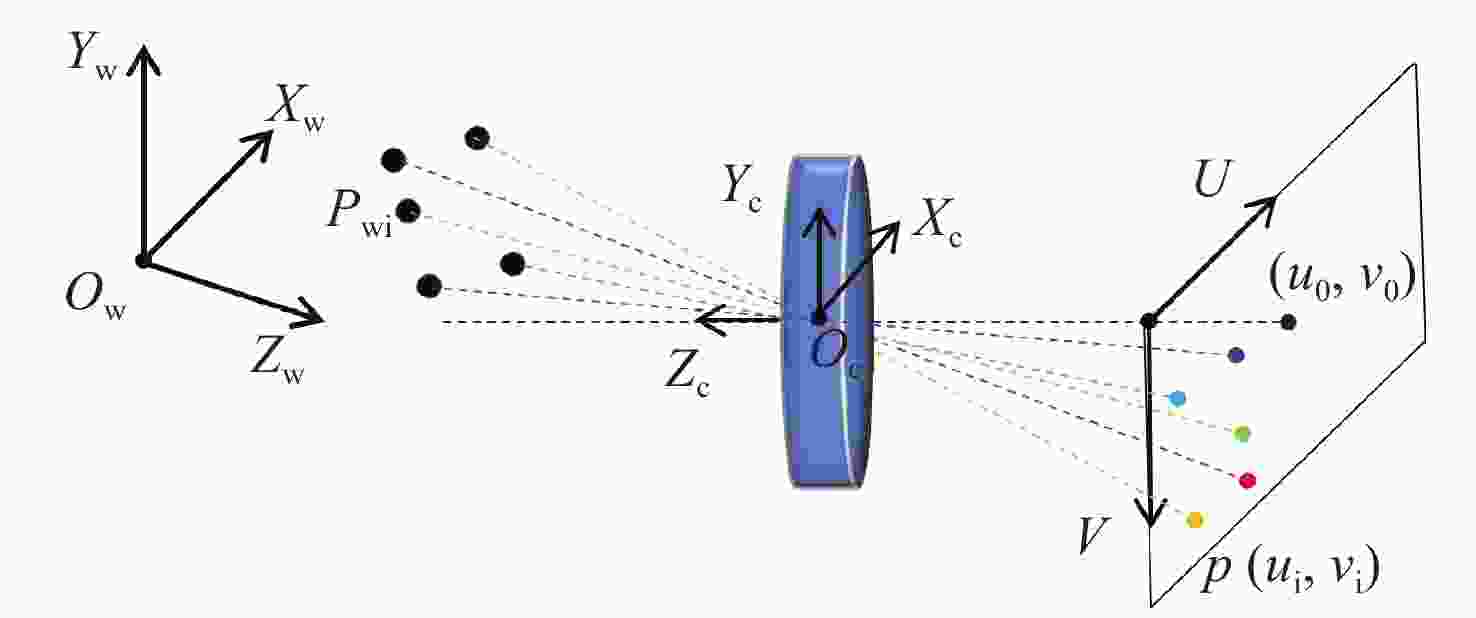

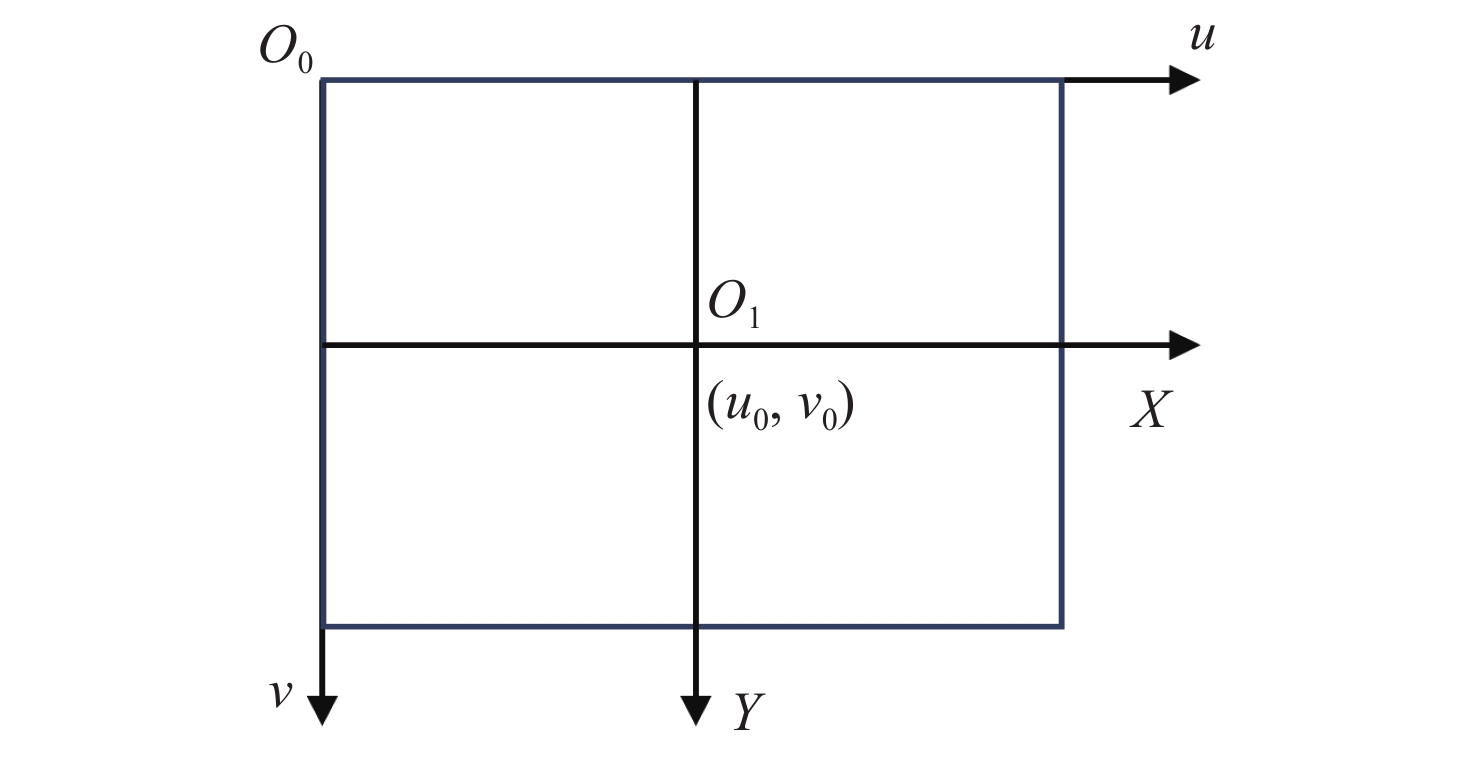

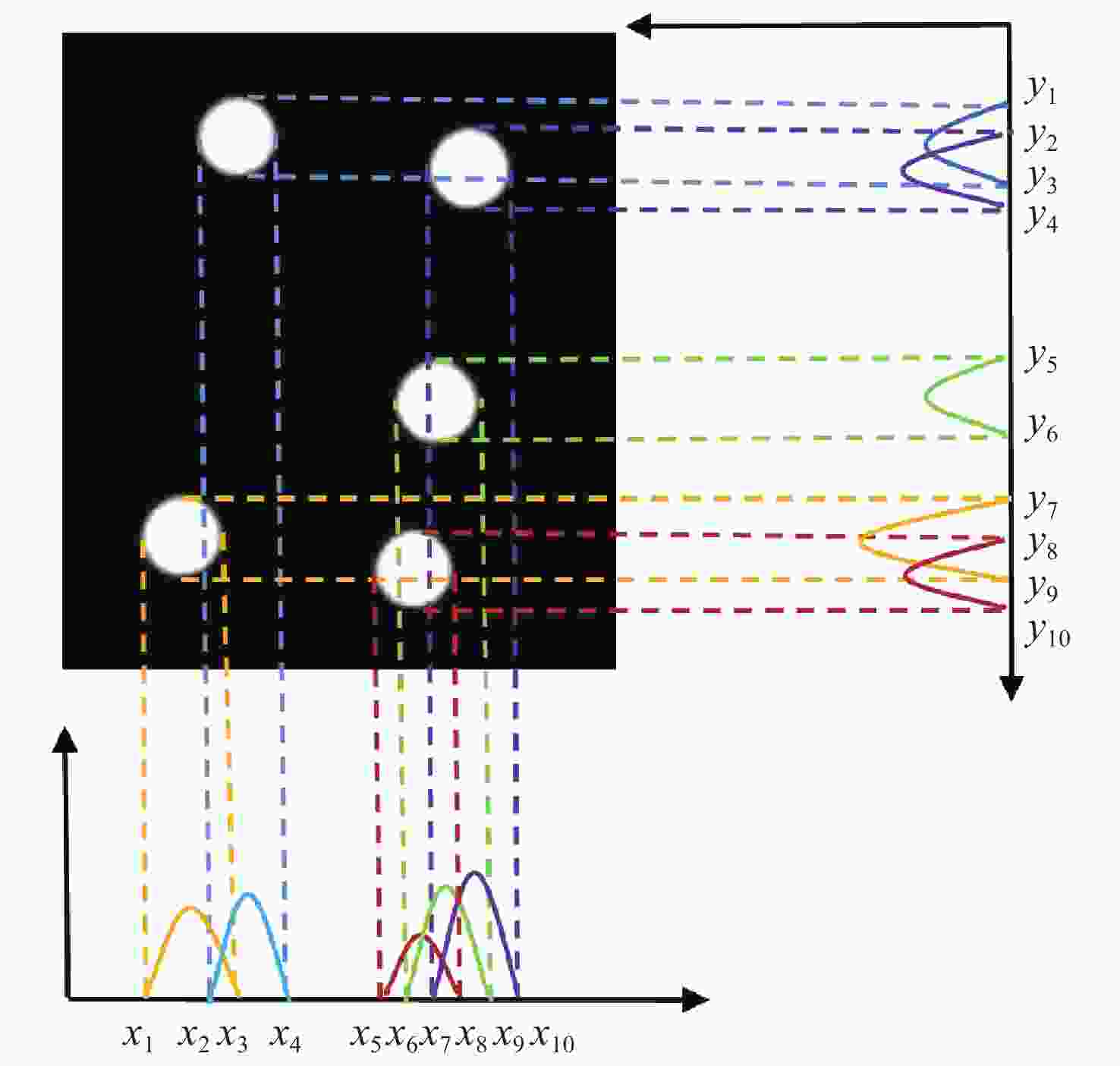

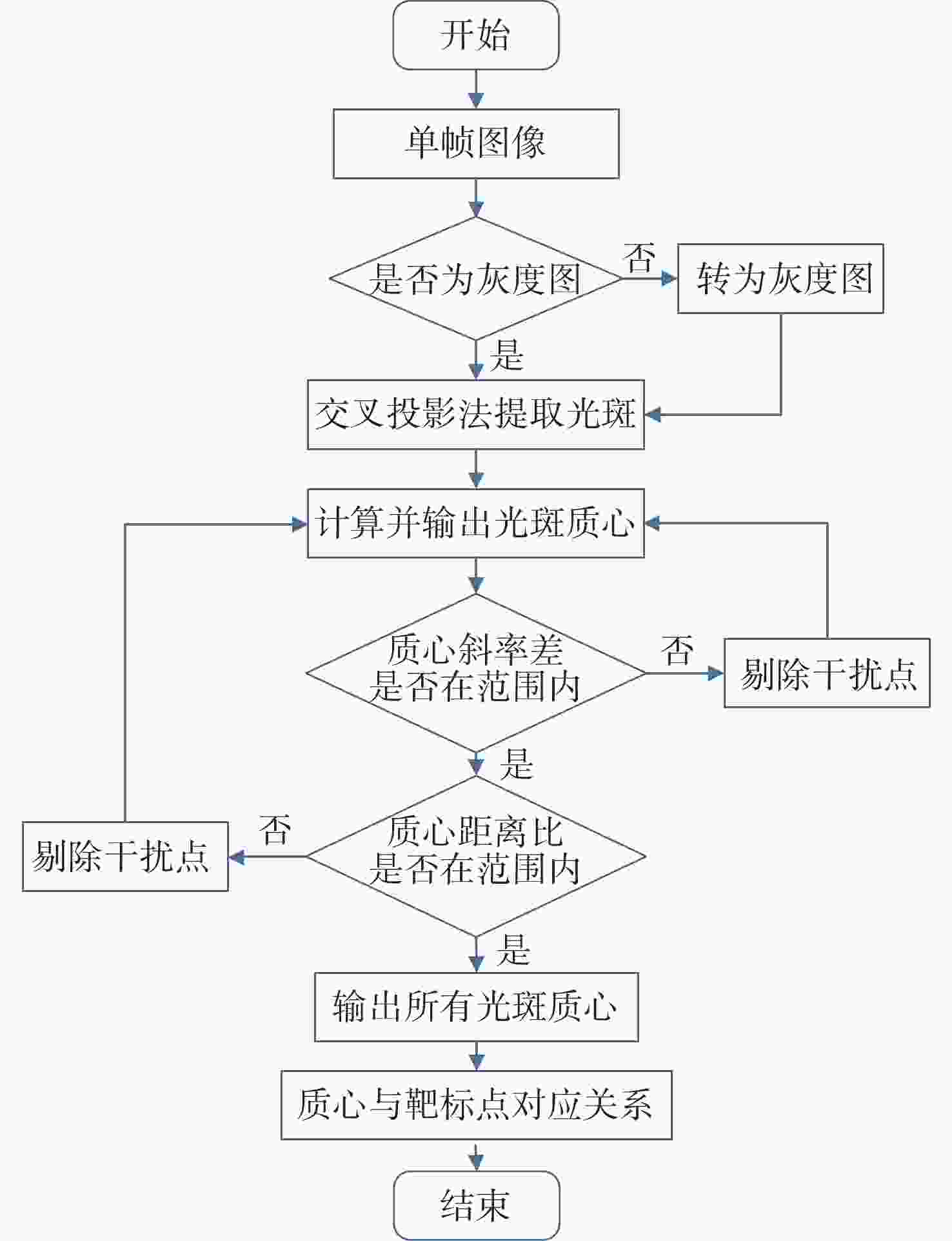

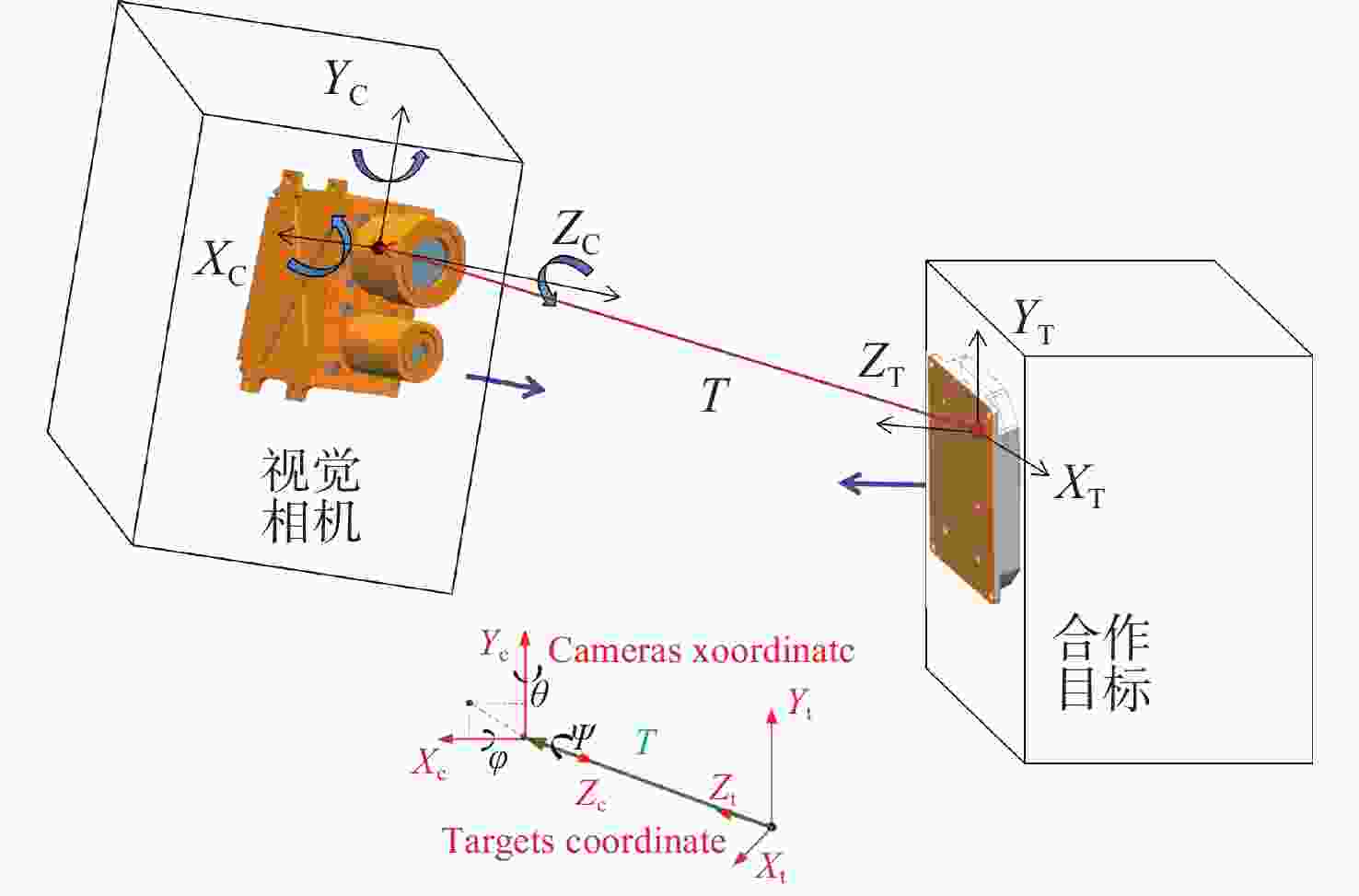

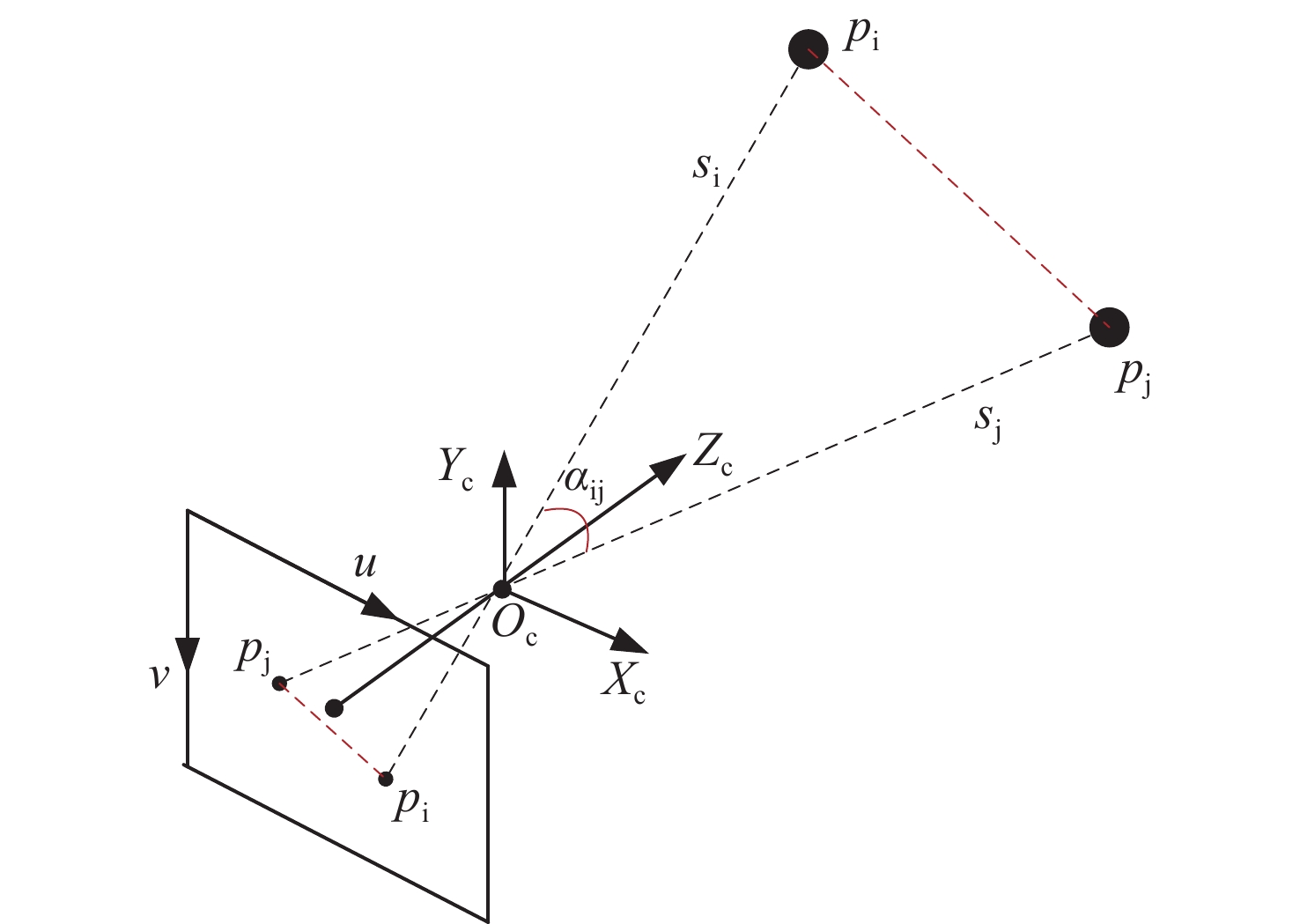

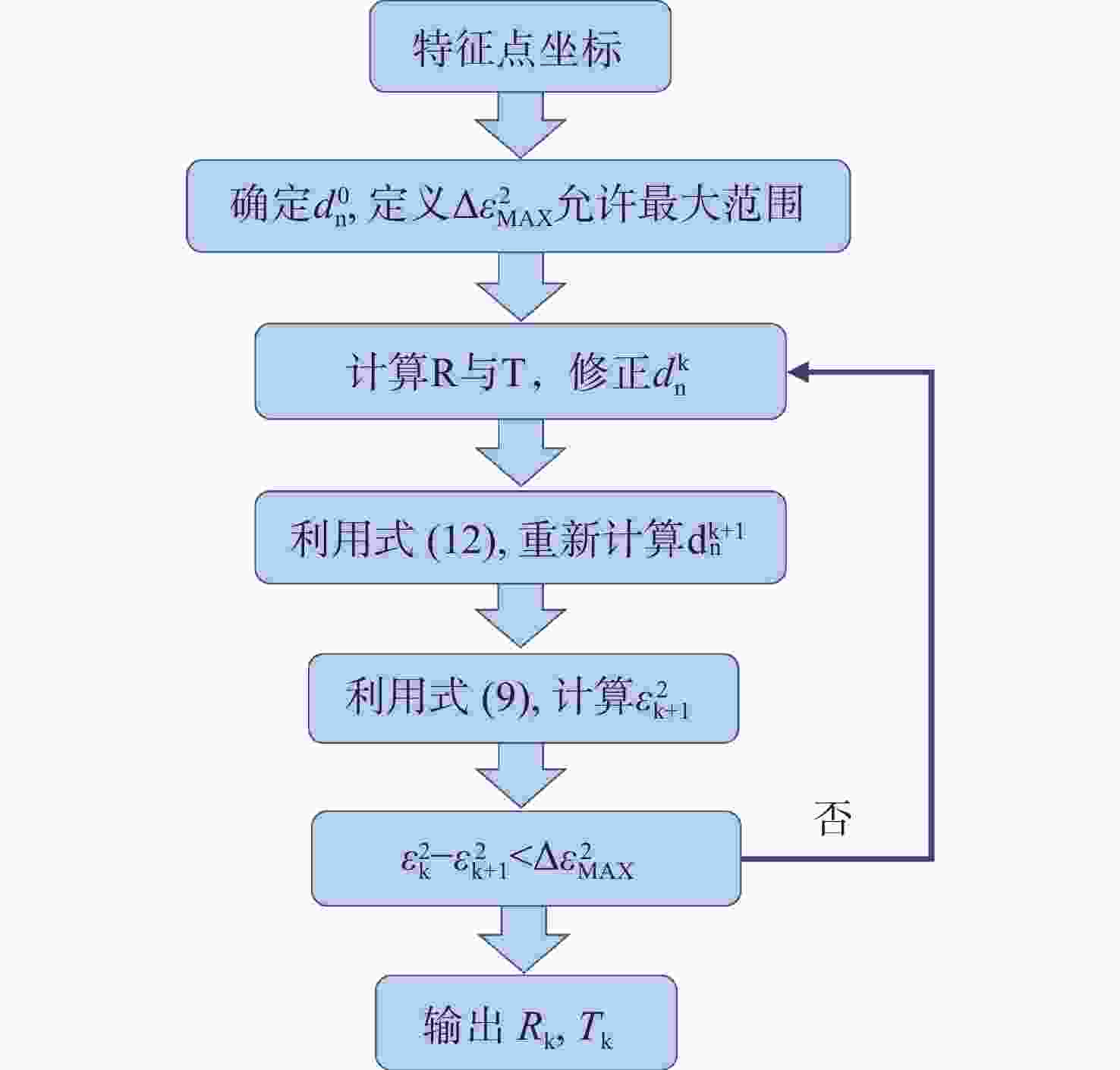

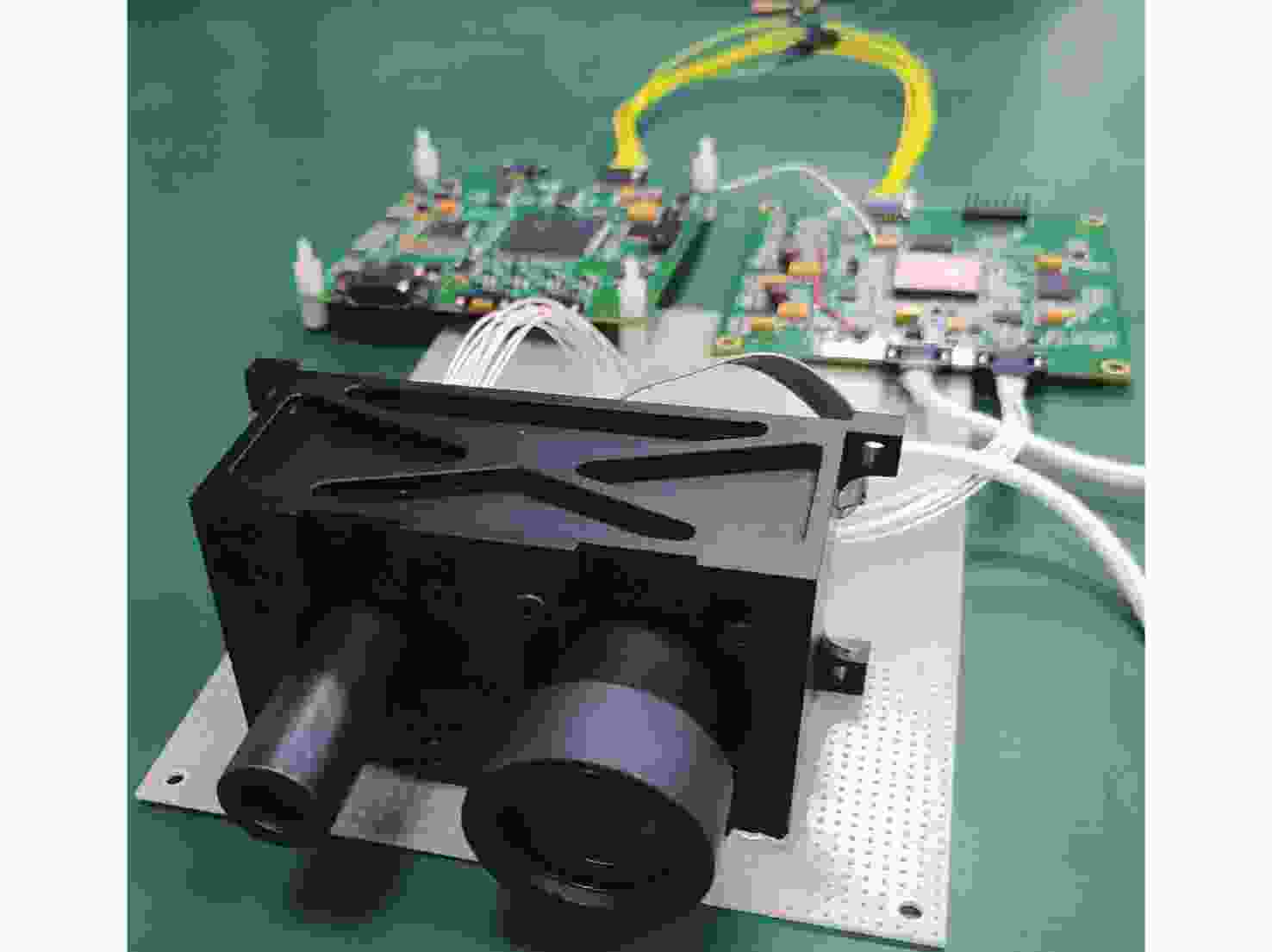

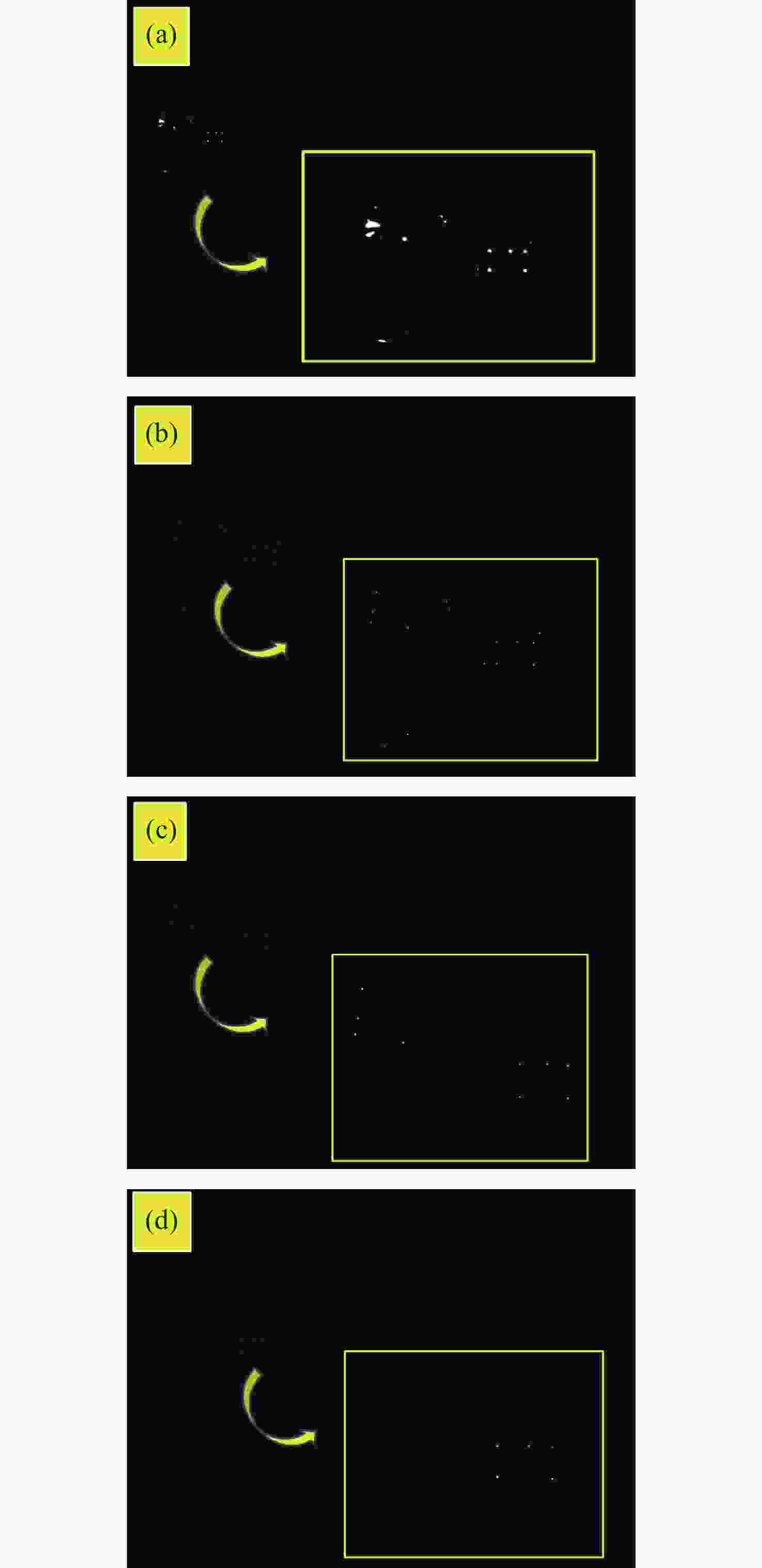

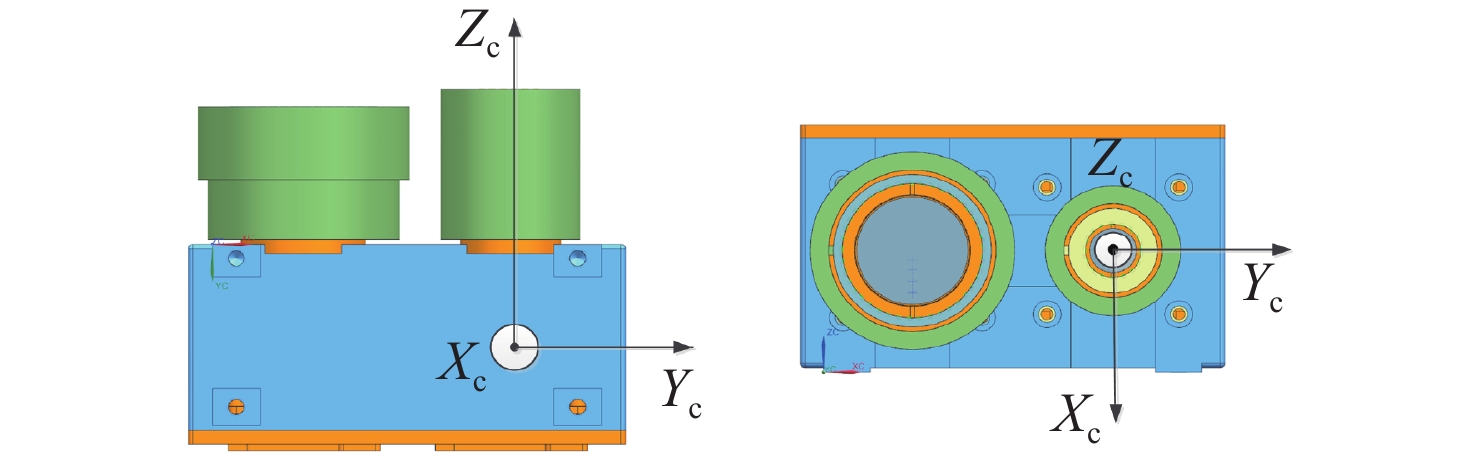

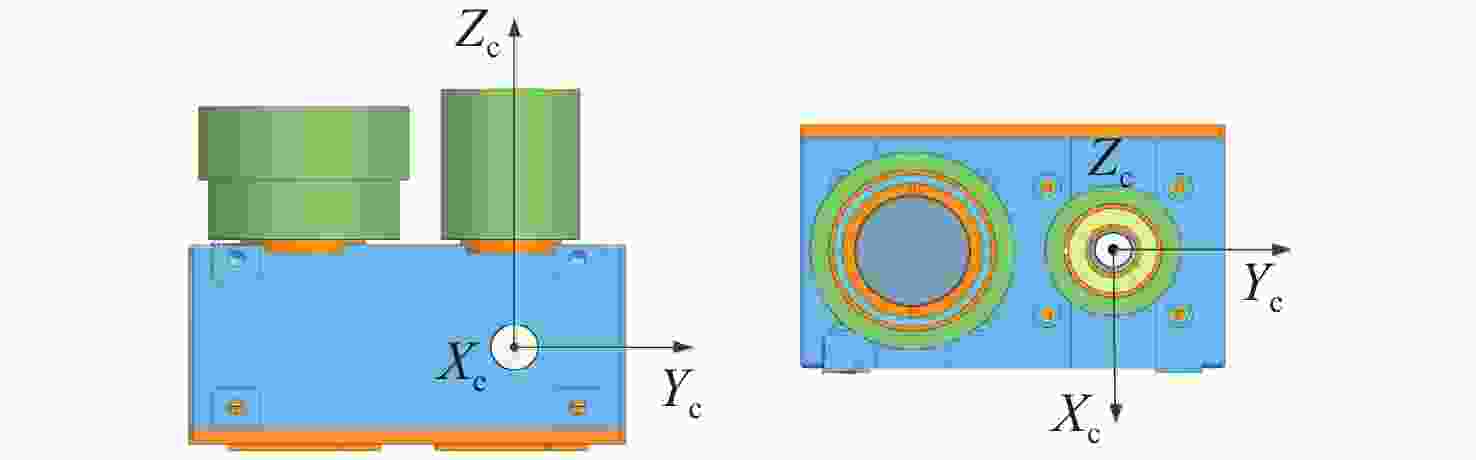

To improve the stability and accuracy of relative measurement for ultra-close-range spacecraft docking, this paper proposes a relative pose measurement system based on a single (monocular) camera and a cooperative target, which measures the relative position and attitude between two CubeSats. A vision camera on the chaser satellite and a cooperative LED target on the target satellite are designed together, and relative pose is measured at inter-satellite distances from 50 m down to 0.4 m. First, far-field and near-field LED targets are designed so that the camera and target work together and the target is imaged clearly over the whole range from 50 m to 0.4 m. Second, a multi-scale centroid extraction algorithm matched to the target design uses slope-consistency constraints and spacing-ratio screening to extract the feature points stably under complex illumination. Finally, an initial estimate derived from the geometric constraints of the target is used to solve the pose of the target satellite relative to the chaser, and nonlinear optimization then refines the pose iteratively to reduce the measurement error. Experiments show that measurement accuracy improves as the distance decreases: at 0.4 m, the position accuracy is better than 1 mm and the attitude accuracy is better than 0.2°, which meets the requirements of ultra-close-range docking. The scheme provides high-accuracy, high-stability technical support for relative pose measurement of on-orbit space targets and has significant engineering value.
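The feature-extraction step named in the abstract (centroid extraction followed by slope-consistency and spacing-ratio screening) can be illustrated with a minimal sketch. This is not the authors' algorithm: the multi-scale part is omitted, and the function names, thresholds, and tolerances (`weighted_centroid`, `slope_consistent`, `spacing_ratio_ok`, `screen_triplets`, `tol`, `rel_tol`) are hypothetical choices made only for illustration. It also assumes that three LEDs lie on one line of the target with a known spacing ratio, and that the tolerance absorbs the small change in that ratio caused by perspective.

```python
from itertools import permutations
from math import hypot


def weighted_centroid(patch, threshold=0.0):
    """Return the intensity-weighted centroid (x, y) of a 2D gray-level patch.

    Pixels at or below `threshold` are ignored (simple background rejection).
    """
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(patch):
        for x, value in enumerate(row):
            weight = value - threshold
            if weight > 0:
                total += weight
                sum_x += weight * x
                sum_y += weight * y
    if total == 0:
        raise ValueError("no pixel above threshold")
    return sum_x / total, sum_y / total


def slope_consistent(p, q, r, tol=0.02):
    """True if q lies between p and r on (nearly) one straight line.

    The directions p->q and q->r are compared with a normalized cross
    product, which matches a slope comparison but also handles vertical lines.
    """
    ax, ay = q[0] - p[0], q[1] - p[1]
    bx, by = r[0] - q[0], r[1] - q[1]
    norm = hypot(ax, ay) * hypot(bx, by)
    if norm == 0:
        return False
    cross = (ax * by - ay * bx) / norm
    dot = (ax * bx + ay * by) / norm
    return abs(cross) <= tol and dot > 0


def spacing_ratio_ok(p, q, r, expected_ratio, rel_tol=0.1):
    """True if |pq| / |qr| is within rel_tol of the designed spacing ratio."""
    d_pq = hypot(q[0] - p[0], q[1] - p[1])
    d_qr = hypot(r[0] - q[0], r[1] - q[1])
    if d_qr == 0:
        return False
    return abs(d_pq / d_qr - expected_ratio) <= rel_tol * expected_ratio


def screen_triplets(centroids, expected_ratio):
    """Keep ordered centroid triplets that pass both screening tests."""
    return [
        triplet
        for triplet in permutations(centroids, 3)
        if slope_consistent(*triplet)
        and spacing_ratio_ok(*triplet, expected_ratio)
    ]
```

Called with candidate spots such as `[(0, 0), (10, 0), (15, 0), (7, 9)]` and `expected_ratio=2.0`, `screen_triplets` keeps only the ordered triplet whose points are collinear with a 2:1 spacing, so an isolated stray spot such as a specular reflection is rejected. Testing every ordered triplet is cubic in the number of candidates, which is acceptable only for the handful of bright spots a sparse LED target produces.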

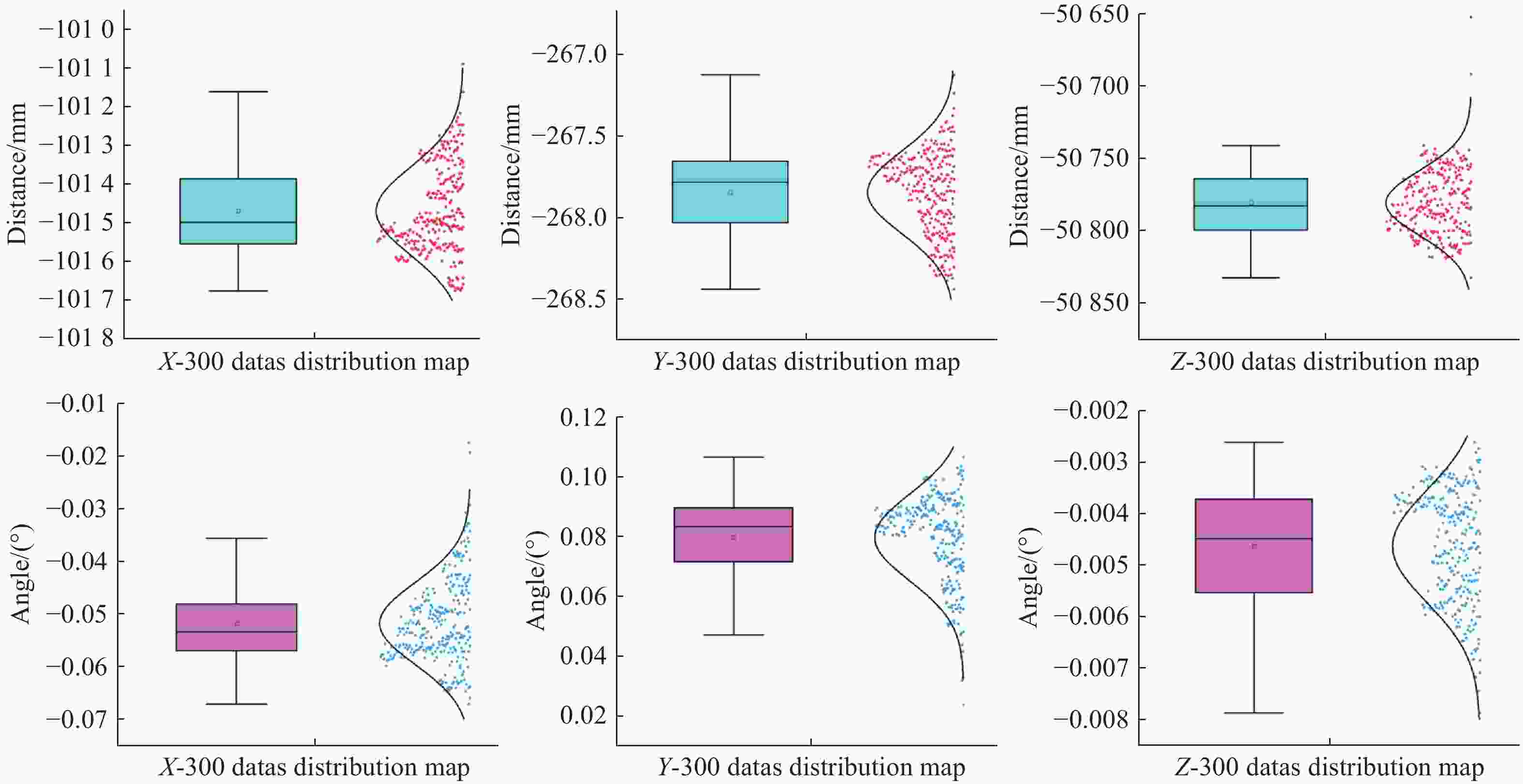

Table 1. Relative position and attitude measurement results at 50 m range

| Parameter | Std | Mean | Max | Min |
| --- | --- | --- | --- | --- |
| T-x (mm) | 1.2015 | −1013.5044 | −1010.9075 | −1016.7402 |
| T-y (mm) | 3.9683 | −276.5156 | −267.5542 | −285.0395 |
| T-z (mm) | 52.8736 | −50780.0293 | −50652.7305 | −50832.2031 |
| $\varphi$ (°) | 0.081 | −0.05188 | −0.0176 | −0.0672 |
| $\theta$ (°) | 0.0154 | 0.07840 | 0.1064 | 0.0238 |
| $\psi$ (°) | 0.0010 | −0.0046 | −0.0027 | −0.0079 |

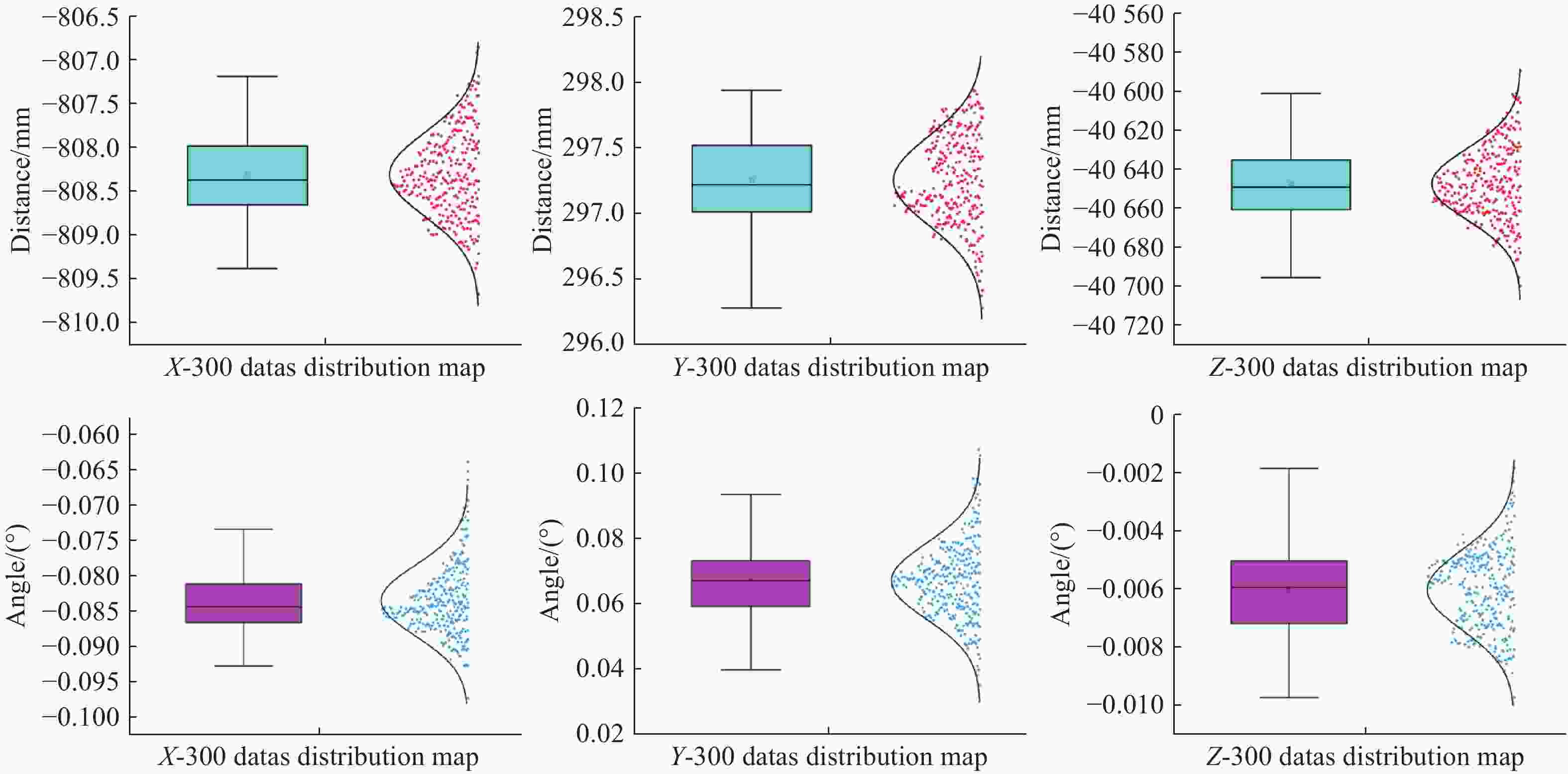

Table 2. Relative position and attitude measurement results at 40 m range

| Parameter | Std | Mean | Max | Min |
| --- | --- | --- | --- | --- |
| T-x (mm) | 0.4432 | −808.2581 | −806.8610 | −809.6720 |
| T-y (mm) | 0.2770 | 297.0678 | 297.9350 | 296.2810 |
| T-z (mm) | 19.9998 | −40649.4954 | −40590.0000 | −40699.5000 |
| $\varphi$ (°) | 0.0050 | −0.0845 | −0.0651 | −0.0972 |
| $\theta$ (°) | 0.0100 | 0.0680 | 0.1074 | 0.0351 |
| $\psi$ (°) | 0.0015 | −0.0055 | −0.0019 | −0.0097 |

Table 3. Relative position and attitude measurement results at 20 m range

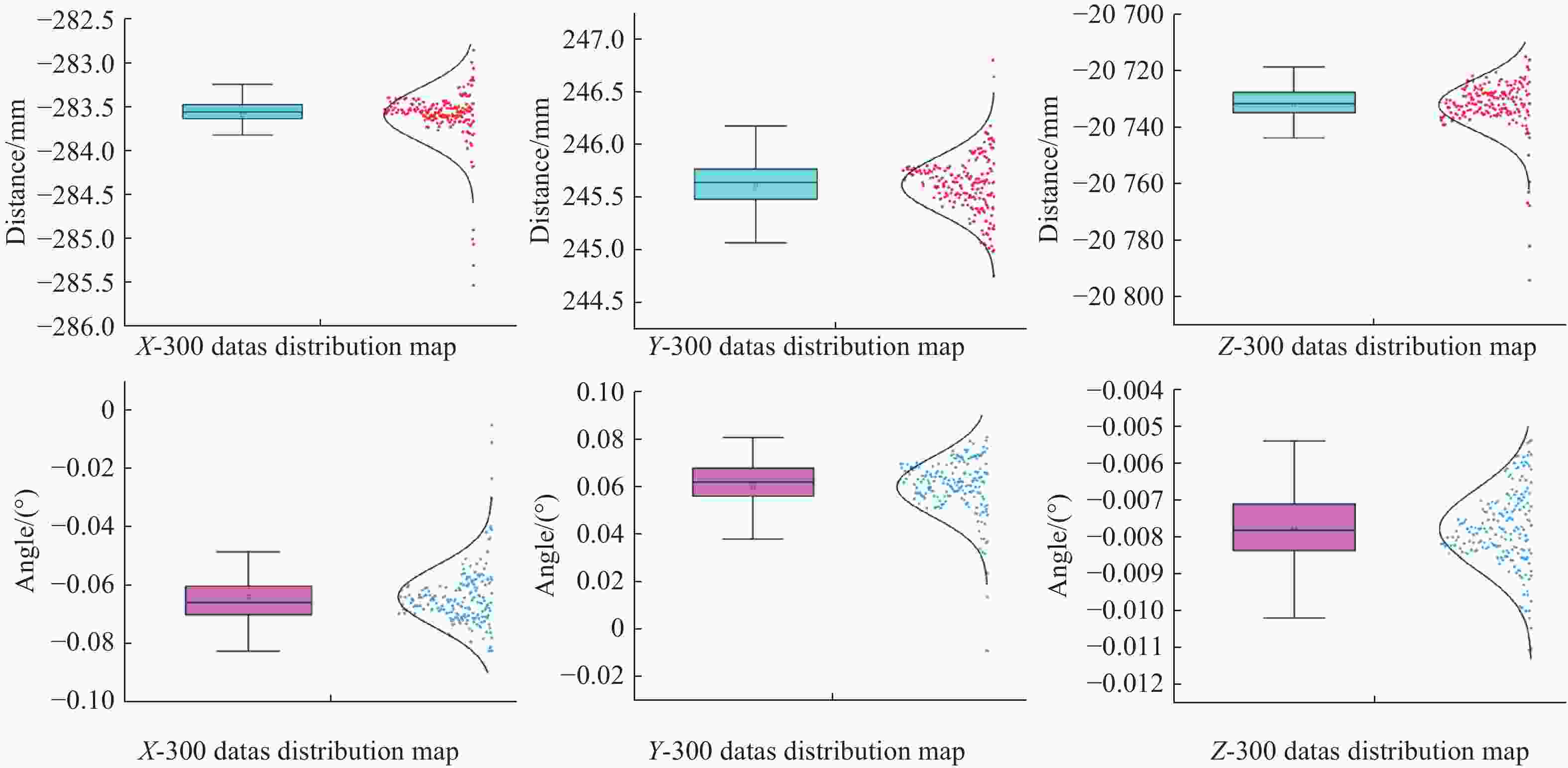

| Parameter | Std | Mean | Max | Min |
| --- | --- | --- | --- | --- |
| T-x (mm) | 0.3180 | −283.5990 | −283.2110 | −285.5420 |
| T-y (mm) | 0.2050 | 245.5650 | 246.7930 | 244.7530 |
| T-z (mm) | 4.3640 | −20730.6000 | −20715.2000 | −20742.5000 |
| $\varphi$ (°) | 0.0107 | −0.0645 | −0.0052 | −0.0827 |
| $\theta$ (°) | 0.0108 | 0.0595 | 0.0807 | −0.0091 |
| $\psi$ (°) | 0.0011 | −0.0079 | −0.0054 | −0.0111 |

Table 4. Relative position and attitude measurement results at 10 m range

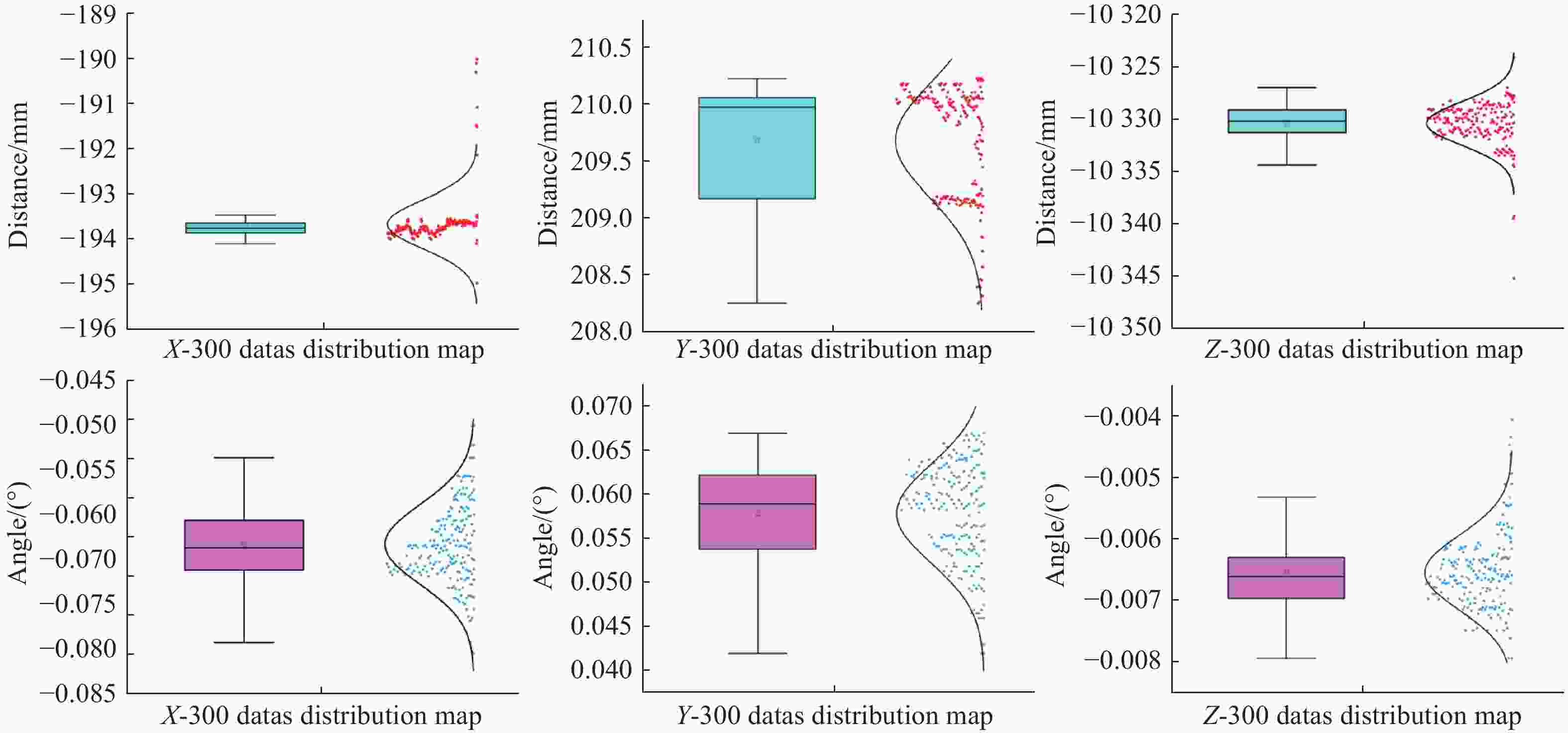

| Parameter | Std | Mean | Max | Min |
| --- | --- | --- | --- | --- |
| T-x (mm) | 0.7960 | −193.6940 | −190.0220 | −194.9690 |
| T-y (mm) | 0.6060 | 209.2280 | 210.2260 | 208.2550 |
| T-z (mm) | 4.9600 | −10329.8520 | −10324.0660 | −10345.1570 |
| $\varphi$ (°) | 0.0051 | −0.0665 | −0.0508 | −0.0798 |
| $\theta$ (°) | 0.0055 | 0.0584 | 0.0670 | −0.0420 |
| $\psi$ (°) | 0.0006 | −0.0065 | −0.0041 | −0.0079 |

Table 5. Relative position and attitude measurement results at 5.6 m range

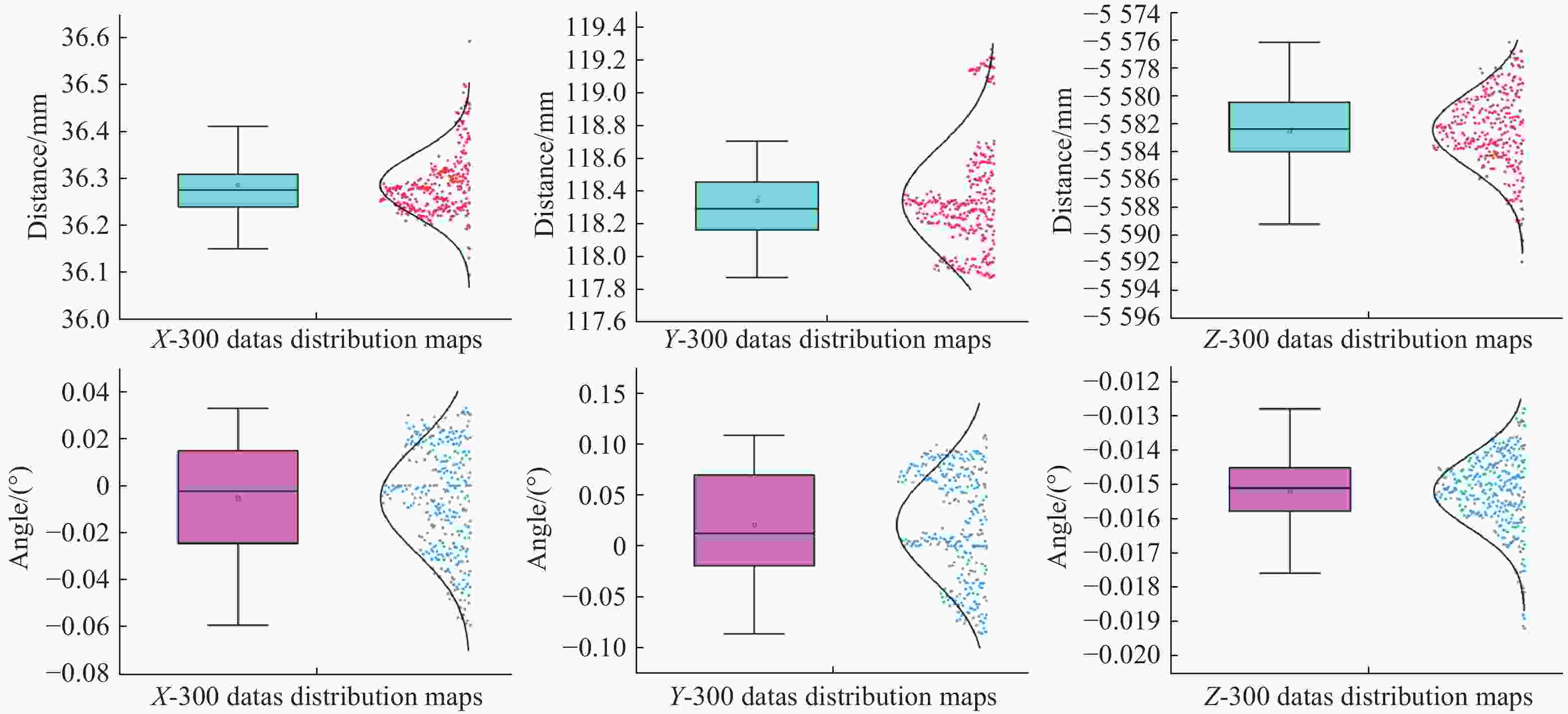

| Parameter | Std | Mean | Max | Min |
| --- | --- | --- | --- | --- |
| T-x (mm) | 0.1450 | 36.2800 | 36.5910 | 36.0940 |
| T-y (mm) | 0.9140 | 118.6200 | 119.2630 | 117.8860 |
| T-z (mm) | 1.6290 | −5583.1500 | −5576.1830 | −5591.8940 |
| $\varphi$ (°) | 0.0230 | −0.0080 | 0.0716 | −0.0676 |
| $\theta$ (°) | 0.0250 | −0.0020 | 0.1047 | −0.0843 |
| $\psi$ (°) | 0.0011 | −0.0149 | −0.0128 | −0.0192 |

Table 6. Relative position and attitude measurement results at 1.7 m range

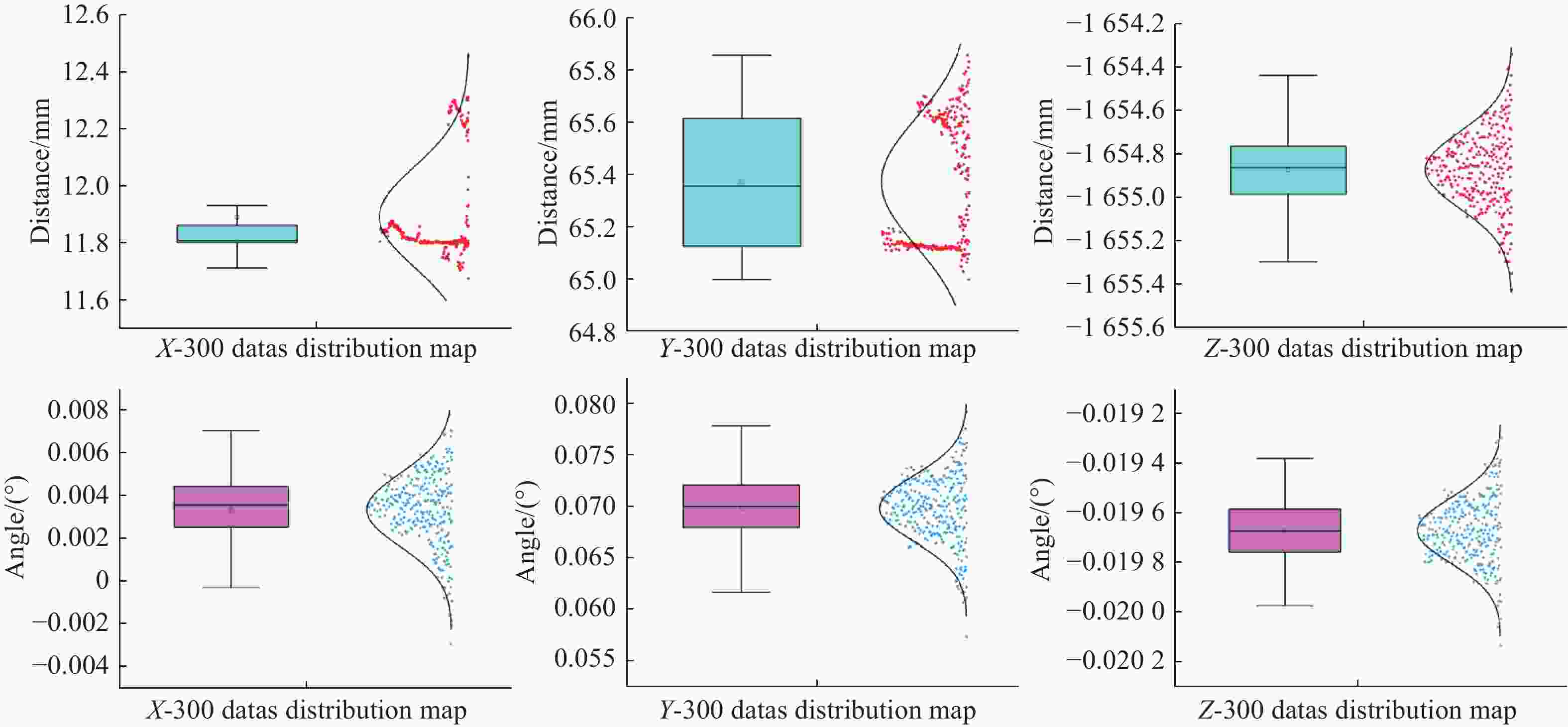

| Parameter | Std | Mean | Max | Min |
| --- | --- | --- | --- | --- |
| T-x (mm) | 0.2270 | 11.8730 | 12.4530 | 11.6760 |
| T-y (mm) | 0.2810 | 65.3370 | 65.7950 | 64.9990 |
| T-z (mm) | 0.1870 | −1654.8800 | −1654.3430 | −1655.4240 |
| $\varphi$ (°) | 0.0020 | −0.0032 | 0.0078 | −0.0029 |
| $\theta$ (°) | 0.0036 | −0.0691 | −0.0792 | −0.0574 |
| $\psi$ (°) | 0.0002 | −0.0197 | −0.0192 | −0.0201 |

Table 7. Relative position and attitude measurement results at 0.4 m range

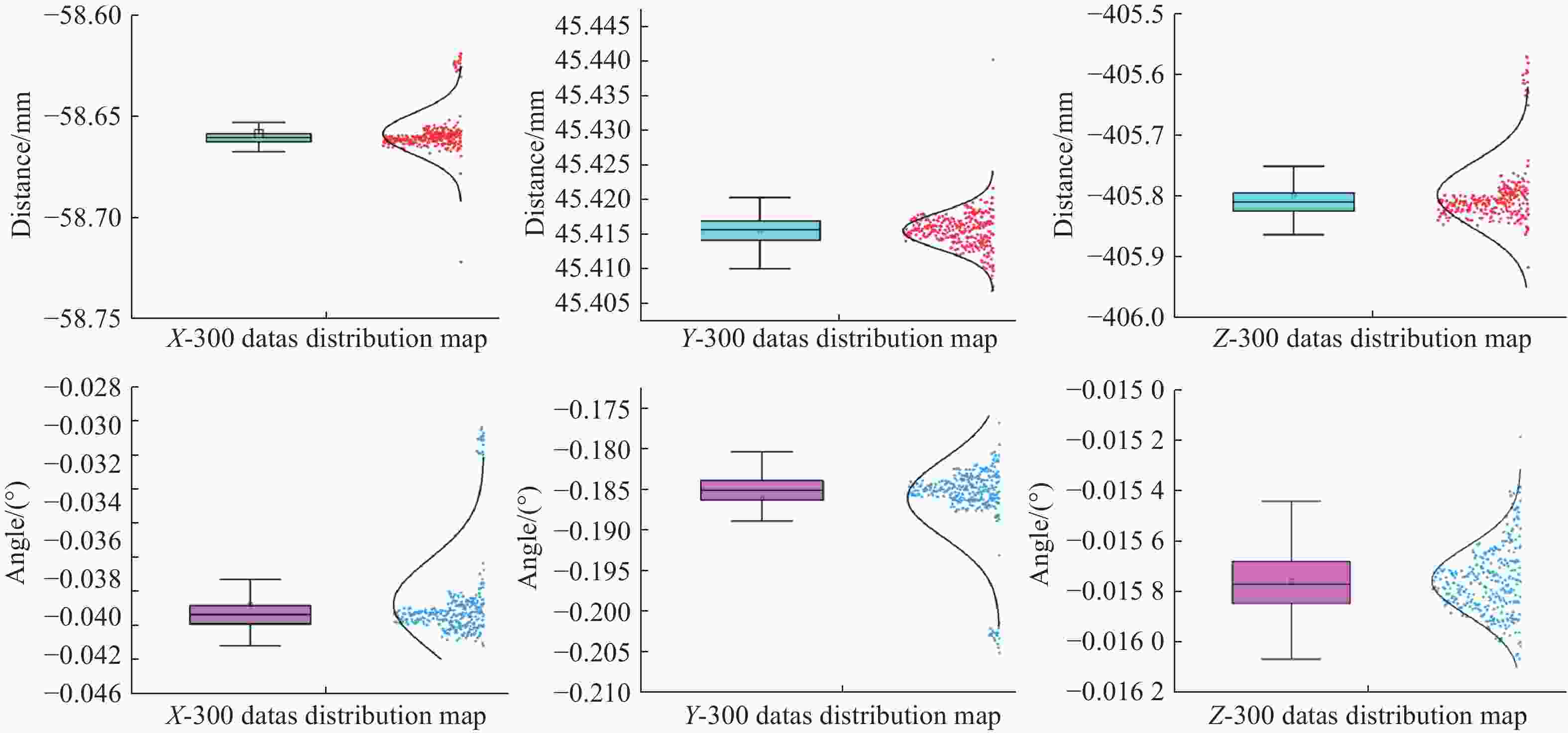

| Parameter | Std | Mean | Max | Min |
| --- | --- | --- | --- | --- |
| T-x (mm) | 0.08 | −58.63 | −58.3670 | −58.72 |
| T-y (mm) | 0.003 | 45.41 | 45.478 | 45.40 |
| T-z (mm) | 0.10 | −405.79 | −402.922 | −405.89 |
| $\varphi$ (°) | 0.003 | −0.041 | 0.0009 | −0.043 |
| $\theta$ (°) | 0.006 | −0.184 | −0.046 | −0.07 |
| $\psi$ (°) | 0.0003 | −0.0157 | −0.007 | −0.0161 |
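To make the range trend across Tables 1 to 7 easier to read, the short sketch below collects the T-z rows and computes the ratio of standard deviation to mean range. The numbers are copied from the tables; the names `TZ_STATS`, `relative_scatter`, and `summarize` are illustrative only. The standard deviation is treated here as a repeatability measure, which is an assumption, since this section does not state how the tabulated statistics relate to ground-truth error.

```python
# (range label, Std of T-z in mm, mean of T-z in mm), copied from Tables 1-7.
TZ_STATS = [
    ("50 m", 52.8736, -50780.0293),
    ("40 m", 19.9998, -40649.4954),
    ("20 m", 4.3640, -20730.6000),
    ("10 m", 4.9600, -10329.8520),
    ("5.6 m", 1.6290, -5583.1500),
    ("1.7 m", 0.1870, -1654.8800),
    ("0.4 m", 0.10, -405.79),
]


def relative_scatter(std_mm, mean_mm):
    """Standard deviation expressed as a fraction of the measured range."""
    return std_mm / abs(mean_mm)


def summarize(stats):
    """Return (label, std in mm, std / |mean|) for each range."""
    return [
        (label, std, relative_scatter(std, mean))
        for label, std, mean in stats
    ]


for label, std, rel in summarize(TZ_STATS):
    print(f"{label:>6}: Std = {std:8.4f} mm, Std/|mean| = {rel:.2e}")
```

Read this way, the along-axis scatter falls from about 52.9 mm at 50 m to 0.10 mm at 0.4 m. The decrease is not strictly monotonic, because the 10 m value (4.96 mm) is slightly larger than the 20 m value (4.36 mm).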

